Abstract

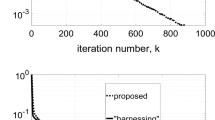

Communication load in heterogeneous edge networks is growing heavier because of excessive computation and the delay caused by straggler dropout, which leads to high electricity costs and serious greenhouse gas emissions. To create a green edge environment, we focus on reducing computation and mitigating straggler dropout to improve communication efficiency during distributed training. We therefore propose a novel scheme named Dynamic Grouping and Heterogeneity-aware Gradient Coding (DGHGC) to shorten the average iteration time, which serves as the metric for how well the scheme reduces computation and mitigates straggler dropout. Specifically, DGHGC first applies static grouping to distribute stragglers evenly across the groups. Because straggler dropout during training makes the distribution of nodes nonuniform, DGHGC then applies dynamic grouping based on the dropout frequency of stragglers. By checking a dropout threshold, dynamic grouping tolerates more stragglers and makes the static grouping of stragglers more reasonable. In addition, DGHGC applies heterogeneity-aware gradient coding, which allocates data to stragglers according to their computing capacity and encodes gradients to prevent stragglers from dropping out. Numerical results demonstrate that DGHGC substantially reduces the average iteration time compared with state-of-the-art benchmark schemes.
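The abstract does not give the grouping or allocation rules, so the following is only a minimal sketch of two of the ideas it names: spreading slow nodes evenly across groups, and allocating data in proportion to computing capacity. The function names, the round-robin grouping rule, and the largest-remainder rounding are assumptions chosen for illustration, not the authors' algorithm, and gradient encoding is not shown.

```python
from typing import Dict, List


def static_grouping(capacities: Dict[str, float], num_groups: int) -> List[List[str]]:
    """Spread nodes across groups so the slowest nodes do not cluster.

    Assumption: nodes are sorted by capacity (slowest first) and dealt
    round-robin, so each group receives a similar share of slow nodes.
    """
    groups: List[List[str]] = [[] for _ in range(num_groups)]
    ordered = sorted(capacities, key=capacities.get)
    for i, node in enumerate(ordered):
        groups[i % num_groups].append(node)
    return groups


def allocate_partitions(capacities: Dict[str, float], total: int) -> Dict[str, int]:
    """Split `total` data partitions across nodes in proportion to capacity.

    Assumption: fractional shares are rounded with the largest-remainder
    method so the allocation always sums to `total`.
    """
    cap_sum = sum(capacities.values())
    shares = {n: total * c / cap_sum for n, c in capacities.items()}
    alloc = {n: int(s) for n, s in shares.items()}
    # Hand out the partitions lost to truncation, largest fractional part first.
    leftover = total - sum(alloc.values())
    by_fraction = sorted(shares, key=lambda n: shares[n] - alloc[n], reverse=True)
    for n in by_fraction[:leftover]:
        alloc[n] += 1
    return alloc


# Hypothetical capacities (relative throughput); "a" and "b" are the slow nodes.
capacities = {"a": 1.0, "b": 1.0, "c": 2.0, "d": 4.0}
groups = static_grouping(capacities, num_groups=2)
allocation = allocate_partitions(capacities, total=10)
```

With these hypothetical capacities, the two slowest nodes land in different groups, and faster nodes receive proportionally more partitions, so a slow node is less likely to hold up an iteration.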

Acknowledgements

This work is supported by the Key Research and Development Program of China (No. 2022YFC3005400), the Key Research and Development Program of China, Yunnan Province (No. 202203AA080009), the National Natural Science Foundation of China (No. 61902110), the Postgraduate Research & Practice Innovation Program of Jiangsu Province (No. KYCX22_0610), the Fundamental Research Funds for the Central Universities (No. B210203024), the Transformation Program of Scientific and Technological Achievements of Jiangsu Province (No. BA2021002), the Key Research and Development Program of China, Jiangsu Province (No. BE2020729), and the Key Technology Project of China Huaneng Group (Grant No. HNKJ20-H46).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Mao, Y., Wu, J., He, X., Ping, P., Huang, J. (2022). Communication Optimization in Heterogeneous Edge Networks Using Dynamic Grouping and Gradient Coding. In: Wang, L., Segal, M., Chen, J., Qiu, T. (eds) Wireless Algorithms, Systems, and Applications. WASA 2022. Lecture Notes in Computer Science, vol 13473. Springer, Cham. https://doi.org/10.1007/978-3-031-19211-1_4

DOI: https://doi.org/10.1007/978-3-031-19211-1_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19210-4

Online ISBN: 978-3-031-19211-1

eBook Packages: Computer Science (R0)