Abstract

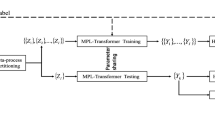

In this work, we consider the problem of cross-domain 3D action recognition in the open-set setting, which has rarely been explored. Specifically, there is a source domain and a target domain that contain skeleton sequences with different styles and categories, and our goal is to cluster the target data by utilizing the labeled source data and the unlabeled target data. For this challenging task, we present a novel approach dubbed CoDT that collaboratively clusters domain-shared features and target-specific features. CoDT consists of two parallel branches. One branch learns domain-shared features with supervised learning in the source domain, while the other learns target-specific features using contrastive learning in the target domain. To cluster the features, we propose an online clustering algorithm that simultaneously promotes robust pseudo-label generation and feature clustering. Furthermore, to leverage the complementarity of domain-shared and target-specific features, we propose a novel collaborative clustering strategy that enforces pair-wise relationship consistency between the two branches. We conduct extensive experiments on multiple cross-domain 3D action recognition datasets, and the results demonstrate the effectiveness of our method. Code is at https://github.com/canbaoburen/CoDT.
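To make the idea of pair-wise relationship consistency concrete, the following is a minimal sketch and not the CoDT implementation. It assumes that each branch outputs a cluster-probability vector per sample and that consistency is measured as the mean squared difference between the two branches' pair-wise similarity matrices. The function names and the squared-error form are illustrative assumptions; the released code may use a different objective.

```python
# Hypothetical sketch: pair-wise relationship consistency between two branches.
# Assumption: each branch yields one cluster-probability vector per sample.

def pairwise_similarity(probs):
    """Return the n x n matrix of inner products between probability vectors."""
    return [[sum(a * b for a, b in zip(p, q)) for q in probs] for p in probs]

def consistency_loss(probs_a, probs_b):
    """Mean squared difference between the two branches' similarity matrices.

    Illustrative choice only; not taken from the paper.
    """
    sim_a = pairwise_similarity(probs_a)
    sim_b = pairwise_similarity(probs_b)
    n = len(sim_a)
    total = sum(
        (sim_a[i][j] - sim_b[i][j]) ** 2 for i in range(n) for j in range(n)
    )
    return total / (n * n)

# Two samples, two clusters. Branch B uses swapped cluster indices but
# groups the samples identically, so the pair-wise relations agree.
branch_a = [[1.0, 0.0], [1.0, 0.0]]
branch_b_swapped = [[0.0, 1.0], [0.0, 1.0]]
# Here branch B places the two samples in different clusters.
branch_b_split = [[1.0, 0.0], [0.0, 1.0]]

loss_agree = consistency_loss(branch_a, branch_b_swapped)
loss_disagree = consistency_loss(branch_a, branch_b_split)
```

Comparing pair-wise similarities rather than cluster indices has a practical motivation: the two branches cluster independently, so their cluster indices need not correspond, whereas the question of whether two samples fall in the same cluster is unaffected by how the clusters are numbered.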

Notes

1. In fact, as proven in [66], even if the exact number of ground-truth categories is unknown, we can overcluster to a larger number of clusters.

References

Asano, Y.M., Rupprecht, C., Vedaldi, A.: Self-labelling via simultaneous clustering and representation learning. In: ICLR (2020)

Ben-David, S., Blitzer, J., Crammer, K., Kulesza, A., Pereira, F., Vaughan, J.W.: A theory of learning from different domains. Mach. Learn. 151–175 (2009). https://doi.org/10.1007/s10994-009-5152-4

Ben-David, S., Blitzer, J., Crammer, K., Pereira, F., et al.: Analysis of representations for domain adaptation. In: NIPS (2007)

Blum, A., Mitchell, T.: Combining labeled and unlabeled data with co-training. In: COLT, pp. 92–100 (1998)

Cao, Z., Simon, T., Wei, S.E., Sheikh, Y.: Realtime multi-person 2d pose estimation using part affinity fields. In: CVPR (2017)

Caron, M., Bojanowski, P., Joulin, A., Douze, M.: Deep clustering for unsupervised learning of visual features. In: ECCV (2018)

Caron, M., Bojanowski, P., Mairal, J., Joulin, A.: Unsupervised pre-training of image features on non-curated data. In: ICCV (2019)

Caron, M., Misra, I., Mairal, J., Goyal, P., Bojanowski, P., Joulin, A.: Unsupervised learning of visual features by contrasting cluster assignments. In: NIPS (2020)

Carreira, J., Noland, E., Hillier, C., Zisserman, A.: A short note on the kinetics-700 human action dataset. arXiv preprint arXiv:1907.06987 (2019)

Chang, J., Wang, L., Meng, G., Xiang, S., Pan, C.: Deep adaptive image clustering. In: ICCV (2017)

Chen, T., Kornblith, S., Norouzi, M., Hinton, G.: A simple framework for contrastive learning of visual representations. In: ICML (2020)

Chen, X., Fan, H., Girshick, R., He, K.: Improved baselines with momentum contrastive learning. arXiv preprint arXiv:2003.04297 (2020)

Cui, S., Wang, S., Zhuo, J., Su, C., Huang, Q., Tian, Q.: Gradually vanishing bridge for adversarial domain adaptation. In: CVPR (2020)

Cuturi, M.: Sinkhorn distances: Lightspeed computation of optimal transport. In: NIPS (2013)

Deng, W., Zheng, L., Ye, Q., Kang, G., Yang, Y., Jiao, J.: Image-image domain adaptation with preserved self-similarity and domain-dissimilarity for person re-identification. In: CVPR (2018)

Doersch, C., Gupta, A., Efros, A.A.: Unsupervised visual representation learning by context prediction. In: ICCV (2015)

Fan, H., Zheng, L., Yan, C., Yang, Y.: Unsupervised person re-identification: clustering and fine-tuning. ACM Trans. Multimedia Comput. Commun. Appl. 14(4), 1–18 (2018)

Fankhauser, P., Bloesch, M., Rodriguez, D., Kaestner, R., Hutter, M., Siegwart, R.: Kinect v2 for mobile robot navigation: Evaluation and modeling. In: ICAR (2015)

Fini, E., Sangineto, E., Lathuilière, S., Zhong, Z., Nabi, M., Ricci, E.: A unified objective for novel class discovery. In: ICCV (2021)

Ganin, Y., et al.: Domain-adversarial training of neural networks. J. Mach. Learn. Res. 17(1), 2030–2096 (2016)

Ge, Y., Chen, D., Li, H.: Mutual mean-teaching: pseudo label refinery for unsupervised domain adaptation on person re-identification. In: ICLR (2019)

Ghifary, M., Kleijn, W.B., Zhang, M., Balduzzi, D.: Domain generalization for object recognition with multi-task autoencoders. In: ICCV (2015)

Grill, J.B., et al.: Bootstrap your own latent: a new approach to self-supervised learning. In: NIPS (2020)

Guo, Y., et al.: A broader study of cross-domain few-shot learning. In: ECCV (2020)

Gupta, P., et al.: Quo vadis, skeleton action recognition? Int. J. Comput. Vision 129(7), 2097–2112 (2021)

Han, B., et al.: Co-teaching: robust training of deep neural networks with extremely noisy labels. In: NIPS (2018)

Han, K., Rebuffi, S.A., Ehrhardt, S., Vedaldi, A., Zisserman, A.: Automatically discovering and learning new visual categories with ranking statistics. In: ICLR (2019)

He, K., Fan, H., Wu, Y., Xie, S., Girshick, R.: Momentum contrast for unsupervised visual representation learning. In: CVPR (2020)

Hornik, K., Feinerer, I., Kober, M., Buchta, C.: Spherical k-means clustering. J. Stat. Softw. 50, 1–22 (2012)

Huang, J., Gong, S., Zhu, X.: Deep semantic clustering by partition confidence maximisation. In: CVPR (2020)

Huang, Y., Peng, P., Jin, Y., Xing, J., Lang, C., Feng, S.: Domain adaptive attention model for unsupervised cross-domain person re-identification. In: AAAI (2019)

Islam, A., Chen, C.F., Panda, R., Karlinsky, L., Feris, R., Radke, R.J.: Dynamic distillation network for cross-domain few-shot recognition with unlabeled data. In: NIPS (2021)

Islam, A., Chen, C.F., Panda, R., Karlinsky, L., Radke, R., Feris, R.: A broad study on the transferability of visual representations with contrastive learning. In: ICCV (2021)

Ji, X., Henriques, J.F., Vedaldi, A.: Invariant information clustering for unsupervised image classification and segmentation. In: ICCV (2019)

Ke, Q., Bennamoun, M., An, S., Sohel, F., Boussaid, F.: A new representation of skeleton sequences for 3d action recognition. In: CVPR (2017)

Khosla, P., et al.: Supervised contrastive learning. In: NIPS (2020)

Kocabas, M., Athanasiou, N., Black, M.J.: Vibe: Video inference for human body pose and shape estimation. In: CVPR (2020)

Kundu, J.N., Gor, M., Uppala, P.K., Radhakrishnan, V.B.: Unsupervised feature learning of human actions as trajectories in pose embedding manifold. In: WACV (2019)

Laine, S., Aila, T.: Temporal ensembling for semi-supervised learning. In: ICLR (2016)

Li, B., Li, X., Zhang, Z., Wu, F.: Spatio-temporal graph routing for skeleton-based action recognition. In: AAAI (2019)

Li, C., Zhong, Q., Xie, D., Pu, S.: Skeleton-based action recognition with convolutional neural networks. In: 2017 IEEE International Conference on Multimedia & Expo Workshops, pp. 597–600. IEEE (2017)

Li, J., Li, G., Shi, Y., Yu, Y.: Cross-domain adaptive clustering for semi-supervised domain adaptation. In: CVPR (2021)

Li, J., Zhang, Y., Wang, Z., Tu, K.: Semantic-aware representation learning via probability contrastive loss. arXiv preprint arXiv:2111.06021 (2021)

Li, L., Wang, M., Ni, B., Wang, H., Yang, J., Zhang, W.: 3d human action representation learning via cross-view consistency pursuit. In: CVPR (2021)

Lin, L., Song, S., Yang, W., Liu, J.: Ms2l: multi-task self-supervised learning for skeleton based action recognition. In: ACMMM (2020)

Lin, S., Li, H., Li, C.T., Kot, A.C.: Multi-task mid-level feature alignment network for unsupervised cross-dataset person re-identification. In: BMVC (2018)

Liu, C., Hu, Y., Li, Y., Song, S., Liu, J.: Pku-mmd: a large scale benchmark for continuous multi-modal human action understanding. arXiv preprint arXiv:1703.07475 (2017)

Liu, J., Shahroudy, A., Perez, M., Wang, G., Duan, L.Y., Kot, A.C.: NTU RGB+D 120: a large-scale benchmark for 3d human activity understanding. IEEE Trans. Pattern Anal. Mach. Intell. 42(10), 2684–2701 (2019)

Liu, X., Zhang, S.: Domain adaptive person re-identification via coupling optimization. In: ACMMM (2020)

Liu, Z., Zhang, H., Chen, Z., Wang, Z., Ouyang, W.: Disentangling and unifying graph convolutions for skeleton-based action recognition. In: CVPR (2020)

Mekhazni, D., Bhuiyan, A., Ekladious, G., Granger, E.: Unsupervised domain adaptation in the dissimilarity space for person re-identification. In: ECCV (2020)

Misra, I., Maaten, L.V.D.: Self-supervised learning of pretext-invariant representations. In: CVPR (2020)

Nie, Q., Liu, Z., Liu, Y.: Unsupervised 3d human pose representation with viewpoint and pose disentanglement. In: ECCV (2020)

Park, S., et al.: Improving unsupervised image clustering with robust learning. In: CVPR (2021)

Pathak, D., Krahenbuhl, P., Donahue, J., Darrell, T., Efros, A.A.: Context encoders: feature learning by inpainting. In: CVPR (2016)

Phoo, C.P., Hariharan, B.: Self-training for few-shot transfer across extreme task differences. In: ICLR (2020)

Qiao, S., Shen, W., Zhang, Z., Wang, B., Yuille, A.: Deep co-training for semi-supervised image recognition. In: ECCV (2018)

Shahroudy, A., Liu, J., Ng, T.T., Wang, G.: NTU RGB+D: a large scale dataset for 3d human activity analysis. In: CVPR (2016)

Shi, L., Zhang, Y., Cheng, J., Lu, H.: Two-stream adaptive graph convolutional networks for skeleton-based action recognition. In: CVPR (2019)

Sohn, K., et al.: Fixmatch: simplifying semi-supervised learning with consistency and confidence. In: NIPS (2020)

Song, S., Lan, C., Xing, J., Zeng, W., Liu, J.: Spatio-temporal attention-based LSTM networks for 3d action recognition and detection. IEEE Trans. Image Process. 27(7), 3459–3471 (2018)

Su, K., Liu, X., Shlizerman, E.: Predict & cluster: unsupervised skeleton based action recognition. In: CVPR (2020)

Su, Y., Lin, G., Wu, Q.: Self-supervised 3d skeleton action representation learning with motion consistency and continuity. In: ICCV (2021)

Tarvainen, A., Valpola, H.: Mean teachers are better role models: weight-averaged consistency targets improve semi-supervised deep learning results. In: NIPS (2017)

Tseng, H.Y., Lee, H.Y., Huang, J.B., Yang, M.H.: Cross-domain few-shot classification via learned feature-wise transformation. In: ICLR (2019)

Van Gansbeke, W., Vandenhende, S., Georgoulis, S., Proesmans, M., Van Gool, L.: Scan: learning to classify images without labels. In: ECCV (2020)

Wang, T., Isola, P.: Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In: ICML (2020)

Wei, L., Zhang, S., Gao, W., Tian, Q.: Person transfer GAN to bridge domain gap for person re-identification. In: CVPR (2018)

Wilson, G., Cook, D.J.: A survey of unsupervised deep domain adaptation. ACM Trans. Intell. Syst. Technol. 11(5), 1–46 (2020)

Xie, J., Girshick, R., Farhadi, A.: Unsupervised deep embedding for clustering analysis. In: ICML (2016)

Xie, Q., Luong, M.T., Hovy, E., Le, Q.V.: Self-training with noisy student improves imagenet classification. In: CVPR (2020)

Xu, S., Rao, H., Hu, X., Hu, B.: Prototypical contrast and reverse prediction: unsupervised skeleton based action recognition. arXiv preprint arXiv:2011.07236 (2020)

Yan, H., Ding, Y., Li, P., Wang, Q., Xu, Y., Zuo, W.: Mind the class weight bias: weighted maximum mean discrepancy for unsupervised domain adaptation. In: CVPR (2017)

Yan, S., Xiong, Y., Lin, D.: Spatial temporal graph convolutional networks for skeleton-based action recognition. In: AAAI (2018)

Yang, D., Wang, Y., Dantcheva, A., Garattoni, L., Francesca, G., Bremond, F.: Unik: a unified framework for real-world skeleton-based action recognition. In: BMVC (2021)

Yang, S., Liu, J., Lu, S., Er, M.H., Kot, A.C.: Skeleton cloud colorization for unsupervised 3d action representation learning. In: ICCV (2021)

Yao, F.: Cross-domain few-shot learning with unlabelled data. arXiv preprint arXiv:2101.07899 (2021)

Zhai, Y., Ye, Q., Lu, S., Jia, M., Ji, R., Tian, Y.: Multiple expert brainstorming for domain adaptive person re-identification. In: ECCV (2020)

Zhang, P., Lan, C., Xing, J., Zeng, W., Xue, J., Zheng, N.: View adaptive recurrent neural networks for high performance human action recognition from skeleton data. In: ICCV (2017)

Zhang, P., Lan, C., Zeng, W., Xing, J., Xue, J., Zheng, N.: Semantics-guided neural networks for efficient skeleton-based human action recognition. In: CVPR (2020)

Zhang, R., Isola, P., Efros, A.A.: Colorful image colorization. In: ECCV (2016)

Zhang, X., Cao, J., Shen, C., You, M.: Self-training with progressive augmentation for unsupervised cross-domain person re-identification. In: ICCV (2019)

Zhang, Z.: Microsoft kinect sensor and its effect. IEEE Multimedia 19(2), 4–10 (2012)

Zhao, B., Han, K.: Novel visual category discovery with dual ranking statistics and mutual knowledge distillation. In: NIPS (2021)

Zhao, F., Liao, S., Xie, G.S., Zhao, J., Zhang, K., Shao, L.: Unsupervised domain adaptation with noise resistible mutual-training for person re-identification. In: ECCV (2020)

Zhao, L., et al.: Learning view-disentangled human pose representation by contrastive cross-view mutual information maximization. In: CVPR (2021)

Zheng, K., Liu, W., He, L., Mei, T., Luo, J., Zha, Z.J.: Group-aware label transfer for domain adaptive person re-identification. In: CVPR (2021)

Zheng, N., Wen, J., Liu, R., Long, L., Dai, J., Gong, Z.: Unsupervised representation learning with long-term dynamics for skeleton based action recognition. In: AAAI (2018)

Zhong, Z., Zheng, L., Luo, Z., Li, S., Yang, Y.: Invariance matters: exemplar memory for domain adaptive person re-identification. In: CVPR (2019)

Acknowledgement

This work is supported by the National Natural Science Foundation of China under Grant No. 62176246 and No. 61836008.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, Q., Wang, Z. (2022). Collaborating Domain-Shared and Target-Specific Feature Clustering for Cross-domain 3D Action Recognition. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13664. Springer, Cham. https://doi.org/10.1007/978-3-031-19772-7_9

DOI: https://doi.org/10.1007/978-3-031-19772-7_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19771-0

Online ISBN: 978-3-031-19772-7

eBook Packages: Computer Science (R0)