Abstract

In hash-based image retrieval systems, degraded or transformed inputs usually produce hash codes that differ from those of the original image, which lowers retrieval accuracy. Data augmentation during training can mitigate this issue. However, even when augmented samples of an image are similar in the real-valued feature space, quantization can scatter them far apart in Hamming space. The resulting representation discrepancies can impede training and degrade performance. In this work, we propose a novel self-distilled hashing scheme that minimizes this discrepancy while exploiting the potential of augmented data. By transferring the hash knowledge of weakly-transformed samples to strongly-transformed ones, we make the hash codes insensitive to various transformations. We also introduce hash proxy-based similarity learning and a binary cross-entropy-based quantization loss to produce high-quality hash codes. Ultimately, we construct a deep hashing framework that not only improves existing deep hashing approaches but also achieves state-of-the-art retrieval results. Extensive experiments confirm the effectiveness of our work. Code is available at https://github.com/youngkyunJang/Deep-Hash-Distillation.
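The discrepancy between real-valued feature space and Hamming space described above can be illustrated with a small sketch. It is not taken from the paper or its code release: the two 8-dimensional vectors are made-up values standing in for the network outputs of a weakly and a strongly transformed view of the same image, and sign-based binarization is assumed as the quantizer.

```python
import math


def sign_quantize(features):
    """Binarize a real-valued vector into a {-1, +1} code (assumed quantizer)."""
    return [1 if x >= 0 else -1 for x in features]


def hamming_distance(code_a, code_b):
    """Count the positions at which two equal-length codes differ."""
    return sum(a != b for a, b in zip(code_a, code_b))


def cosine_similarity(u, v):
    """Cosine similarity between two real-valued vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical outputs for two augmented views of one image.
# Most entries sit close to zero, so a small perturbation flips their sign.
weak_view = [0.05, -0.04, 0.03, -0.02, 0.90, -0.80, 0.06, -0.05]
strong_view = [-0.05, 0.04, -0.03, 0.02, 0.90, -0.80, -0.06, 0.05]

cos = cosine_similarity(weak_view, strong_view)
dist = hamming_distance(sign_quantize(weak_view), sign_quantize(strong_view))

print(f"cosine similarity in feature space: {cos:.3f}")
print(f"Hamming distance between codes: {dist} of {len(weak_view)} bits")
```

In this toy case the two views are nearly identical in feature space, yet six of the eight bits differ after binarization. A distillation-style objective aims to pull the code of the strongly transformed view toward that of the weakly transformed view; the exact loss used by the authors is not reproduced here.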

Notes

1. Refer to the supplementary material for the proof.

2. We provide a pseudo-code implementation in the supplementary material.

3. The details of each dataset are described in the supplementary material.

4. More details can be found in the supplementary material.

5. Visualized results with all classes for each dataset are shown in the supplementary material.

6. The detailed deformation setup is listed in the supplementary material.

References

Bai, J., et al.: Targeted attack for deep hashing based retrieval. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12346, pp. 618–634. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58452-8_36

Boudiaf, M., et al.: A unifying mutual information view of metric learning: cross-entropy vs. pairwise losses. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12351, pp. 548–564. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58539-6_33

Cao, Y., Long, M., Liu, B., Wang, J.: Deep cauchy hashing for hamming space retrieval. In: CVPR, pp. 1229–1237 (2018)

Cao, Z., Long, M., Wang, J., Yu, P.S.: HashNet: Deep learning to hash by continuation. In: CVPR, pp. 5608–5617 (2017)

Caron, M., et al.: Emerging properties in self-supervised vision transformers. In: ICCV, pp. 9650–9660 (2021)

Chen, T., Kornblith, S., Norouzi, M., Hinton, G.: A simple framework for contrastive learning of visual representations. arXiv preprint arXiv:2002.05709 (2020)

Chen, W.Y., Liu, Y.C., Kira, Z., Wang, Y.C.F., Huang, J.B.: A closer look at few-shot classification. ICLR (2019)

Chen, X., He, K.: Exploring simple Siamese representation learning. In: CVPR, pp. 15750–15758 (2021)

Chen, Z.M., Wei, X.S., Wang, P., Guo, Y.: Multi-label image recognition with graph convolutional networks. In: CVPR, pp. 5177–5186 (2019)

Chua, T.S., Tang, J., Hong, R., Li, H., Luo, Z., Zheng, Y.: Nus-wide: a real-world web image database from national university of Singapore. In: ACM ICMR, pp. 1–9 (2009)

Cui, Q., Jiang, Q.-Y., Wei, X.-S., Li, W.-J., Yoshie, O.: ExchNet: a unified hashing network for large-scale fine-grained image retrieval. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12348, pp. 189–205. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58580-8_12

Dosovitskiy, A., et al.: An image is worth 16 \(\times \) 16 words: transformers for image recognition at scale. In: ICLR (2020)

Riba, E.: Kornia: an open source differentiable computer vision library for Pytorch. In: WACV (2020). arxiv.org/pdf/1910.02190pdf

Fan, L., Ng, K., Ju, C., Zhang, T., Chan, C.S.: Deep polarized network for supervised learning of accurate binary hashing codes. In: IJCAI, pp. 825–831 (2020)

Gong, Y., Lazebnik, S., Gordo, A., Perronnin, F.: Iterative quantization: a procrustean approach to learning binary codes for large-scale image retrieval. PAMI 35(12), 2916–2929 (2012)

Gu, G., Ko, B., Kim, H.G.: Proxy synthesis: Learning with synthetic classes for deep metric learning. In: AAAI (2021)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016)

Hinton, G., Vinyals, O., Dean, J.: Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531 (2015)

Hoe, J.T., et al.: One loss for all: deep hashing with a single cosine similarity based learning objective. NeurIPS 34 (2021)

Jang, Y.K., Cho, N.I.: Deep face image retrieval for cancelable biometric authentication. In: AVSS, pp. 1–8. IEEE (2019)

Jang, Y.K., Cho, N.I.: Generalized product quantization network for semi-supervised image retrieval. In: CVPR, pp. 3420–3429 (2020)

Jang, Y.K., Cho, N.I.: Self-supervised product quantization for deep unsupervised image retrieval. In: ICCV, pp. 12085–12094 (2021)

Jang, Y.K., Jeong, D., Lee, S.H., Cho, N.I.: Deep clustering and block hashing network for face image retrieval. In: Jawahar, C.V., Li, H., Mori, G., Schindler, K. (eds.) ACCV 2018. LNCS, vol. 11366, pp. 325–339. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-20876-9_21

Jeong, D.j., Choo, S.K., Seo, W., Cho, N.I.: Classification-based supervised hashing with complementary networks for image search. In: BMVC, p. 74 (2018)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: ICLR (2015)

Koch, G., Zemel, R., Salakhutdinov, R., et al.: Siamese neural networks for one-shot image recognition. In: ICML deep learning workshop, vol. 2. Lille (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: NeurIPS, pp. 1097–1105 (2012)

Lai, H., Pan, Y., Liu, Y., Yan, S.: Simultaneous feature learning and hash coding with deep neural networks. In: CVPR, pp. 3270–3278 (2015)

Li, C., Deng, C., Li, N., Liu, W., Gao, X., Tao, D.: Self-supervised adversarial hashing networks for cross-modal retrieval. In: CVPR, pp. 4242–4251 (2018)

Li, Y., van Gemert, J.: Deep unsupervised image hashing by maximizing bit entropy. In: AAAI (2020)

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

Liu, S., Wang, Y.: Few-shot learning with online self-distillation. In: ICCV, pp. 1067–1070 (2021)

Liu, W., Wang, J., Ji, R., Jiang, Y.G., Chang, S.F.: Supervised hashing with kernels. In: CVPR, pp. 2074–2081. IEEE (2012)

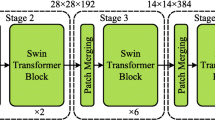

Liu, Z., et al.: Swin transformer: hierarchical vision transformer using shifted windows. In: ICCV (2021)

Loshchilov, I., Hutter, F.: SGDR: stochastic gradient descent with warm restarts. arXiv preprint arXiv:1608.03983 (2016)

Movshovitz-Attias, Y., Toshev, A., Leung, T.K., Ioffe, S., Singh, S.: No fuss distance metric learning using proxies. In: ICCV, pp. 360–368 (2017)

Russakovsky, O., et al.: ImageNet large scale visual recognition challenge. IJCV 115(3), 211–252 (2015)

Shen, F., Shen, C., Liu, W., Tao Shen, H.: Supervised discrete hashing. In: CVPR, pp. 37–45 (2015)

Shen, Y., et al.: Auto-encoding twin-bottleneck hashing. In: CVPR, pp. 2818–2827 (2020)

Touvron, H., Cord, M., Douze, M., Massa, F., Sablayrolles, A., Jégou, H.: Training data-efficient image transformers & distillation through attention. In: ICML, pp. 10347–10357. PMLR (2021)

Wang, J., Zhang, T., Sebe, N., Shen, H.T., et al.: A survey on learning to hash. PAMI 40(4), 769–790 (2017)

Wang, Z., Zheng, Q., Lu, J., Zhou, J.: Deep hashing with active pairwise supervision. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12364, pp. 522–538. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58529-7_31

Weiss, Y., Torralba, A., Fergus, R.: Spectral hashing. In: NeurIPS, pp. 1753–1760 (2009)

Xia, R., Pan, Y., Lai, H., Liu, C., Yan, S.: Supervised hashing for image retrieval via image representation learning. In: AAAI (2014)

Xu, T.B., Liu, C.L.: Data-distortion guided self-distillation for deep neural networks. In: AAAI, vol. 33, pp. 5565–5572 (2019)

Yang, E., Liu, T., Deng, C., Liu, W., Tao, D.: DistillHash: unsupervised deep hashing by distilling data pairs. In: CVPR, pp. 2946–2955 (2019)

Yuan, L., et al.: Central similarity quantization for efficient image and video retrieval. In: CVPR, pp. 3083–3092 (2020)

Cao, Y.: Deep quantization network for efficient image retrieval. In: AAAI (2016)

Yun, S., Park, J., Lee, K., Shin, J.: Regularizing class-wise predictions via self-knowledge distillation. In: CVPR, pp. 13876–13885 (2020)

Zhou, X., et al.: Graph convolutional network hashing. IEEE Trans. Cybernet. 50(4), 1460–1472 (2018)

Zhu, H., Long, M., Wang, J., Cao, Y.: Deep hashing network for efficient similarity retrieval. In: AAAI (2016)

Acknowledgement

This research was supported in part by NAVER Corporation, the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (2021R1A2C2007220), and the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korean government (MSIT) [No. 2021-0-01343, Artificial Intelligence Graduate School Program (Seoul National University)].

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Jang, Y.K., Gu, G., Ko, B., Kang, I., Cho, N.I. (2022). Deep Hash Distillation for Image Retrieval. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13674. Springer, Cham. https://doi.org/10.1007/978-3-031-19781-9_21

DOI: https://doi.org/10.1007/978-3-031-19781-9_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19780-2

Online ISBN: 978-3-031-19781-9

eBook Packages: Computer Science (R0)