Abstract

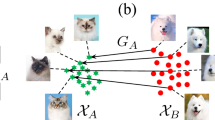

Most image-to-image translation methods require a large number of training images, which restricts their applicability. We instead propose ManiFest: a framework for few-shot image translation that learns a context-aware representation of a target domain from only a few images. To enforce feature consistency, our framework learns a style manifold between the source domain and additional anchor domains (assumed to contain large numbers of images). The learned manifold is interpolated and deformed towards the few-shot target domain via patch-based adversarial and feature-statistics alignment losses. All of these components are trained simultaneously in a single end-to-end loop. In addition to the general few-shot translation task, our approach can alternatively be conditioned on a single exemplar image to reproduce its specific style. Extensive experiments demonstrate the efficacy of ManiFest on multiple tasks, where it outperforms the state of the art on all metrics. Our code is available at https://github.com/cv-rits/ManiFest.
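The abstract compresses two mechanisms into a single sentence: interpolating a style manifold spanned by anchor domains, and aligning feature statistics with the few-shot target. The following plain-Python sketch illustrates both ideas under explicitly stated assumptions. It is not ManiFest's implementation (the official repository linked above is the reference for that). The function names `channel_stats`, `stats_alignment_loss`, `softmax` and `interpolate_styles` are hypothetical, feature maps are toy lists of per-channel activations, the interpolation is assumed to be a softmax-weighted convex combination of anchor style vectors, and the alignment loss is assumed to be the AdaIN-style squared distance between channel means and standard deviations. The patch-based adversarial loss and the manifold deformation are omitted.

```python
import math
from typing import List, Sequence, Tuple

# Toy representation (assumption): a feature map is a list of channels,
# each channel a flat list of activations over spatial positions.
FeatureMap = Sequence[Sequence[float]]


def channel_stats(features: FeatureMap) -> Tuple[List[float], List[float]]:
    """Per-channel mean and standard deviation over spatial positions."""
    means, stds = [], []
    for channel in features:
        mu = sum(channel) / len(channel)
        var = sum((x - mu) ** 2 for x in channel) / len(channel)
        means.append(mu)
        stds.append(math.sqrt(var + 1e-8))  # small epsilon keeps the std strictly positive
    return means, stds


def stats_alignment_loss(generated: FeatureMap, target: FeatureMap) -> float:
    """Hypothetical feature-statistics alignment loss (AdaIN-style):
    squared distance between channel means plus squared distance between
    channel standard deviations of generated and target features."""
    g_mu, g_sigma = channel_stats(generated)
    t_mu, t_sigma = channel_stats(target)
    mu_term = sum((a - b) ** 2 for a, b in zip(g_mu, t_mu))
    sigma_term = sum((a - b) ** 2 for a, b in zip(g_sigma, t_sigma))
    return mu_term + sigma_term


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def interpolate_styles(anchor_styles: Sequence[Sequence[float]],
                       logits: Sequence[float]) -> List[float]:
    """Hypothetical manifold interpolation: a convex combination of
    anchor-domain style vectors, weighted by softmax(logits). In training,
    the logits would be learned so the mixed style moves toward the target."""
    weights = softmax(logits)
    dim = len(anchor_styles[0])
    return [sum(w * style[i] for w, style in zip(weights, anchor_styles))
            for i in range(dim)]
```

For example, `interpolate_styles([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])` weights both anchors equally and returns `[0.5, 0.5]`, while `stats_alignment_loss([[0.0, 2.0]], [[1.0, 3.0]])` returns `1.0` because the two channels share the same standard deviation and differ only by a unit mean shift. In a real training loop these quantities would be computed on network features inside an autodiff framework, so that the interpolation weights and style vectors receive gradients.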

Acknowledgements

This work was partly funded by Vislab Ambarella, the French project SIGHT (ANR-20-CE23-0016), and received support from Service de coopération et d’action culturelle du Consulat général de France à Québec. It used HPC resources from GENCI-IDRIS (Grant 2021-AD011012808).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Pizzati, F., Lalonde, JF., de Charette, R. (2022). ManiFest: Manifold Deformation for Few-Shot Image Translation. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13677. Springer, Cham. https://doi.org/10.1007/978-3-031-19790-1_27

DOI: https://doi.org/10.1007/978-3-031-19790-1_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19789-5

Online ISBN: 978-3-031-19790-1

eBook Packages: Computer Science, Computer Science (R0)