Abstract

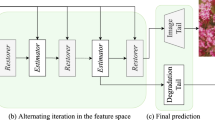

Although current deep learning-based methods have achieved promising performance on the blind single image super-resolution (SISR) task, most of them focus on heuristically constructing diverse network architectures and put less emphasis on explicitly embedding the physical generation mechanism between blur kernels and high-resolution (HR) images. To alleviate this issue, we propose a model-driven deep neural network, called KXNet, for blind SISR. Specifically, to solve the classical SISR model, we propose a simple yet effective iterative algorithm. Then, by unfolding the iterative steps into corresponding network modules, we naturally construct KXNet. The main distinguishing feature of KXNet is that the entire learning process is fully and explicitly integrated with the inherent physical mechanism underlying the SISR task. Thus, the learned blur kernel has clear physical patterns, and the mutually iterative process between the blur kernel and the HR image can soundly guide KXNet to evolve in the right direction. Extensive experiments on synthetic and real data demonstrate the superior accuracy and generality of our method over current representative state-of-the-art blind SISR methods. Code is available at: https://github.com/jiahong-fu/KXNet.
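The abstract does not spell out the update rules, so the following is only a minimal sketch of the general idea: a blur-then-downsample degradation model and plain alternating gradient steps on the kernel and the HR image. The circular (FFT) boundary handling, the noise-free data term, the step sizes, the clip-and-renormalize kernel constraint, and all function names are assumptions made for illustration. It is not the authors' algorithm, and it has no learned modules.

```python
import numpy as np

def pad_kernel(k, shape):
    """Embed kernel k in a zero array of `shape`, centred circularly at index (0, 0)."""
    full = np.zeros(shape)
    kh, kw = k.shape
    full[:kh, :kw] = k
    return np.roll(full, (-(kh // 2), -(kw // 2)), axis=(0, 1))

def crop_kernel(full, ksize):
    """Adjoint (and left inverse) of pad_kernel: read the kernel support back out."""
    kh, kw = ksize
    return np.roll(full, (kh // 2, kw // 2), axis=(0, 1))[:kh, :kw]

def degrade(x, k, s):
    """Assumed degradation: circular blur of x with k, then keep every s-th pixel."""
    K = np.fft.fft2(pad_kernel(k, x.shape))
    blurred = np.real(np.fft.ifft2(np.fft.fft2(x) * K))
    return blurred[::s, ::s]

def back_project(r, other, s, shape):
    """Adjoint of degrade w.r.t. one factor, holding the full-size `other` fixed."""
    up = np.zeros(shape)
    up[::s, ::s] = r  # zero-insertion upsampling is the adjoint of subsampling
    return np.real(np.fft.ifft2(np.conj(np.fft.fft2(other)) * np.fft.fft2(up)))

def objective(x, k, y, s):
    """Data-fidelity term 0.5 * ||degrade(x, k) - y||^2."""
    return 0.5 * np.sum((degrade(x, k, s) - y) ** 2)

def x_step(x, k, y, s, step=1.0):
    """One gradient step on the HR image with the kernel held fixed."""
    r = degrade(x, k, s) - y
    return x - step * back_project(r, pad_kernel(k, x.shape), s, x.shape)

def k_step(x, k, y, s):
    """One gradient step on the kernel, then clip to >= 0 and renormalize to sum 1."""
    r = degrade(x, k, s) - y
    grad = crop_kernel(back_project(r, x, s, x.shape), k.shape)
    step = 1.0 / (np.max(np.abs(np.fft.fft2(x))) ** 2 + 1e-12)
    k_new = np.clip(k - step * grad, 0.0, None)
    return k_new / max(k_new.sum(), 1e-12)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_true = rng.random((16, 16))
    k_true = rng.random((5, 5))
    k_true /= k_true.sum()
    y = degrade(x_true, k_true, s=2)

    x = np.kron(y, np.ones((2, 2)))   # nearest-neighbour initial guess
    k = np.full((5, 5), 1.0 / 25.0)   # flat initial kernel
    for _ in range(20):               # alternate kernel and image updates
        k = k_step(x, k, y, 2)
        x = x_step(x, k, y, 2)
    print("data-fidelity after 20 iterations:", objective(x, k, y, 2))
```

In an unfolded network, each pass through such a loop would become one stage, with the hand-written constraint steps replaced by learned modules. This sketch only illustrates the alternating structure between the two unknowns.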

Notes

1. More derivations are provided in the supplementary material.

2. More analysis is provided in the supplementary material.

3. We set \(\alpha _{t}=\beta _{t}=0.1\) at middle stages, \(\alpha _{T}=\beta _{T}=1\) at the last stage, and \(T=19\).
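
The stage weights in the last note can be sketched as follows. This is an illustration only: the note gives the weight values and \(T=19\), but this section does not state the form of the loss. The mean absolute error, the pairing of \(\alpha_t\) with the kernel estimates and \(\beta_t\) with the image estimates, the reading of \(T\) as the number of supervised stages, and the function names are all assumptions.

```python
import numpy as np

def stage_weights(num_stages=19, middle=0.1, last=1.0):
    """Weight 0.1 for every middle stage and 1 for the final stage."""
    w = np.full(num_stages, middle, dtype=float)
    w[-1] = last
    return w

def multistage_loss(k_preds, x_preds, k_true, x_true):
    """Weighted sum of per-stage errors (assumed L1) for kernel and image estimates."""
    if len(k_preds) != len(x_preds):
        raise ValueError("need one kernel and one image estimate per stage")
    alpha = stage_weights(len(k_preds))  # assumed to weight the kernel terms
    beta = stage_weights(len(x_preds))   # assumed to weight the image terms
    loss = 0.0
    for t in range(len(k_preds)):
        loss += alpha[t] * np.mean(np.abs(k_preds[t] - k_true))
        loss += beta[t] * np.mean(np.abs(x_preds[t] - x_true))
    return loss
```

Weighting the last stage ten times more heavily than the others keeps the final output as the main training target while still supervising the intermediate estimates.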

Acknowledgment

This research was supported by the NSFC projects under contracts U21A6005, 61721002, U1811461, and 62076196; the Major Key Project of PCL under contract PCL2021A12; and the Macao Science and Technology Development Fund under grant 061/2020/A2.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Fu, J., Wang, H., Xie, Q., Zhao, Q., Meng, D., Xu, Z. (2022). KXNet: A Model-Driven Deep Neural Network for Blind Super-Resolution. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13679. Springer, Cham. https://doi.org/10.1007/978-3-031-19800-7_14

DOI: https://doi.org/10.1007/978-3-031-19800-7_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19799-4

Online ISBN: 978-3-031-19800-7

eBook Packages: Computer Science, Computer Science (R0)