Abstract

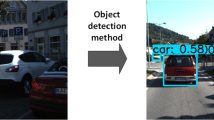

Contemporary deep-learning object detection methods for autonomous driving usually presume a fixed set of categories of common traffic participants, such as pedestrians and cars. Most existing detectors are unable to detect uncommon objects and corner cases (e.g., a dog crossing a street), which may lead to severe accidents in some situations, making the timeline for the real-world application of reliable autonomous driving uncertain. One main obstacle to the development of truly reliable self-driving systems is the lack of public datasets for evaluating the performance of object detectors on corner cases. Hence, we introduce a challenging dataset named CODA that exposes this critical problem of vision-based detectors. The dataset consists of 1500 carefully selected real-world driving scenes, each containing four object-level corner cases (on average), spanning more than 30 object categories. On CODA, the performance of standard object detectors trained on large-scale autonomous driving datasets drops significantly, to no more than 12.8% in mAR. Moreover, we experiment with the state-of-the-art open-world object detector and find that it also fails to reliably identify the novel objects in CODA, suggesting that a robust perception system for autonomous driving is probably still far from reach. We expect our CODA dataset to facilitate further research in reliable detection for real-world autonomous driving. Our dataset is available at https://coda-dataset.github.io.

K. Li, K. Chen and H. Wang—Equal contribution.

Notes

1. We adopt the definition of object-level corner case proposed in [3].

2. KITTI was captured in a mid-size city in Germany, nuScenes in Singapore, and ONCE in various cities in China.

References

Blum, H., Sarlin, P.E., Nieto, J., Siegwart, R., Cadena, C.: The Fishyscapes benchmark: measuring blind spots in semantic segmentation. arXiv preprint arXiv:1904.03215 (2019)

Bogoslavskyi, I., Stachniss, C.: Fast range image-based segmentation of sparse 3D laser scans for online operation. In: IROS (2016)

Breitenstein, J., Termöhlen, J.A., Lipinski, D., Fingscheidt, T.: Corner cases for visual perception in automated driving: some guidance on detection approaches. arXiv preprint arXiv:2102.05897 (2021)

Caesar, H., et al.: nuScenes: a multimodal dataset for autonomous driving. arXiv preprint arXiv:1903.11027 (2019)

Cai, Z., Vasconcelos, N.: Cascade R-CNN: delving into high quality object detection. In: CVPR (2018)

Chen, K., Hong, L., Xu, H., Li, Z., Yeung, D.Y.: MultiSiam: self-supervised multi-instance Siamese representation learning for autonomous driving. In: ICCV (2021)

Chen, K., et al.: MMDetection: Open MMLab detection toolbox and benchmark. arXiv preprint arXiv:1906.07155 (2019)

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11211, pp. 833–851. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_49

Cordts, M., et al.: The Cityscapes dataset for semantic urban scene understanding. In: CVPR (2016)

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

Geiger, A., Lenz, P., Urtasun, R.: Are we ready for autonomous driving? The KITTI vision benchmark suite. In: CVPR (2012)

Gong, D., et al.: Memorizing normality to detect anomaly: memory-augmented deep autoencoder for unsupervised anomaly detection. In: ICCV (2019)

Han, J., et al.: SODA10M: a large-scale 2D self/semi-supervised object detection dataset for autonomous driving. arXiv preprint arXiv:2106.11118 (2021)

He, K., Fan, H., Wu, Y., Xie, S., Girshick, R.: Momentum contrast for unsupervised visual representation learning. In: CVPR (2020)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

Hendrycks, D., Basart, S., Mazeika, M., Mostajabi, M., Steinhardt, J., Song, D.: A benchmark for anomaly segmentation. arXiv preprint arXiv:1911.11132 (2019)

Jiang, C., Xu, H., Zhang, W., Liang, X., Li, Z.: SP-NAS: serial-to-parallel backbone search for object detection. In: CVPR (2020)

Joseph, K., Khan, S., Khan, F.S., Balasubramanian, V.N.: Towards open world object detection. In: CVPR (2021)

LeCun, Y., Chopra, S., Hadsell, R., Ranzato, M., Huang, F.: A tutorial on energy-based learning. Predicting Structured Data 1 (2006)

Lin, T.Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: CVPR (2017)

Lin, T.Y., Goyal, P., Girshick, R., He, K., Dollár, P.: Focal loss for dense object detection. In: ICCV (2017)

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

Lis, K., Nakka, K., Fua, P., Salzmann, M.: Detecting the unexpected via image resynthesis. In: ICCV (2019)

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Liu, Y.C., et al.: Unbiased teacher for semi-supervised object detection. In: ICLR (2021)

Liu, Z., et al.: Swin transformer: hierarchical vision transformer using shifted windows. In: ICCV (2021)

Liu, Z., et al.: Task-customized self-supervised pre-training with scalable dynamic routing. In: AAAI (2022)

Mao, J., et al.: One million scenes for autonomous driving: ONCE dataset. arXiv preprint arXiv:2106.11037 (2021)

Pinggera, P., Ramos, S., Gehrig, S., Franke, U., Rother, C., Mester, R.: Lost and found: detecting small road hazards for self-driving vehicles. In: IROS (2016)

Qiao, L., Zhao, Y., Li, Z., Qiu, X., Wu, J., Zhang, C.: DeFRCN: decoupled faster R-CNN for few-shot object detection. In: ICCV (2021)

Radford, A., et al.: Learning transferable visual models from natural language supervision. In: ICML (2021)

Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: unified, real-time object detection. In: CVPR (2016)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: NeurIPS (2015)

Reza, M.A., Naik, A.U., Chen, K., Crandall, D.J.: Automatic annotation for semantic segmentation in indoor scenes. In: IROS (2019)

Russell, B.C., Torralba, A., Murphy, K.P., Freeman, W.T.: LabelMe: a database and web-based tool for image annotation. IJCV 77(1–3), 157–173 (2008)

Sohn, K., Zhang, Z., Li, C.L., Zhang, H., Lee, C.Y., Pfister, T.: A simple semi-supervised learning framework for object detection. arXiv preprint arXiv:2005.04757 (2020)

Sun, P., et al.: Scalability in perception for autonomous driving: Waymo Open Dataset. In: CVPR (2020)

Sun, P., et al.: Sparse R-CNN: end-to-end object detection with learnable proposals. In: CVPR (2021)

Wada, K.: LabelMe: image polygonal annotation with Python. https://github.com/wkentaro/labelme (2016)

Wang, X., Huang, T., Gonzalez, J., Darrell, T., Yu, F.: Frustratingly simple few-shot object detection. In: ICML (2020)

Wu, Y., Kirillov, A., Massa, F., Lo, W.Y., Girshick, R.: Detectron2. https://github.com/facebookresearch/detectron2 (2019)

Xia, Y., Zhang, Y., Liu, F., Shen, W., Yuille, A.L.: Synthesize then compare: detecting failures and anomalies for semantic segmentation. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12346, pp. 145–161. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58452-8_9

Ye, N., et al.: OoD-bench: quantifying and understanding two dimensions of out-of-distribution generalization. In: CVPR (2022)

Yu, F., Chen, H., Wang, X., Xian, W., Chen, Y., Liu, F., Madhavan, V., Darrell, T.: BDD100K: a diverse driving dataset for heterogeneous multitask learning. In: CVPR (2020)

Zhou, X., et al.: Model agnostic sample reweighting for out-of-distribution learning. In: ICML (2022)

Zhou, X., Lin, Y., Zhang, W., Zhang, T.: Sparse invariant risk minimization. In: ICML (2022)

Zhu, X., Su, W., Lu, L., Li, B., Wang, X., Dai, J.: Deformable DETR: deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159 (2020)

Acknowledgement

We gratefully acknowledge the support of MindSpore, CANN (Compute Architecture for Neural Networks) and Ascend AI Processor used for this research.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Li, K. et al. (2022). CODA: A Real-World Road Corner Case Dataset for Object Detection in Autonomous Driving. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13698. Springer, Cham. https://doi.org/10.1007/978-3-031-19839-7_24

DOI: https://doi.org/10.1007/978-3-031-19839-7_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19838-0

Online ISBN: 978-3-031-19839-7

eBook Packages: Computer Science, Computer Science (R0)