Abstract

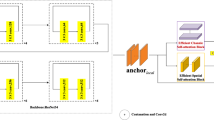

Lane detection requires sufficient global information because lane line features are simple and road scenes change frequently. In this paper, we propose a novel lane detection Transformer that takes multi-frame input and regresses lane parameters under a lane shape model. We design a Multi-frame Horizontal and Vertical Attention (MHVA) module to capture more global features, and use a Visual Transformer (VT) module to obtain “lane tokens” that carry interaction information among lane instances. Extensive experiments on two public datasets show that our model achieves state-of-the-art results on the VIL-100 dataset and comparable performance on the TuSimple dataset. In addition, our model runs at 46 fps on multi-frame data with few parameters, indicating that the proposed method is feasible and practical for real-time self-driving applications.
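The abstract does not describe the internals of the MHVA module. As a rough illustration only, the sketch below shows one plausible reading of "horizontal and vertical attention" over several frames: axial self-attention, where each feature position first attends along its row and then along its column. The frame-stacking scheme (concatenating T frames along the width axis so that row attention mixes information across frames), the identity query/key/value projections, and all function names are assumptions made for this sketch. It is not the authors' MHVA implementation.

```python
import math
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # indexed [row][col] -> channel vector


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(seq: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention over a 1-D sequence.

    Assumption: queries, keys and values are the raw features
    (identity projections); a real module would learn them.
    """
    c = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(c) for k in seq]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, seq)) for i in range(c)])
    return out


def horizontal_attention(fmap: FeatureMap) -> FeatureMap:
    """Each position attends to every position in its own row."""
    return [attend(row) for row in fmap]


def vertical_attention(fmap: FeatureMap) -> FeatureMap:
    """Each position attends to every position in its own column."""
    h, w = len(fmap), len(fmap[0])
    cols = [attend([fmap[r][c] for r in range(h)]) for c in range(w)]
    return [[cols[c][r] for c in range(w)] for r in range(h)]


def stack_frames(frames: List[FeatureMap]) -> FeatureMap:
    """Hypothetical fusion: concatenate T frames of equal size along width."""
    h = len(frames[0])
    return [[v for f in frames for v in f[r]] for r in range(h)]


def multi_frame_hv_attention(frames: List[FeatureMap]) -> FeatureMap:
    """Row attention across stacked frames, then column attention.

    Returns only the columns of the last (current) frame.
    """
    x = stack_frames(frames)
    x = horizontal_attention(x)
    x = vertical_attention(x)
    w = len(frames[-1][0])
    return [row[-w:] for row in x]


if __name__ == "__main__":
    # Two toy 2x2 feature maps with 2 channels each (previous, current frame).
    prev = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 0.0]]]
    curr = [[[0.0, 1.0], [1.0, 1.0]], [[0.2, 0.8], [0.0, 0.0]]]
    out = multi_frame_hv_attention([prev, curr])
    print(len(out), len(out[0]), len(out[0][0]))  # prints: 2 2 2
```

Factorizing attention into a row pass and a column pass lets every position receive information from its whole row and column (and, here, from the same rows of earlier frames) at a cost of roughly O(HW(H+W)) instead of O((HW)^2) for full 2-D self-attention, which is one reason axial designs suit real-time settings.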


Notes

1. For academic consistency, we use “lane” to denote a lane line in this paper.


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, H., Gu, Y., Wang, X., Pan, J., Wang, M. (2022). Lane Detection Transformer Based on Multi-frame Horizontal and Vertical Attention and Visual Transformer Module. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13699. Springer, Cham. https://doi.org/10.1007/978-3-031-19842-7_1


DOI: https://doi.org/10.1007/978-3-031-19842-7_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19841-0

Online ISBN: 978-3-031-19842-7

eBook Packages: Computer Science, Computer Science (R0)