Abstract

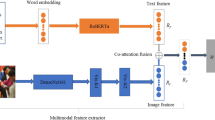

In recent years, the posting of offensive content on social media platforms has increased, and memes play a significant role in this trend. Memes are usually multimodal and can promulgate offensive views implicitly under the guise of humour. Because a meme's visual and textual information is often disparate, such content can easily evade surveillance systems. An automatic offensive meme detection system is therefore required to combat malign content and maintain harmony on social media. Past studies explored various multimodal fusion techniques to combine the visual and textual information of memes. However, they fail to learn the significant relationships within the multimodal content and perform poorly in offensive meme classification. This paper proposes an inter-modal attention framework that synergistically fuses visual and textual information by focusing on modality-specific features. The state-of-the-art pre-trained VGG19 and BERT models are employed to extract the visual and textual features, respectively. The proposed method is compared against several baseline models on a publicly available multimodal offensive dataset. The results demonstrate that the proposed approach outperforms all baseline models, achieving the highest weighted F1-score of 0.635.
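For readers unfamiliar with the reported metric, the following is a minimal sketch of how a weighted F1-score is commonly computed: the per-class F1 scores are averaged, each weighted by the number of true samples in that class. The function name `weighted_f1` and the toy labels are illustrative assumptions and are not taken from the paper's data or code.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Support-weighted average of per-class F1 scores."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one sample is required")

    support = Counter(y_true)  # number of true samples per class
    total = len(y_true)
    score = 0.0
    for label, n_true in support.items():
        # True positives: samples of this class predicted as this class.
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        # Number of samples predicted as this class.
        n_pred = sum(1 for p in y_pred if p == label)
        precision = tp / n_pred if n_pred else 0.0
        recall = tp / n_true
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        # Weight each class's F1 by its share of the true labels.
        score += (n_true / total) * f1
    return score


# Hypothetical labels (not from the paper): 1 = offensive, 0 = non-offensive.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
print(round(weighted_f1(y_true, y_pred), 3))  # prints 0.75
```

Weighting by class support keeps the score from being dominated by how the classifier treats a rare class, which matters when offensive and non-offensive memes are unevenly represented.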

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Hossain, E., Hoque, M.M., Hossain, M.A. (2023). An Inter-modal Attention Framework for Multimodal Offense Detection. In: Vasant, P., Weber, GW., Marmolejo-Saucedo, J.A., Munapo, E., Thomas, J.J. (eds) Intelligent Computing & Optimization. ICO 2022. Lecture Notes in Networks and Systems, vol 569. Springer, Cham. https://doi.org/10.1007/978-3-031-19958-5_81

DOI: https://doi.org/10.1007/978-3-031-19958-5_81

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19957-8

Online ISBN: 978-3-031-19958-5

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)