Abstract

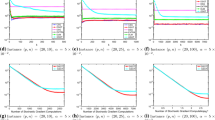

This paper addresses distributed optimization problems over digraphs, in which multiple agents cooperatively minimize the finite sum of their local objective functions through local communication. To improve computational efficiency, we propose a novel algorithm, SSGT-PP, which combines the Snapshot Gradient Tracking technique with the Push-Pull method. In SSGT-PP, each agent computes its full local gradient only intermittently, as triggered by a random variable, which reduces the frequency of full-gradient computations. As a result, the proposed algorithm saves computing resources, especially in large-scale distributed optimization problems. We show theoretically that SSGT-PP achieves a linear convergence rate when the objective functions are strongly convex. Finally, numerical experiments demonstrate the effectiveness of SSGT-PP.
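The abstract does not state the update equations. The following is therefore only a minimal sketch of the idea under stated assumptions, not the authors' SSGT-PP recursion. It pairs the standard Push-Pull updates, x ← R(x − αy) and y ← Cy + g_new − g, with a loopless SVRG-style snapshot estimator. Each agent draws one sample gradient per iteration. With probability p it refreshes its snapshot and recomputes its full local gradient. The graph, the ridge-regression data, the step size `alpha`, the probability `p`, the per-agent (rather than shared) coin flip, and all function names are hypothetical.

```python
import numpy as np


def build_digraph_weights(n):
    """Weights for a hypothetical strongly connected digraph on n >= 3 agents:
    a directed ring j -> j+1 plus one extra edge 0 -> 2, with self-loops.
    A[i, j] = 1 means agent i receives from agent j."""
    A = np.eye(n)
    for j in range(n):
        A[(j + 1) % n, j] = 1.0
    A[2, 0] = 1.0
    R = A / A.sum(axis=1, keepdims=True)  # row-stochastic: used to pull estimates
    C = A / A.sum(axis=0, keepdims=True)  # column-stochastic: used to push trackers
    return R, C


def make_problem(n=5, m=10, d=3, lam=0.5, seed=0):
    """Synthetic ridge regression: agent i holds m samples (feats[i, j], targets[i, j])."""
    rng = np.random.default_rng(seed)
    feats = rng.normal(size=(n, m, d)) / np.sqrt(d)
    targets = feats @ rng.normal(size=d) + 0.1 * rng.normal(size=(n, m))
    return feats, targets, lam


def global_minimizer(feats, targets, lam):
    """Closed-form minimizer of sum_i f_i, where f_i averages agent i's sample losses."""
    n, m, d = feats.shape
    F, b = feats.reshape(n * m, d), targets.reshape(n * m)
    return np.linalg.solve(F.T @ F / m + n * lam * np.eye(d), F.T @ b / m)


def snapshot_push_pull(feats, targets, lam, R, C, alpha=0.05, p=0.1, iters=5000, seed=1):
    """Hypothetical Push-Pull iteration with randomly refreshed snapshot gradients."""
    rng = np.random.default_rng(seed)
    n, m, d = feats.shape

    def sample_grad(i, j, x):  # gradient of 0.5*(a.x - b)^2 + 0.5*lam*|x|^2
        a = feats[i, j]
        return a * (a @ x - targets[i, j]) + lam * x

    def full_grad(i, x):  # full local gradient: average over agent i's m samples
        return feats[i].T @ (feats[i] @ x - targets[i]) / m + lam * x

    x = np.zeros((n, d))          # row i: agent i's estimate
    w = x.copy()                  # row i: agent i's snapshot point
    mu = np.array([full_grad(i, w[i]) for i in range(n)])  # full gradients at snapshots
    g = mu.copy()                 # gradient estimates at x (exact at k = 0 since x = w)
    y = g.copy()                  # gradient trackers, initialised as y_0 = g_0
    for _ in range(iters):
        x = R @ (x - alpha * y)   # pull: mix descent steps with in-neighbours
        g_new = np.empty_like(g)
        for i in range(n):
            j = rng.integers(m)   # one sample gradient per agent per iteration
            g_new[i] = sample_grad(i, j, x[i]) - sample_grad(i, j, w[i]) + mu[i]
            if rng.random() < p:  # random trigger: refresh snapshot, recompute full gradient
                w[i] = x[i]
                mu[i] = full_grad(i, w[i])
        y = C @ y + g_new - g     # push: track the sum of gradient estimates
        g = g_new
    return x


if __name__ == "__main__":
    R, C = build_digraph_weights(5)
    feats, targets, lam = make_problem()
    x = snapshot_push_pull(feats, targets, lam, R, C)
    print("max error:", np.max(np.abs(x - global_minimizer(feats, targets, lam))))
```

In this sketch, each agent evaluates two sample gradients per iteration. It evaluates a full local gradient only p times per iteration in expectation. With p = 1/m, that works out to roughly one full-gradient pass every m iterations. This illustrates where the computational savings described above would come from.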

This work was supported in part by the National Natural Science Foundation of China under Grant 62176056 and in part by the Young Elite Scientists Sponsorship Program by CAST (2021QNRC001).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Che, K., Yang, S. (2022). A Snapshot Gradient Tracking for Distributed Optimization over Digraphs. In: Fang, L., Povey, D., Zhai, G., Mei, T., Wang, R. (eds) Artificial Intelligence. CICAI 2022. Lecture Notes in Computer Science, vol 13606. Springer, Cham. https://doi.org/10.1007/978-3-031-20503-3_28

DOI: https://doi.org/10.1007/978-3-031-20503-3_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20502-6

Online ISBN: 978-3-031-20503-3

eBook Packages: Computer Science, Computer Science (R0)