Abstract

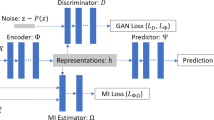

Estimating the average treatment effect (ATE) from observational data is challenging due to selection bias. Existing works mainly tackle this challenge in two ways. Some researchers propose constructing a score function that satisfies the orthogonal condition, which guarantees that the resulting ATE estimator is “orthogonal” and therefore more robust. Others explore representation learning models to achieve a balanced representation between the treated and control groups. However, existing studies fail to 1) discriminate treated units from control units in the representation space to avoid the over-balancing issue; and 2) fully utilize the “orthogonality information”. In this paper, we propose a moderately-balanced representation learning (MBRL) framework based on recent covariate-balanced representation learning methods and orthogonal machine learning theory. This framework protects the representation from being over-balanced via multi-task learning. Simultaneously, MBRL incorporates the noise orthogonality information in the training and validation stages to achieve better ATE estimation. Comprehensive experiments on benchmark and simulated datasets show the superiority and robustness of our method on treatment effect estimation compared with existing state-of-the-art methods.
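To make the notion of an orthogonal score concrete, the following is a minimal sketch of the standard doubly robust (AIPW) score from the double/debiased machine learning literature, applied to synthetic data. It is not the MBRL method itself. The data-generating process, the helper names (`aipw_ate`, `fit_linear`, `fit_logistic`), and the choice of plain linear and logistic nuisance models are all assumptions made for illustration, and cross-fitting is omitted for brevity.

```python
import numpy as np

def aipw_ate(y, t, mu0, mu1, e):
    """Average of the doubly robust (AIPW) score; an illustrative orthogonal score."""
    e = np.clip(e, 1e-3, 1 - 1e-3)  # guard against extreme propensities
    psi = (mu1 - mu0
           + t * (y - mu1) / e
           - (1 - t) * (y - mu0) / (1 - e))
    return psi.mean()

def fit_linear(x, y):
    """Least-squares outcome model; returns a prediction function."""
    X = np.column_stack([np.ones(len(x)), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return lambda z: np.column_stack([np.ones(len(z)), z]) @ beta

def fit_logistic(x, t, steps=500, lr=0.5):
    """Logistic propensity model fitted by plain gradient descent."""
    X = np.column_stack([np.ones(len(x)), x])
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        p = 1 / (1 + np.exp(-X @ w))
        w -= lr * X.T @ (p - t) / len(t)
    return lambda z: 1 / (1 + np.exp(-np.column_stack([np.ones(len(z)), z]) @ w))

# Synthetic observational data (hypothetical): treatment assignment depends
# on the covariates, which induces selection bias in the naive comparison.
rng = np.random.default_rng(0)
n = 5000
x = rng.normal(size=(n, 2))
true_e = 1 / (1 + np.exp(-(x[:, 0] - 0.5 * x[:, 1])))
t = rng.binomial(1, true_e)
true_ate = 2.0
y = x[:, 0] + x[:, 1] + true_ate * t + rng.normal(size=n)

# Nuisance estimates: one outcome model per arm, plus the propensity score.
mu0 = fit_linear(x[t == 0], y[t == 0])(x)
mu1 = fit_linear(x[t == 1], y[t == 1])(x)
e_hat = fit_logistic(x, t)(x)

naive = y[t == 1].mean() - y[t == 0].mean()   # ignores selection bias
ate_hat = aipw_ate(y, t, mu0, mu1, e_hat)     # orthogonal-score estimate
print(f"naive difference in means: {naive:.3f}")
print(f"AIPW estimate:             {ate_hat:.3f} (true ATE = {true_ate})")
```

In this sketch the score combines the outcome models with propensity-weighted residuals, so small errors in either nuisance estimate have only a second-order effect on the ATE estimate. This is the sense in which such an estimator is described as orthogonal.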

Y. Huang and C. H. Leung are co-first authors, listed in alphabetical order.

Acknowledgement

Qi Wu acknowledges the support from the Hong Kong Research Grants Council [General Research Fund 14206117, 11219420, and 11200219], CityU SRG-Fd fund 7005300, and the support from the CityU-JD Digits Laboratory in Financial Technology and Engineering, HK Institute of Data Science. The work described in this paper was partially supported by the InnoHK initiative, The Government of the HKSAR, and the Laboratory for AI-Powered Financial Technologies.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Huang, Y., Leung, C.H., Ma, S., Wu, Q., Wang, D., Huang, Z. (2022). Moderately-Balanced Representation Learning for Treatment Effects with Orthogonality Information. In: Khanna, S., Cao, J., Bai, Q., Xu, G. (eds) PRICAI 2022: Trends in Artificial Intelligence. PRICAI 2022. Lecture Notes in Computer Science, vol 13630. Springer, Cham. https://doi.org/10.1007/978-3-031-20865-2_1

DOI: https://doi.org/10.1007/978-3-031-20865-2_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20864-5

Online ISBN: 978-3-031-20865-2

eBook Packages: Computer Science, Computer Science (R0)