Abstract

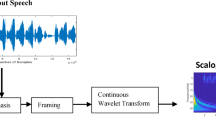

Dysarthria is a neuro-motor speech disorder that impairs speech intelligibility and can be hard for human listeners to notice at some severity levels. Dysarthric speech classification serves as a diagnostic tool for tracking the progression of a patient's condition, and it supports automatic dysarthric speech recognition, an important assistive speech technology. This study investigates Generalized Morse Wavelet (GMW)-based scalogram features, used with a Convolutional Neural Network (CNN), for capturing the discriminative low-frequency acoustic cues needed for dysarthric severity-level classification. The scalogram-based features are compared with Short-Time Fourier Transform (STFT)-based features and Mel spectrogram-based features. Whereas the STFT-based baseline features reach a classification accuracy of \(91.76\%\), the proposed Continuous Wavelet Transform (CWT)-based scalogram features achieve \(95.17\%\) on the standard and statistically meaningful UA-Speech corpus. This improvement indicates that the information in the low-frequency regions is more discriminative for dysarthric severity-level classification, because the proposed CWT-based time-frequency representation (scalogram) has high frequency resolution at low frequencies. STFT-based representations, in contrast, have constant resolution across all frequency bands and are therefore not as well suited to this task as the proposed Morse wavelet-based CWT features. Experiments with the Mel spectrogram, which also has high frequency resolution at low frequencies, yield a classification accuracy of \(92.65\%\), showing that the proposed system remains better suited.
The proposed system thus improves classification accuracy by \(3.41\%\) and \(2.52\%\) (absolute) over the STFT and Mel spectrogram features, respectively. The performance of the STFT, Mel spectrogram, and scalogram features is further analyzed using the F1-score, Matthews Correlation Coefficient (MCC), Jaccard index, Hamming loss, and Linear Discriminant Analysis (LDA) scatter plots.
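The evaluation measures named above can be illustrated with a minimal Python sketch. It assumes single-label predictions over a handful of severity classes; the function name `severity_metrics`, the macro averaging, and the toy labels are illustrative assumptions, not the authors' evaluation code or data. The MCC is computed with the standard multiclass generalisation, and in the single-label case the Hamming loss reduces to one minus the accuracy.

```python
from collections import Counter
from math import sqrt


def severity_metrics(y_true, y_pred):
    """Accuracy, macro F1, multiclass MCC, macro Jaccard index and Hamming loss
    for single-label predictions (hypothetical helper, for illustration only)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    n = len(y_true)
    labels = sorted(set(y_true) | set(y_pred))
    true_count = Counter(y_true)  # how often each class truly occurs
    pred_count = Counter(y_pred)  # how often each class is predicted
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    correct = sum(tp.values())

    f1_scores, jaccards = [], []
    for k in labels:
        fp = pred_count[k] - tp[k]
        fn = true_count[k] - tp[k]
        # Every label occurs in y_true or y_pred, so both denominators are >= 1.
        f1_scores.append(2 * tp[k] / (2 * tp[k] + fp + fn))
        jaccards.append(tp[k] / (tp[k] + fp + fn))

    # Multiclass Matthews correlation coefficient from the class counts.
    num = correct * n - sum(pred_count[k] * true_count[k] for k in labels)
    den = sqrt((n * n - sum(pred_count[k] ** 2 for k in labels))
               * (n * n - sum(true_count[k] ** 2 for k in labels)))
    accuracy = correct / n
    return {
        "accuracy": accuracy,
        "macro_f1": sum(f1_scores) / len(labels),
        "mcc": num / den if den else 0.0,
        "macro_jaccard": sum(jaccards) / len(labels),
        "hamming_loss": 1.0 - accuracy,  # single-label case only
    }


# Toy example with four hypothetical severity classes coded 0..3.
toy_true = [0, 0, 1, 1, 2, 2, 3, 3]
toy_pred = [0, 0, 1, 2, 2, 2, 3, 0]
for name, value in severity_metrics(toy_true, toy_pred).items():
    print(f"{name}: {value:.4f}")
```

Reporting MCC and the Jaccard index alongside accuracy is useful because both penalise systematic confusion between particular classes, which a single accuracy figure can hide.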

Appendix

A.1. Energy Conservation in STFT

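The energy conservation of the STFT for any signal \(f(t)\in L^2(R)\) is given by

\[
\int_{-\infty}^{+\infty}|f(t)|^2\,dt=\frac{1}{2\pi}\int_{-\infty}^{+\infty}\int_{-\infty}^{+\infty}|Sf(u,\zeta)|^2\,d\zeta\,du. \tag{16}
\]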

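Here, u and \(\zeta \) denote the time and frequency indices, which vary across R and hence cover the entire time-frequency plane. The signal can then be reconstructed as

\[
f(t)=\frac{1}{2\pi}\int_{-\infty}^{+\infty}\int_{-\infty}^{+\infty}Sf(u,\zeta)\,g(t-u)\,e^{i\zeta t}\,d\zeta\,du. \tag{17}
\]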

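To apply Parseval's formula to Eq. (17) with respect to the integration in u, note that for a real, symmetric window g the STFT can be written as a convolution:

\[
Sf(u,\zeta)=e^{-iu\zeta}\int_{-\infty}^{+\infty}f(t)\,g(t-u)\,e^{i\zeta (u-t)}\,dt=e^{-iu\zeta}\,(f\star g_{\zeta})(u).
\]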

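Here, \(g_{\zeta }(t)=g(t)e^{i\zeta t}\). Hence, the Fourier transform of \(Sf(u,\zeta )\) with respect to u is \(\hat{f}(\omega +\zeta )\hat{g}(\omega )\). Furthermore, applying Plancherel's formula in u to the right-hand side of Eq. (16) gives

\[
\frac{1}{2\pi}\int_{-\infty}^{+\infty}\int_{-\infty}^{+\infty}|Sf(u,\zeta)|^2\,du\,d\zeta=\frac{1}{2\pi}\int_{-\infty}^{+\infty}\left(\frac{1}{2\pi}\int_{-\infty}^{+\infty}|\hat{f}(\omega+\zeta)\,\hat{g}(\omega)|^2\,d\omega\right)d\zeta.
\]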

Finally, for a window normalized so that \(||g||=1\), the Plancherel formula and the Fubini theorem give \(\frac{1}{2\pi }\int _{-\infty }^{+\infty }|\hat{f}(\omega +\zeta )|^2 d\zeta =||f||^2\), which validates the STFT's energy conservation stated in Eq. (16). This explains why the overall signal energy equals the energy of the STFT summed over the time-frequency plane.
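The energy conservation of the STFT can also be checked numerically on a discrete analogue. The sketch below is an illustration under stated assumptions, not part of the original derivation: it uses a circular (periodic) STFT with a hop of one sample and all N frequency bins, a symmetric unit-norm window, and a naive DFT to stay dependency-free. In this discrete setting the factor \(\frac{1}{2\pi }\) is replaced by \(\frac{1}{N}\). All function names are hypothetical.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(N^2) discrete Fourier transform (an FFT would be used in practice)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def unit_norm_window(n, half_width):
    """Symmetric raised-cosine window centred (circularly) on index 0, with ||g|| = 1."""
    g = [0.0] * n
    for m in range(-half_width, half_width + 1):
        g[m % n] = 0.5 * (1.0 + math.cos(math.pi * m / (half_width + 1)))
    norm = math.sqrt(sum(v * v for v in g))
    return [v / norm for v in g]


def stft(f, g):
    """Circular STFT: S[u][k] = sum_t f[t] * g[(t - u) mod N] * exp(-2*pi*i*k*t/N)."""
    n = len(f)
    return [dft([f[t] * g[(t - u) % n] for t in range(n)]) for u in range(n)]


def energies(f, g):
    """Return (signal energy, time-frequency energy of the STFT divided by N)."""
    n = len(f)
    signal_energy = sum(v * v for v in f)
    tf_energy = sum(abs(c) ** 2 for row in stft(f, g) for c in row) / n
    return signal_energy, tf_energy


random.seed(0)
signal = [random.gauss(0.0, 1.0) for _ in range(32)]
window = unit_norm_window(len(signal), half_width=4)
signal_energy, tf_energy = energies(signal, window)
print(signal_energy, tf_energy)  # the two values agree up to rounding error
```

The agreement follows the same two steps as the proof: Parseval's relation applied to each windowed frame, followed by summation over all window positions, which contributes the factor \(||g||^2=1\).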

A.2. Energy Conservation in CWT

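Using the same derivation as in the discussion of Eq. (17), one can verify that the inverse wavelet formula reconstructs the analytic part \(f_a\) of f:

\[
f_a(t)=\frac{1}{C_{\psi }}\int_{0}^{+\infty}\int_{-\infty}^{+\infty}Wf_a(u,s)\,\psi_s(t-u)\,du\,\frac{ds}{s^2},
\]

where \(C_{\psi }\) is the admissibility constant of the wavelet and \(\psi_s(t)=\frac{1}{\sqrt{s}}\psi \left(\frac{t}{s}\right)\).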

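Applying the Plancherel formula to the analytic part \(f_a\) gives the energy conservation

\[
\int_{-\infty}^{+\infty}|f_a(t)|^2\,dt=\frac{1}{C_{\psi }}\int_{0}^{+\infty}\int_{-\infty}^{+\infty}|Wf_a(u,s)|^2\,du\,\frac{ds}{s^2}.
\]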

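Since \(Wf_a(u,s) = 2Wf(u,s)\) and \(||f_a||^2 = 2||f||^2\), the energy conservation for f follows:

\[
||f||^2=\frac{2}{C_{\psi }}\int_{0}^{+\infty}\int_{-\infty}^{+\infty}|Wf(u,s)|^2\,du\,\frac{ds}{s^2}.
\]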

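If f is real, the change of variable \(\zeta = \frac{1}{s}\) in the energy conservation shows that

\[
||f||^2=\frac{2}{C_{\psi }}\int_{0}^{+\infty}\int_{-\infty}^{+\infty}\left|Wf\left(u,\tfrac{1}{\zeta }\right)\right|^2\,du\,d\zeta .
\]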

It reinforces the notion that a scalogram represents a time-frequency energy density.
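To make the scalogram concrete, the following Python sketch computes \(|Wf(u,s)|^2\) with a generalized Morse wavelet defined in the frequency domain, \(\hat{\psi }(\omega )=a\,\omega ^{\beta }e^{-\omega ^{\gamma }}\) for \(\omega >0\) and zero otherwise, with \(a=2(e\gamma /\beta )^{\beta /\gamma }\). Several choices here are assumptions made for illustration and are not taken from this paper: the parameters \(\beta =20\) and \(\gamma =3\), the amplitude normalisation (which differs from the \(\frac{1}{\sqrt{s}}\) normalisation by a scale-dependent factor), the naive DFT, and the helper names `morse_freq`, `morse_peak`, and `scalogram`.

```python
import cmath
import math


def morse_freq(w, beta=20.0, gamma=3.0):
    """Generalized Morse wavelet in the frequency domain; zero for w <= 0 (analytic)."""
    if w <= 0.0:
        return 0.0
    # a = 2 * (e * gamma / beta) ** (beta / gamma), evaluated in the log domain.
    log_a = math.log(2.0) + (beta / gamma) * (1.0 + math.log(gamma / beta))
    return math.exp(log_a + beta * math.log(w) - w ** gamma)


def morse_peak(beta=20.0, gamma=3.0):
    """Frequency (rad/sample) at which the unscaled wavelet spectrum peaks."""
    return (beta / gamma) ** (1.0 / gamma)


def scalogram(x, center_bins, beta=20.0, gamma=3.0):
    """Return |W(u, s)|^2 as one row per scale.

    Each scale is chosen so that the wavelet's peak frequency falls on the
    DFT bin listed in center_bins. The transform is evaluated in the
    frequency domain using positive frequencies below Nyquist only.
    """
    n = len(x)
    bins = range(1, n // 2)
    spectrum = {k: sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in bins}
    rows = []
    for kc in center_bins:
        s = morse_peak(beta, gamma) / (2.0 * math.pi * kc / n)
        weighted = {k: spectrum[k] * morse_freq(s * 2.0 * math.pi * k / n, beta, gamma)
                    for k in bins}
        row = []
        for u in range(n):
            w = sum(weighted[k] * cmath.exp(2j * math.pi * k * u / n) for k in bins) / n
            row.append(abs(w) ** 2)
        rows.append(row)
    return rows


# A pure tone at DFT bin 8: its energy should concentrate at the matching scale.
n = 128
tone = [math.cos(2.0 * math.pi * 8 * t / n) for t in range(n)]
center_bins = [4, 8, 16, 32]
for kc, row in zip(center_bins, scalogram(tone, center_bins)):
    print(kc, sum(row) / len(row))
```

With this normalisation the wavelet spectrum peaks at the value 2, so a unit-amplitude cosine yields a scalogram value of 1 at the matching scale and much smaller values at the other scales, which is the energy-density reading of the scalogram described above.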

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Kachhi, A., Therattil, A., Gupta, P., Patil, H.A. (2022). Continuous Wavelet Transform for Severity-Level Classification of Dysarthria. In: Prasanna, S.R.M., Karpov, A., Samudravijaya, K., Agrawal, S.S. (eds) Speech and Computer. SPECOM 2022. Lecture Notes in Computer Science, vol 13721. Springer, Cham. https://doi.org/10.1007/978-3-031-20980-2_27

DOI: https://doi.org/10.1007/978-3-031-20980-2_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20979-6

Online ISBN: 978-3-031-20980-2

eBook Packages: Computer Science, Computer Science (R0)