Abstract

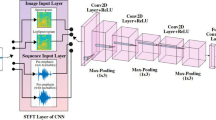

Dysarthria is a neuro-motor speech disorder that affects speech intelligibility; depending on its severity level, it is often imperceptible. The progression of a patient's dysarthric severity level is diagnosed using a classification system, which also aids automatic dysarthric speech recognition (an important assistive speech technology). This study investigates the presence of linear vs. non-linear components in dysarthric speech for severity-level classification using Squared Energy Cepstral Coefficients (SECC) and Teager Energy Cepstral Coefficients (TECC), which capture the linear and non-linear production features of the speech signal, respectively. TECC and SECC are compared with respect to the baseline STFT and MFCC features using three deep learning architectures as pattern classifiers, namely, a Convolutional Neural Network (CNN), a Light-CNN (LCNN), and a Residual Neural Network (ResNet). On the CNN (LCNN/ResNet) classifier systems, SECC improved the classification accuracy by \(6.28\%\) (\(7.89\%\)/\(3.60\%\)) over the baseline STFT system, by \(1.7\%\) (\(4.23\%\)/\(0.99\%\)) over MFCC, and by \(0.1.41\%\) (\(0.56\%\)/\(0.28\%\)) over TECC. Finally, the discrimination power of the features is analyzed using Linear Discriminant Analysis (LDA), the Jaccard index, Matthews Correlation Coefficient (MCC), F1-score, and Hamming loss, followed by an analysis of the latency period to investigate the practical significance of the proposed approach.
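
To make the linear vs. non-linear distinction concrete, the sketch below contrasts the two energy operators behind SECC and TECC: the squared energy \(x^2(n)\) and the discrete Teager energy operator \(\Psi[x(n)] = x^2(n) - x(n-1)\,x(n+1)\). It is a minimal pure-Python illustration, not the authors' implementation. The `energy_cepstrum` helper is hypothetical: it assumes the frame has already been split into subbands by some filterbank, and it applies per-band averaging, a logarithm, and a DCT-II as in the usual cepstral recipe; the paper's actual filterbank and parameters are not reproduced here.

```python
import math
from typing import Callable, List, Sequence


def squared_energy(x: Sequence[float]) -> List[float]:
    """Squared energy x(n)^2: depends only on the current sample value."""
    return [v * v for v in x]


def teager_energy(x: Sequence[float]) -> List[float]:
    """Discrete Teager energy operator (Kaiser, ICASSP 1990):
    Psi[x(n)] = x(n)^2 - x(n-1) * x(n+1), defined for n = 1 .. N-2,
    so the output is two samples shorter than the input.
    """
    return [x[n] * x[n] - x[n - 1] * x[n + 1] for n in range(1, len(x) - 1)]


def dct_ii(v: Sequence[float]) -> List[float]:
    """Unnormalized DCT-II: c_k = sum_n v_n * cos(pi * k * (2n + 1) / (2N))."""
    N = len(v)
    return [
        sum(v[n] * math.cos(math.pi * k * (2 * n + 1) / (2 * N)) for n in range(N))
        for k in range(N)
    ]


def energy_cepstrum(
    band_signals: Sequence[Sequence[float]],
    operator: Callable[[Sequence[float]], List[float]],
    n_coeffs: int,
) -> List[float]:
    """HYPOTHETICAL per-frame recipe (not the paper's exact configuration):
    one already band-passed frame per subband -> mean energy -> log -> DCT-II.
    """
    log_energies = []
    for band in band_signals:
        e = operator(band)
        mean_e = sum(e) / len(e)
        # Teager energy can be negative for wideband signals; taking abs() and
        # adding a small floor before the log is an assumption of this sketch.
        log_energies.append(math.log(abs(mean_e) + 1e-10))
    return dct_ii(log_energies)[:n_coeffs]


# Demo: for x(n) = A*cos(omega*n + phi), Psi[x(n)] = A^2 * sin^2(omega) is constant,
# so it reflects both amplitude and frequency, while x(n)^2 oscillates with the phase.
A, omega, phi = 2.0, 0.3, 0.5
x = [A * math.cos(omega * n + phi) for n in range(200)]
te = teager_energy(x)
se = squared_energy(x)
print(f"Teager energy:  min={min(te):.4f}, max={max(te):.4f}")  # both 0.3493
print(f"Squared energy: min={min(se):.4f}, max={max(se):.4f}")
```

The point of contrast is that \(\Psi\) looks at three neighbouring samples rather than one, which is what lets it respond to frequency as well as amplitude. In a full SECC/TECC front end, either operator would be applied inside each subband before the log/DCT stage; the band split itself is left out of this sketch.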

This work was done when Dr. Hardik B. Sailor was at Samsung Research Institute, Bangalore, India in collaboration with DA-IICT, Gandhinagar.

Acknowledgments

The authors sincerely thank the PRISM team at Samsung R&D Institute, Bangalore (SRI-B), India. We would also like to thank the authorities of DA-IICT Gandhinagar for their kind support and cooperation in carrying out this research work.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Kachhi, A., Therattil, A., Patil, A.T., Sailor, H.B., Patil, H.A. (2022). Significance of Energy Features for Severity Classification of Dysarthria. In: Prasanna, S.R.M., Karpov, A., Samudravijaya, K., Agrawal, S.S. (eds) Speech and Computer. SPECOM 2022. Lecture Notes in Computer Science, vol 13721. Springer, Cham. https://doi.org/10.1007/978-3-031-20980-2_28

DOI: https://doi.org/10.1007/978-3-031-20980-2_28

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20979-6

Online ISBN: 978-3-031-20980-2

eBook Packages: Computer Science, Computer Science (R0)