Abstract

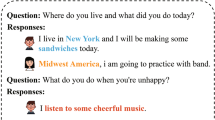

Interactive assistants of many kinds are currently in high demand. A critical problem in this field is the personalization of dialogue assistants, which aims to increase user loyalty and engagement in conversation and can give the enterprises that deploy them a competitive advantage. This paper presents a study of retrieval models for a personalized dialogue agent. The models are trained on the Persona Chat and Toloka Persona Chat Rus datasets. The study identifies the most effective retrieval models and training strategies. In addition, to address one of the major limitations of personalizing dialogue assistants, namely the lack of large datasets of dialogues that contain personal characteristics, a text data augmentation method was developed that preserves individual speech patterns and vocabulary.

Acknowledgments

The research was financially supported by the Russian Science Foundation (project 22-11-00128).

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Posokhov, P., Matveeva, A., Makhnytkina, O., Matveev, A., Matveev, Y. (2022). Personalizing Retrieval-Based Dialogue Agents. In: Prasanna, S.R.M., Karpov, A., Samudravijaya, K., Agrawal, S.S. (eds) Speech and Computer. SPECOM 2022. Lecture Notes in Computer Science, vol 13721. Springer, Cham. https://doi.org/10.1007/978-3-031-20980-2_47

DOI: https://doi.org/10.1007/978-3-031-20980-2_47

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20979-6

Online ISBN: 978-3-031-20980-2

eBook Packages: Computer Science, Computer Science (R0)