Abstract

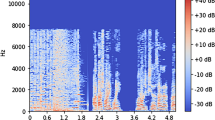

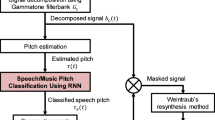

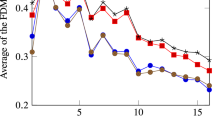

Detecting speech-music overlap in audio signals is an essential preprocessing step for many high-level audio processing applications. Speech and music spectrograms exhibit characteristic harmonic striations that can serve as a cue for detecting their overlap. This work therefore proposes two features, both generated with a spectral peak tracking algorithm, to capture the prominent harmonic patterns in spectrograms. The first feature consists of the spectral peak amplitude evolutions within an audio interval. The second is a Mel-scaled spectrogram obtained after suppressing the non-peak spectral components. In addition, a one-dimensional convolutional neural network architecture is proposed to learn the temporal evolution of the spectral peaks. The Mel-spectrogram serves as the baseline feature for performance comparison. Experiments are performed on MUSAN, a popular public dataset containing 102 h of data. A late fusion of the proposed features with the baseline is observed to improve performance.
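
The two peak-based features and the late fusion step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not specify the peak tracking algorithm, so simple per-frame local-maximum picking is assumed here, and the function names, frame length, hop size, number of peaks, and score averaging as the fusion rule are all hypothetical choices. The Mel filterbank that would be applied to the peak-only spectrogram is omitted.

```python
import numpy as np

def stft_magnitude(signal, frame_len=512, hop=256):
    """Magnitude spectrogram of shape (n_frames, frame_len // 2 + 1)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window
                       for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))

def top_peaks(frame, n_peaks=5):
    """Bin indices of the n_peaks strongest local maxima, in frequency order."""
    interior = frame[1:-1]
    is_peak = (interior > frame[:-2]) & (interior >= frame[2:])
    idx = np.flatnonzero(is_peak) + 1
    strongest = idx[np.argsort(frame[idx])[::-1][:n_peaks]]
    return np.sort(strongest)

def peak_features(spec, n_peaks=5):
    """Return (peak amplitude evolutions, peak-only spectrogram).

    Assumption: peaks are picked independently per frame; the paper's
    tracking algorithm may link peaks across frames differently.
    """
    amps = np.zeros((spec.shape[0], n_peaks))
    peak_only = np.zeros_like(spec)
    for t, frame in enumerate(spec):
        idx = top_peaks(frame, n_peaks)
        amps[t, :len(idx)] = frame[idx]   # amplitudes of the kept peaks
        peak_only[t, idx] = frame[idx]    # every non-peak bin stays zero
    return amps, peak_only

def late_fusion(score_list):
    """Average per-class scores from several classifiers (assumed rule)."""
    return np.mean(np.stack(score_list), axis=0)

# Usage: a 1 kHz tone sampled at 16 kHz falls exactly on bin 32.
sr = 16000
tone = np.sin(2 * np.pi * 1000 * np.arange(sr) / sr)
spec = stft_magnitude(tone)
amps, peak_only = peak_features(spec)
fused = late_fusion([np.array([0.2, 0.8]), np.array([0.4, 0.6])])
```

In this sketch `amps` plays the role of the first feature (one amplitude track per retained peak over time, suitable as input to a one-dimensional convolution along the time axis), while `peak_only` is the spectrogram with non-peak components suppressed, before any Mel scaling.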

Acknowledgments

Supported by Visvesvaraya PhD Scheme, MeitY, Govt. of India - MEITY-PHD-1230.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Bhattacharjee, M., Prasanna, S.R.M., Guha, P. (2022). Speech Music Overlap Detection Using Spectral Peak Evolutions. In: Prasanna, S.R.M., Karpov, A., Samudravijaya, K., Agrawal, S.S. (eds) Speech and Computer. SPECOM 2022. Lecture Notes in Computer Science, vol 13721. Springer, Cham. https://doi.org/10.1007/978-3-031-20980-2_8

DOI: https://doi.org/10.1007/978-3-031-20980-2_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20979-6

Online ISBN: 978-3-031-20980-2

eBook Packages: Computer Science (R0)