Abstract

This work proposes a human motion prediction model for handover operations. The model uses a multi-head attention architecture to process human skeleton data together with contextual data from the operation. This contextual data consists of the position of the robot's End Effector (REE). The model takes a 5 s sequence of skeleton positions as input and outputs the predicted positions for the following 2.5 s. We report results for the human upper body and for the human right hand, or Human End Effector (HEE).
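The input/output layout described above can be illustrated with a minimal sketch. It only shows how a recording might be sliced into 5 s input windows (skeleton plus REE context) and 2.5 s targets; it is not the authors' pipeline. The frame rate `FPS`, the function name `make_windows`, and the choice to concatenate the REE position to the flattened joints are all assumptions made for illustration, since the abstract does not specify them.

```python
import numpy as np

FPS = 10              # assumed frame rate; not stated in the abstract
INPUT_SECONDS = 5.0   # observed history, from the abstract
OUTPUT_SECONDS = 2.5  # prediction horizon, from the abstract


def make_windows(skeleton, ree, fps=FPS):
    """Slice one recording into (input, target) pairs.

    skeleton: (T, J, 3) array of 3D joint positions.
    ree:      (T, 3) array of robot end effector positions (context).
    Returns inputs of shape (N, n_in, J*3 + 3) and targets of shape
    (N, n_out, J, 3).
    """
    n_in = int(round(INPUT_SECONDS * fps))
    n_out = int(round(OUTPUT_SECONDS * fps))
    T, J, _ = skeleton.shape

    # Flatten the joints per frame and append the REE position as context.
    features = np.concatenate([skeleton.reshape(T, J * 3), ree], axis=1)

    inputs, targets = [], []
    for start in range(T - n_in - n_out + 1):
        split = start + n_in
        inputs.append(features[start:split])
        targets.append(skeleton[split:split + n_out])

    if not inputs:  # recording shorter than one full window
        return (np.empty((0, n_in, J * 3 + 3)),
                np.empty((0, n_out, J, 3)))
    return np.stack(inputs), np.stack(targets)
```

With a 10 s recording at the assumed 10 frames per second and 9 joints, each input window holds 50 frames of 30 features and each target holds 25 frames of joint positions.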

The attention-based deep learning model has been trained and evaluated on a dataset created with human volunteers and an anthropomorphic robot, simulating handover operations in which the robot is the giver and the human is the receiver. For each operation, the human skeleton is obtained using OpenPose with an Intel RealSense D435i camera mounted inside the robot's head. The results show a marked improvement in the prediction of the human's right hand and of the 3D body pose compared with other methods.
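One way to compare right hand and whole body predictions is a mean per-joint Euclidean error. The sketch below is an assumption for illustration, not the metric reported in the paper, which the abstract does not name. The index `RIGHT_WRIST = 4` follows OpenPose's BODY_25 ordering; the joint layout used in the authors' dataset may differ.

```python
import numpy as np

# RWrist in OpenPose's BODY_25 ordering; the paper's joint layout may differ.
RIGHT_WRIST = 4


def mean_joint_error(pred, target, joint=None):
    """Mean Euclidean distance between predicted and true joint positions.

    pred, target: (frames, joints, 3) arrays in the same units.
    joint: optional joint index; if given, only that joint is scored.
    """
    dist = np.linalg.norm(pred - target, axis=-1)  # (frames, joints)
    if joint is not None:
        dist = dist[:, joint]
    return float(dist.mean())
```

Calling `mean_joint_error(pred, target, joint=RIGHT_WRIST)` scores only the hand, while omitting `joint` averages over every joint in the skeleton.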

All authors work at the Institut de Robótica i Informática Industrial de Barcelona (IRI), Catalonia, Spain.

Work supported by the Spanish State Research Agency through the ROCOTRANSP project (PID2019-106702RB-C21/AEI/10.13039/501100011033) and the EU project CANOPIES (H2020-ICT-2020-2-101016906).

References

Aksan, E., Kaufmann, M., Hilliges. O.: Structured prediction helps 3D human motion modelling. CoRR abs/1910.09070 (2019). arXiv: 1910.09070

Barsoum, E., Kender, J., Liu, Z.: HP-GAN: probabilistic 3D human motion prediction via GAN. CoRR abs/1711.09561 (2017). arXiv: 1711.09561

Basili, P., et al.: Investigating human-human approach and hand-over. In: Human Centered Robot Systems, Cognition, Interaction, Technology (2009)

Bütepage, J., Kjellström, H., Kragic, D.: Anticipating many futures: online human motion prediction and generation for human-robot interaction, pp. 1–9, May 2018. https://doi.org/10.1109/ICRA.2018.8460651

Cao, Z., et al.: OpenPose: realtime multi-person 2D pose estimation using part affinity fields. CoRR abs/1812.08008 (2018). arXiv:1812.08008

Corona, E., et al.: Context-aware human motion prediction. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020

Fong, T., Nourbakhsh, I., Dautenhahn, K.: A survey of socially interactive robots. Robot. Auton. Syst. 42(3/4), 143–166 (2003)

Fragkiadaki, K., Levine, S., Malik. J.: Recurrent network models for kinematic tracking. CoRR abs/1508.00271 (2015). arXiv:1508.00271

Hernandez, A., Gall, J., Moreno-Noguer, F.: Human motion prediction via spatio-temporal inpainting. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019

Hoffman, G., Breazeal, C.: Cost-based anticipatory action selection for human–robot fluency. IEEE Trans. Robot. 23(5), 952961 (2007). https://doi.org/10.1109/TRO.2007.907483

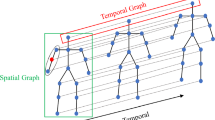

Jain, A., et al.: Structural-RNN: deep learning on spatio-temporal graphs. CoRR abs/1511.05298 (2015). arXiv:1511.05298

Lang, M., et al.: Object handover prediction using gaussian processes clustered with trajectory classification (2017). arXiv:1707.02745[cs.RO]

Laplaza, J., et al.: Attention deep learning based model for predicting the 3D Human Body Pose using the Robot Human Handover Phases. In: 2021 30th IEEE International Conference on Robot Human Interactive Communication (RO-MAN), pp. 161–166 (2021). https://doi.org/10.1109/ROMAN50785.2021.9515402

Mao, W., Liu, M., Salzmann, M.: History repeats itself: human motion prediction via motion attention (2020). arXiv: 2007.11755[cs.CV]

Martinez, J., Black, M.J., Romero, J.: On human motion prediction using recurrent neural networks. In: CVPR (2017)

Parastegari, S., et al.: Modeling human reaching phase in human-human object handover with application in robot-human handover. In: 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3597–3602 (2017). https://doi.org/10.1109/IROS.2017.8206205

Petrovich, M., Black, M.J., Varol, G.: Action-conditioned 3D human motion synthesis with transformer VAE (2021). arXiv:2104.05670[cs.CV]

Vaswani, A., et al.: Attention is all you need. CoRR abs/1706.03762 (2017). arXiv:1706.03762

Villani, V., et al.: Survey on human–robot collaboration in industrial settings: safety, intuitive interfaces and applications. Mechatronics 55, 248–266 (2018)

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Laplaza, J., Moreno-Noguer, F., Sanfeliu, A. (2023). Context Attention: Human Motion Prediction Using Context Information and Deep Learning Attention Models. In: Tardioli, D., Matellán, V., Heredia, G., Silva, M.F., Marques, L. (eds) ROBOT2022: Fifth Iberian Robotics Conference. ROBOT 2022. Lecture Notes in Networks and Systems, vol 589. Springer, Cham. https://doi.org/10.1007/978-3-031-21065-5_9

DOI: https://doi.org/10.1007/978-3-031-21065-5_9

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21064-8

Online ISBN: 978-3-031-21065-5

eBook Packages: Intelligent Technologies and Robotics (R0)