Abstract

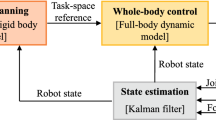

Model-free reinforcement learning (RL) for legged locomotion commonly relies on a physics simulator that can accurately predict the behavior of every degree of freedom of the robot. In contrast, many model predictive control strategies use approximate reduced-order models. In this work, we abandon the conventional use of high-fidelity dynamics models in RL and instead ask what can be achieved when RL is applied to quadrupedal locomotion with a much simpler centroidal model. We show that RL-based control of the accelerations of a centroidal model is surprisingly effective when combined with a quadratic program that realizes the commanded actions via ground contact forces. This approach allows for a simple reward structure, reduced computational costs, and robust sim-to-real transfer. We show the generality of the method by demonstrating flat-terrain gaits, stepping-stone locomotion, two-legged in-place balance, balance beam locomotion, and direct sim-to-real transfer.
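To make the idea of realizing commanded centroidal accelerations through ground contact forces more concrete, the following is a minimal sketch and not the formulation used in the paper. It assumes a single rigid body with point feet, neglects the gyroscopic term, and drops the friction-cone and unilateral-contact inequality constraints that a full quadratic program would normally include, so the problem reduces to a regularized least-squares solve. All names (`contact_forces`, `foot_offsets`, `reg`) and the numeric values are hypothetical.

```python
import numpy as np

GRAVITY = np.array([0.0, 0.0, -9.81])  # world-frame gravity, m/s^2


def skew(r):
    """Cross-product matrix, so that skew(r) @ f equals np.cross(r, f)."""
    x, y, z = r
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])


def contact_forces(mass, inertia, foot_offsets, lin_acc, ang_acc, reg=1e-6):
    """Find contact forces that best match desired centroidal accelerations.

    Minimizes ||A f - b||^2 + reg * ||f||^2, where A f stacks the net force
    and the net moment about the center of mass. Inequality constraints
    (friction cones, non-negative normal force) are omitted in this sketch.
    """
    foot_offsets = np.asarray(foot_offsets, dtype=float)
    n = len(foot_offsets)
    # Row block 1: sum of forces. Row block 2: sum of r_i x f_i.
    A = np.vstack([
        np.hstack([np.eye(3)] * n),
        np.hstack([skew(r) for r in foot_offsets]),
    ])
    # Newton-Euler right-hand side for the commanded accelerations.
    b = np.concatenate([
        mass * (np.asarray(lin_acc, dtype=float) - GRAVITY),
        np.asarray(inertia, dtype=float) @ np.asarray(ang_acc, dtype=float),
    ])
    # Normal equations of the regularized least-squares problem.
    H = A.T @ A + reg * np.eye(3 * n)
    f = np.linalg.solve(H, A.T @ b)
    return f.reshape(n, 3)


# Four stance feet placed symmetrically around the center of mass (meters).
feet = [[0.2, 0.1, -0.3], [0.2, -0.1, -0.3],
        [-0.2, 0.1, -0.3], [-0.2, -0.1, -0.3]]
inertia = np.diag([0.1, 0.3, 0.3])  # assumed world-frame inertia, kg m^2

# Zero commanded acceleration: the forces should simply support the weight.
forces = contact_forces(12.0, inertia, feet, np.zeros(3), np.zeros(3))
print(forces)
```

In this toy setting the policy's action would play the role of `lin_acc` and `ang_acc`. A practical controller would add the contact constraints and pass the problem to a dedicated QP solver.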

Notes

1. Laikago and A1 are quadrupedal robots made by Unitree Robotics.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Xie, Z., Da, X., Babich, B., Garg, A., van de Panne, M. (2023). GLiDE: Generalizable Quadrupedal Locomotion in Diverse Environments with a Centroidal Model. In: LaValle, S.M., O’Kane, J.M., Otte, M., Sadigh, D., Tokekar, P. (eds) Algorithmic Foundations of Robotics XV. WAFR 2022. Springer Proceedings in Advanced Robotics, vol 25. Springer, Cham. https://doi.org/10.1007/978-3-031-21090-7_31

DOI: https://doi.org/10.1007/978-3-031-21090-7_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21089-1

Online ISBN: 978-3-031-21090-7

eBook Packages: Intelligent Technologies and Robotics (R0)