Abstract

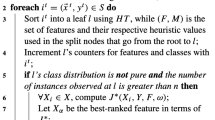

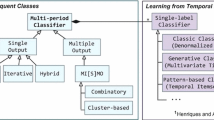

Over time, the performance of real-world predictive models degrades due to distributional shifts and learned spurious correlations. Typical countermeasures, such as retraining and online learning, can be costly and challenging in production, especially when accounting for business constraints and culture. Causality-based approaches aim to identify invariant mechanisms from data, leading to more robust predictors, possibly at the expense of short-term performance. However, most such approaches scale poorly to high dimensions or require extra knowledge, such as a segmentation of the data into representative environments. In this work, we develop Time Robust Trees, a new algorithm for inducing decision trees with an inductive bias towards learning time-invariant rules. The algorithm's main innovation is to replace the usual information-gain split criterion (or similar) with a new criterion that examines the imbalance among classes induced by the split through time. Experiments with real data show that our approach improves long-term generalization, offering an alternative for classification problems under distributional shift.
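The abstract does not spell out the new split criterion, so the following is only a minimal sketch of one possible reading, not the authors' implementation. It assumes each example carries a time-period label, scores a candidate split by its Gini gain within each period separately, and keeps the worst (minimum) per-period gain. The function names (`gini`, `gain`, `time_robust_gain`), the choice of Gini impurity, and the minimum as the aggregation are all assumptions made for illustration.

```python
import numpy as np


def gini(y):
    """Gini impurity of a label array (0.0 for an empty array)."""
    if len(y) == 0:
        return 0.0
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - float(np.sum(p ** 2))


def gain(y, left_mask):
    """Impurity reduction obtained by splitting y with a boolean mask."""
    n = len(y)
    n_left = int(left_mask.sum())
    n_right = n - n_left
    if n_left == 0 or n_right == 0:
        return 0.0  # a split that sends everything to one side gains nothing
    return (
        gini(y)
        - (n_left / n) * gini(y[left_mask])
        - (n_right / n) * gini(y[~left_mask])
    )


def time_robust_gain(x, y, period, threshold):
    """Hypothetical criterion: worst-case gain of `x <= threshold` over periods.

    Assumption: a rule counts as time-invariant only if it separates the
    classes in every period, so the minimum per-period gain is used.
    """
    left = x <= threshold
    per_period = [
        gain(y[period == p], left[period == p]) for p in np.unique(period)
    ]
    return min(per_period)


# Toy data: two periods of four examples each.
period = np.array([0, 0, 0, 0, 1, 1, 1, 1])
y = np.array([0, 0, 1, 1, 0, 0, 1, 1])
stable = np.array([0, 0, 1, 1, 0, 0, 1, 1])    # predictive in both periods
spurious = np.array([0, 0, 1, 1, 0, 1, 0, 1])  # predictive only in period 0

print("pooled gain, spurious:", gain(y, spurious <= 0.5))
print("time-robust gain, spurious:", time_robust_gain(spurious, y, period, 0.5))
print("time-robust gain, stable:", time_robust_gain(stable, y, period, 0.5))
```

In this toy setup, the pooled gain still rewards the feature that is informative in only one period, while the worst-case score does not. That is the kind of inductive bias towards time-invariant rules the abstract describes, under the assumptions stated above.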

Notes

- 2. The source code, the datasets used, and installation instructions are available on GitHub at https://github.com/lgmoneda/time-robust-tree-paper.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Moneda, L., Mauá, D. (2022). Time Robust Trees: Using Temporal Invariance to Improve Generalization. In: Xavier-Junior, J.C., Rios, R.A. (eds) Intelligent Systems. BRACIS 2022. Lecture Notes in Computer Science, vol 13653. Springer, Cham. https://doi.org/10.1007/978-3-031-21686-2_27

DOI: https://doi.org/10.1007/978-3-031-21686-2_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21685-5

Online ISBN: 978-3-031-21686-2

eBook Packages: Computer Science, Computer Science (R0)