Abstract

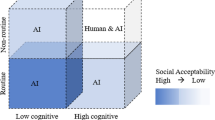

Despite the growing popularity of Artificial Intelligence-based (AI-based) models for assisting human decision-makers, little is known about how managers in business environments approach AI-assisted decision-making. Our research is therefore guided by two questions: (1) What facets make the Human (Manager)-AI decision-making process trustworthy? (2) Does trust in AI depend on the degree to which the AI agent is humanized? To answer these questions, we combined the business and human-computer interaction fields by considering the design of AI applications from both a social and a technological angle. Our results show that (a) AI is preferred for operational rather than strategic decisions, as well as for decisions that indirectly affect individuals; (b) the ability to interpret the decision-making process of AI agents would help improve user trust and alleviate calibration bias; (c) humanoid interaction styles such as conversations were believed to improve the interpretability of the decision-making process; and (d) organizational change management was essential for adopting AI technologies, more so than with previous emerging technologies. Additionally, our survey analysis indicates that trustworthiness scores are higher when interpretability and model confidence are present in a decision-making process involving an AI agent.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Tuncer, S., Ramirez, A. (2022). Exploring the Role of Trust During Human-AI Collaboration in Managerial Decision-Making Processes. In: Chen, J.Y.C., Fragomeni, G., Degen, H., Ntoa, S. (eds) HCI International 2022 – Late Breaking Papers: Interacting with eXtended Reality and Artificial Intelligence. HCII 2022. Lecture Notes in Computer Science, vol 13518. Springer, Cham. https://doi.org/10.1007/978-3-031-21707-4_39

DOI: https://doi.org/10.1007/978-3-031-21707-4_39

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21706-7

Online ISBN: 978-3-031-21707-4

eBook Packages: Computer Science, Computer Science (R0)