Abstract

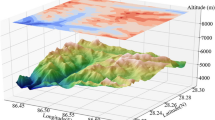

Wilderness Search and Rescue (WiSAR) operations require navigating large unknown environments and locating missing victims quickly and with high precision. Several studies have used deep reinforcement learning (DRL) to enable the autonomous navigation of Unmanned Aerial Vehicles (UAVs) in unknown search-and-rescue environments. However, these studies focused on indoor environments and used fixed-altitude navigation, a significantly less complex setting than realistic WiSAR operations. This paper presents a DRL-based approach for WiSAR in an unknown mountain landscape environment. To manage the complexity of the problem, the approach decomposes it into five modules: Information Map, DRL-based Navigation, DRL-based Exploration Planner (waypoint generator), Obstacle Detection, and Human Detection. Curriculum learning is used to enable the Navigation module to learn 3D navigation. The approach was evaluated both under semi-autonomous operation, where waypoints are provided externally by a human, and under full autonomy. The results show that the system detects all humans when waypoints are generated randomly or by a human, whereas DRL-based waypoint generation yields a lower recall of 75%.
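The modular decomposition can be illustrated with a minimal, hypothetical sketch. It is not the authors' implementation: the world is flattened to 2D, the DRL Navigation policy is replaced by a straight-line controller, the Exploration Planner is a random waypoint generator (one of the waypoint sources used in the evaluation), Human Detection is reduced to a distance check, and Obstacle Detection is omitted. All names (`InformationMap`, `random_planner`, `navigate`, `run_mission`) and parameters (cell size, step length, sensor radius) are assumptions chosen for illustration. Recall is computed as the fraction of humans present that were detected.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Iterator

# Hypothetical 2D simplification: the paper's UAV navigates in 3D; altitude is omitted here.
Point = tuple[float, float]


@dataclass
class InformationMap:
    """Information Map stand-in: a coverage grid of cells the UAV has observed."""
    size: int            # grid is size x size cells
    cell: float = 10.0   # cell edge length (arbitrary units)
    seen: set = field(default_factory=set)

    def observe(self, pos: Point, radius: float) -> None:
        # Mark every in-bounds cell whose centre lies inside the sensor footprint.
        r = int(radius // self.cell) + 1
        cx, cy = int(pos[0] // self.cell), int(pos[1] // self.cell)
        for i in range(cx - r, cx + r + 1):
            for j in range(cy - r, cy + r + 1):
                centre = ((i + 0.5) * self.cell, (j + 0.5) * self.cell)
                if 0 <= i < self.size and 0 <= j < self.size and math.dist(pos, centre) <= radius:
                    self.seen.add((i, j))

    def coverage(self) -> float:
        return len(self.seen) / (self.size * self.size)


def random_planner(rng: random.Random, extent: float) -> Iterator[Point]:
    # Exploration Planner stand-in: random waypoints (one of the evaluated waypoint
    # sources); a trained DRL policy or a human operator would replace this generator.
    while True:
        yield (rng.uniform(0, extent), rng.uniform(0, extent))


def navigate(pos: Point, goal: Point, step: float) -> Point:
    # Navigation stand-in: one straight-line step towards the waypoint,
    # where the paper uses a learned DRL policy instead.
    d = math.dist(pos, goal)
    if d <= step:
        return goal
    return (pos[0] + step * (goal[0] - pos[0]) / d, pos[1] + step * (goal[1] - pos[1]) / d)


def run_mission(humans: list[Point], planner: Iterator[Point], info_map: InformationMap,
                steps: int = 2000, step: float = 5.0, sensor: float = 15.0) -> float:
    pos: Point = (0.0, 0.0)
    detected: set[int] = set()
    goal = next(planner)
    for _ in range(steps):
        pos = navigate(pos, goal, step)
        info_map.observe(pos, sensor)
        # Human Detection stand-in: anyone inside the sensor radius counts as found.
        detected |= {i for i, h in enumerate(humans) if math.dist(pos, h) <= sensor}
        if pos == goal:
            goal = next(planner)  # waypoint reached: request the next one
    # Recall = humans detected / humans present (no false positives in this toy model).
    return len(detected) / len(humans)


if __name__ == "__main__":
    rng = random.Random(0)
    humans = [(rng.uniform(0, 200), rng.uniform(0, 200)) for _ in range(4)]
    imap = InformationMap(size=20)
    recall = run_mission(humans, random_planner(rng, 200.0), imap)
    print(f"recall={recall:.2f}, coverage={imap.coverage():.2f}")
```

Because the planner is just an iterator of waypoints, swapping `random_planner` for a generator that replays operator-chosen waypoints mirrors the semi-autonomous mode, while a learned policy would occupy the same slot under full autonomy. In this toy model, detecting 3 of 4 hypothetical humans would correspond to a recall of 0.75.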

Acknowledgement

This work is partially supported by the Australian Research Council Grant DP200101211.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Talha, M., Hussein, A., Hossny, M. (2022). Autonomous UAV Navigation in Wilderness Search-and-Rescue Operations Using Deep Reinforcement Learning. In: Aziz, H., Corrêa, D., French, T. (eds) AI 2022: Advances in Artificial Intelligence. AI 2022. Lecture Notes in Computer Science, vol 13728. Springer, Cham. https://doi.org/10.1007/978-3-031-22695-3_51

DOI: https://doi.org/10.1007/978-3-031-22695-3_51

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22694-6

Online ISBN: 978-3-031-22695-3

eBook Packages: Computer Science, Computer Science (R0)