Abstract

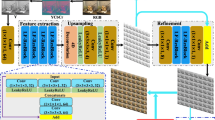

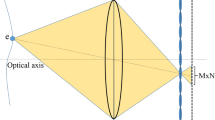

Light field (LF) angular super-resolution (SR) aims to reconstruct a densely sampled LF from a sparsely sampled one. Accurate angular SR depends on exploiting the complementary information among the input views, which is important but challenging, especially when the scene contains large disparities. In this paper, we propose to reconstruct a dense correspondence field among different views for LF angular SR. Exploiting the geometric structure of the LF, we first capture correspondences along the horizontal and vertical axes of the input views with a global receptive field. We then incorporate the linear structure prior among angular viewpoints to reconstruct a dense correspondence field, which establishes the relationship between each target view and the input views. Next, we develop a view projection approach that projects the input views to the target angular positions. Moreover, we introduce a projection loss to preserve the LF parallax structure. Extensive experiments demonstrate that our network recovers accurate details and preserves the LF parallax structure, and comparative results on synthetic and real-world datasets show its advantage over state-of-the-art methods.
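The abstract gives no implementation details, so the NumPy sketch below only illustrates the general idea of a correspondence field that obeys a linear structure prior and drives view projection. It assumes a Lambertian scene and a per-pixel disparity map `disparity` (pixels per unit angular step, defined on the target view's grid), so that a target-view pixel (x, y) at angular position (u_t, v_t) corresponds to (x + d(u_s - u_t), y + d(v_s - v_t)) in an input view at (u_s, v_s). The function names, the sign convention and the L1 consistency term are hypothetical; in particular, `projection_consistency_l1` is not the paper's projection loss.

```python
import numpy as np


def correspondence_field(disparity, src_uv, tgt_uv):
    """Per-pixel displacement (dx, dy) from the target view to a source view.

    Assumption: `disparity` is in pixels per unit angular step and is defined
    on the target view's pixel grid (hypothetical convention).
    """
    du = src_uv[0] - tgt_uv[0]  # horizontal angular offset
    dv = src_uv[1] - tgt_uv[1]  # vertical angular offset
    # Linear structure prior: displacement scales linearly with angular offset.
    return disparity * du, disparity * dv


def bilinear_sample(img, x, y):
    """Sample a 2-D image at float coordinates, clamping to the image border."""
    h, w = img.shape
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0
    wy = y - y0
    top = img[y0, x0] * (1 - wx) + img[y0, x1] * wx
    bottom = img[y1, x0] * (1 - wx) + img[y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def project_view(src_img, disparity, src_uv, tgt_uv):
    """Backward-warp a grayscale source view to the target angular position."""
    h, w = src_img.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    dx, dy = correspondence_field(disparity, src_uv, tgt_uv)
    return bilinear_sample(src_img, xs + dx, ys + dy)


def projection_consistency_l1(pred_tgt, src_img, disparity, src_uv, tgt_uv):
    """Hypothetical L1 term between a predicted target view and a source view
    projected to the same angular position (occlusions are ignored)."""
    warped = project_view(src_img, disparity, src_uv, tgt_uv)
    return float(np.mean(np.abs(pred_tgt - warped)))


if __name__ == "__main__":
    img = np.tile(np.arange(5, dtype=float), (4, 1))  # each row is [0, 1, 2, 3, 4]
    out = project_view(img, np.ones((4, 5)), src_uv=(1, 0), tgt_uv=(0, 0))
    print(out[0])  # [1. 2. 3. 4. 4.]
```

With a constant disparity of 1 and a one-step horizontal angular offset, `project_view` shifts the content one pixel to the left and clamps at the border. Occlusions and non-Lambertian effects, which a learned method has to handle, are ignored in this sketch.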

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Mo, Y., Wang, Y., Wang, L., Yang, J., An, W. (2023). Light Field Angular Super-Resolution via Dense Correspondence Field Reconstruction. In: Karlinsky, L., Michaeli, T., Nishino, K. (eds) Computer Vision – ECCV 2022 Workshops. ECCV 2022. Lecture Notes in Computer Science, vol 13802. Springer, Cham. https://doi.org/10.1007/978-3-031-25063-7_25

DOI: https://doi.org/10.1007/978-3-031-25063-7_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-25062-0

Online ISBN: 978-3-031-25063-7

eBook Packages: Computer Science, Computer Science (R0)