Abstract

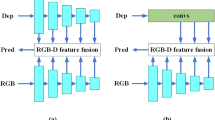

RGB-D salient object detection (SOD) aims to detect the most attractive objects in a scene. A key problem facing existing RGB-D SOD methods is how to effectively integrate the different context information carried by the RGB image and the depth map. In this work, we propose the Siamese Residual Interactive Refinement Network (SiamRIR), an encoder-decoder network, to address this problem. Concretely, we adopt a Siamese network with shared parameters to encode the two modalities and fuse them in the decoding phase. We then design the Multi-scale Residual Interactive Refinement Block (RIRB), which contains a Residual Interactive Module (RIM) and a Residual Refinement Module (RRM). This block exploits multiple types of cues to fuse and refine features: RIM lets the two modalities interact so as to integrate their complementary regions in a residual manner, and RRM refines the features during the fusion phase by incorporating spatial detail context in a multi-scale manner. Extensive experiments on five benchmarks demonstrate that our method outperforms state-of-the-art RGB-D SOD methods both quantitatively and qualitatively.
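The abstract does not give the equations for RIM or RRM, so the following NumPy sketch only illustrates the general data flow it describes, under loudly stated assumptions. The encoder is a toy 1x1 linear map with ReLU, and a single instance of it encodes both modalities (the shared-parameter, Siamese part); the depth map is assumed to be replicated to three channels so it fits the shared encoder. RIM is assumed to be mutual sigmoid gating with a residual addition, and RRM is assumed to be averaged multi-scale pooled context added back to the input. All names (`SharedEncoder`, `rim`, `rrm`, `rirb`) are hypothetical and are not the authors' code.

```python
import numpy as np

# Hypothetical sketch of the SiamRIR data flow; NOT the authors' implementation.
rng = np.random.default_rng(0)


class SharedEncoder:
    """Toy encoder: a per-pixel linear map (a 1x1 convolution) followed by ReLU.

    One instance encodes both modalities, so the RGB and depth branches share
    the same weights, which is what makes the encoder Siamese.
    """

    def __init__(self, in_ch: int, out_ch: int):
        self.w = rng.standard_normal((out_ch, in_ch)) * 0.1

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x has shape (C_in, H, W); the output has shape (C_out, H, W).
        return np.maximum(np.einsum("oc,chw->ohw", self.w, x), 0.0)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def rim(f_rgb, f_dep):
    """Assumed residual interaction: each modality is gated by the other,
    and the gated term is added back to the input (residual connection)."""
    r = f_rgb + f_rgb * sigmoid(f_dep)
    d = f_dep + f_dep * sigmoid(f_rgb)
    return r, d


def avg_pool(x, k):
    # Non-overlapping k x k average pooling; H and W must be divisible by k.
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).mean(axis=(2, 4))


def upsample(x, k):
    # Nearest-neighbour upsampling by an integer factor k.
    return x.repeat(k, axis=1).repeat(k, axis=2)


def rrm(f, scales=(1, 2, 4)):
    """Assumed multi-scale residual refinement: average the context pooled at
    several scales, upsample it back, and add it to the input."""
    ctx = sum(upsample(avg_pool(f, k), k) for k in scales) / len(scales)
    return f + ctx


def rirb(f_rgb, f_dep):
    # Interact (RIM), fuse by addition, then refine (RRM).
    r, d = rim(f_rgb, f_dep)
    return rrm(r + d)


# Usage: an 8x8 RGB image and an 8x8 depth map.
rgb = rng.random((3, 8, 8))
depth = rng.random((1, 8, 8))
encoder = SharedEncoder(in_ch=3, out_ch=4)
f_rgb = encoder(rgb)
f_dep = encoder(np.repeat(depth, 3, axis=0))  # depth replicated to 3 channels
fused = rirb(f_rgb, f_dep)
print(fused.shape)  # (4, 8, 8)
```

Reusing one encoder instance means the two streams together use only the parameters of a single encoder, rather than twice that for two independent streams of the same architecture. How SiamRIR actually adapts the one-channel depth map to the shared encoder is not stated in the abstract; channel replication here is only an assumption.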

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China [grant nos. 61922064, U2033210, 62101387] and the Zhejiang Xinmiao Talents Program [grant no. 2022R429B046].

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Hu, M., Zhang, X., Zhao, L. (2023). Multi-scale Residual Interaction for RGB-D Salient Object Detection. In: Wang, L., Gall, J., Chin, TJ., Sato, I., Chellappa, R. (eds) Computer Vision – ACCV 2022. ACCV 2022. Lecture Notes in Computer Science, vol 13843. Springer, Cham. https://doi.org/10.1007/978-3-031-26313-2_35

DOI: https://doi.org/10.1007/978-3-031-26313-2_35

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26312-5

Online ISBN: 978-3-031-26313-2

eBook Packages: Computer Science, Computer Science (R0)