Abstract

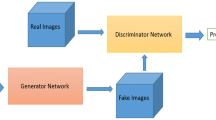

Although unconditional feature inversion is the foundation of many image synthesis applications, training an inverter demands a high computational budget, a large decoding capacity, and the imposition of conditions such as autoregressive priors. To address these limitations, we propose the use of adversarially robust representations as a perceptual primitive for feature inversion. We train an adversarially robust encoder to extract disentangled and perceptually aligned image representations, making them easily invertible. By training a simple generator with the mirror architecture of the encoder, we achieve superior reconstruction quality and generalization over standard models. Based on this, we propose an adversarially robust autoencoder and demonstrate its improved performance on style transfer, image denoising and anomaly detection tasks. Compared to recent ImageNet feature inversion methods, our model attains improved performance with significantly less complexity. Code is available at https://github.com/renanrojasg/adv_robust_autoencoder.
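
As a reading aid, the two training stages described in the abstract can be sketched in generic notation. All symbols below are assumptions introduced here and are not taken from the paper: $f_\theta$ is the encoder, $h$ a classification head (its parameters are folded into $\theta$ for brevity), $g_\phi$ the generator, $\varepsilon$ the perturbation budget under an $\ell_p$ norm, $\mathcal{L}$ a classification loss, and $d$ a reconstruction distance. The first expression is the widely used min-max form of adversarial training; the second is a plain reconstruction objective with the encoder assumed to be held fixed, and it may omit terms that the authors actually use.

```latex
% Stage 1 (sketch): adversarially robust encoder via min-max adversarial training
\min_{\theta}\; \mathbb{E}_{(x,y)\sim\mathcal{D}}
  \Big[ \max_{\|\delta\|_p \le \varepsilon}
        \mathcal{L}\big(h(f_\theta(x+\delta)),\, y\big) \Big]

% Stage 2 (sketch): generator trained to invert the robust encoder
% \theta^\ast denotes the encoder parameters obtained in Stage 1 (assumed fixed)
\min_{\phi}\; \mathbb{E}_{x\sim\mathcal{D}}
  \Big[ d\big(g_\phi(f_{\theta^\ast}(x)),\, x\big) \Big]
```

Under this reading, the autoencoder is the composition $g_\phi \circ f_{\theta^\ast}$, and the downstream tasks named in the abstract operate on its latent features or its reconstructions.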



Acknowledgements

AN was supported by NSF Grants No. 1850117 and 2145767, and by donations from the NaphCare Foundation and Adobe Research. We are grateful for Kelly Price’s tireless assistance with our GPU servers at Auburn University.


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Rojas-Gomez, R.A., Yeh, R.A., Do, M.N., Nguyen, A. (2023). Inverting Adversarially Robust Networks for Image Synthesis. In: Wang, L., Gall, J., Chin, TJ., Sato, I., Chellappa, R. (eds) Computer Vision – ACCV 2022. ACCV 2022. Lecture Notes in Computer Science, vol 13846. Springer, Cham. https://doi.org/10.1007/978-3-031-26351-4_24


DOI: https://doi.org/10.1007/978-3-031-26351-4_24


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26350-7

Online ISBN: 978-3-031-26351-4

eBook Packages: Computer Science, Computer Science (R0)