Abstract

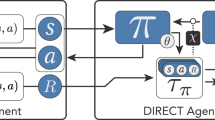

Imitation learning algorithms have been interpreted as variants of divergence minimization problems. The ability to compare occupancy measures between experts and learners is crucial to their effectiveness in learning from demonstrations. In this paper, we present tractable solutions by formulating imitation learning as minimization of the Sinkhorn distance between occupancy measures. The formulation combines the valuable properties of optimal transport metrics in comparing non-overlapping distributions with a cosine distance cost defined in an adversarially learned feature space. This leads to a highly discriminative critic network and an optimal transport plan that subsequently guide imitation learning. We evaluate the proposed approach using both the reward metric and the Sinkhorn distance metric on a number of MuJoCo experiments. The implementation and instructions for reproducing the results are available at https://github.com/gpapagiannis/sinkhorn-imitation.
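To make the central quantity concrete, the following is a minimal NumPy sketch of a Sinkhorn distance between two sets of samples under a cosine distance cost. It is an illustration under stated assumptions, not the authors' implementation (see the repository for that). The function names `cosine_cost` and `sinkhorn_distance`, the regularization value `reg`, and the iteration count are hypothetical choices. The cost is computed on raw feature vectors here, whereas the abstract describes a cost defined in an adversarially learned feature space.

```python
import numpy as np


def cosine_cost(x, y, eps=1e-8):
    """Pairwise cosine distance 1 - cos(x_i, y_j) between rows of x and y."""
    xn = x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)
    yn = y / (np.linalg.norm(y, axis=1, keepdims=True) + eps)
    return 1.0 - xn @ yn.T


def sinkhorn_distance(cost, reg=0.1, n_iters=200):
    """Entropy-regularized transport cost between two uniform empirical measures.

    `reg` and `n_iters` are illustrative defaults, not values from the paper.
    """
    n, m = cost.shape
    a = np.full(n, 1.0 / n)   # uniform weights on the first sample set
    b = np.full(m, 1.0 / m)   # uniform weights on the second sample set
    K = np.exp(-cost / reg)   # Gibbs kernel
    u = np.ones(n)
    v = np.ones(m)
    for _ in range(n_iters):  # Sinkhorn-Knopp scaling iterations
        u = a / (K @ v)
        v = b / (K.T @ u)
    plan = u[:, None] * K * v[None, :]  # approximate optimal transport plan
    return float(np.sum(plan * cost)), plan


# Stand-ins for learner and expert state-action samples (random data).
rng = np.random.default_rng(0)
learner = rng.normal(size=(6, 3))
expert = rng.normal(size=(5, 3))
distance, plan = sinkhorn_distance(cosine_cost(learner, expert))
```

In this sketch each entry of `plan` says how much mass is moved from a learner sample to an expert sample. Weighting the cost matrix by the plan gives the scalar distance that an imitation learner would aim to reduce.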

G. Papagiannis—Work done as a student at the University of Surrey.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Papagiannis, G., Li, Y. (2023). Imitation Learning with Sinkhorn Distances. In: Amini, MR., Canu, S., Fischer, A., Guns, T., Kralj Novak, P., Tsoumakas, G. (eds) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2022. Lecture Notes in Computer Science(), vol 13716. Springer, Cham. https://doi.org/10.1007/978-3-031-26412-2_8

DOI: https://doi.org/10.1007/978-3-031-26412-2_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26411-5

Online ISBN: 978-3-031-26412-2

eBook Packages: Computer Science (R0)