Abstract

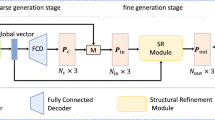

Existing sparse-to-dense methods for point cloud completion generally focus on designing refinement and expansion modules that expand the point cloud from sparse to dense. They overlook the need to preserve a well-performed generation process for points at the sparse level, which leads to the loss of shape priors in the dense point cloud. To resolve this challenge, we introduce the Transformer into both the feature extraction and point generation processes, and propose a Context-based Point Generation Network (CPGNet) with Point Context Extraction (PCE) and Context-based Point Transformation (CPT) blocks to control the point generation process at the sparse level. Our CPGNet infers the missing points at the sparse level via the PCE and CPT blocks, which provide well-arranged center points for generating the dense point cloud. The PCE block extracts both local and global context features of the observed points, and multiple PCE blocks in the encoder hierarchically offer geometric constraints and priors for point completion. The CPT block fully exploits the geometric contexts in the observed point cloud and transforms them into context features of the missing points. Multiple CPT blocks in the decoder progressively refine these context features and finally generate the center points for the missing shapes. Quantitative and visual comparisons on the PCN and ShapeNet-55 datasets demonstrate that our model outperforms state-of-the-art methods.
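To make the two block roles described above more concrete, here is a minimal NumPy sketch. It is not CPGNet's implementation: the abstract does not specify the layers, so we assume, purely for illustration, that a PCE-like block concatenates max-pooled kNN-neighbourhood features (local context) with a max-pooled shape descriptor (global context), and that a CPT-like step is single-head cross-attention in which features for the missing points attend to the observed context features. All function names, the `queries`, the weight matrices, and the sizes `k`, `C`, `D` are hypothetical, and random matrices stand in for learned parameters.

```python
import numpy as np


def knn_indices(points: np.ndarray, k: int) -> np.ndarray:
    """Indices (N, k) of the k nearest neighbours of each point (the point itself included)."""
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)  # (N, N) squared distances
    return np.argsort(d2, axis=1)[:, :k]


def local_global_context(points, k, w_local, w_global):
    """Hypothetical PCE-like block: concatenate local kNN context and global context.

    points: (N, 3) observed coordinates; w_local, w_global: (3, C) stand-in weights.
    Returns (N, 2C) features laid out as [local | global].
    """
    idx = knn_indices(points, k)                                   # (N, k)
    offsets = points[idx] - points[:, None, :]                     # (N, k, 3) relative positions
    local = np.maximum(offsets @ w_local, 0.0).max(axis=1)         # ReLU, max-pool over neighbours -> (N, C)
    global_feat = np.maximum(points @ w_global, 0.0).max(axis=0)   # ReLU, max-pool over all points -> (C,)
    return np.concatenate([local, np.broadcast_to(global_feat, local.shape)], axis=1)


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for numerical stability
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, context, w_q, w_k, w_v):
    """Hypothetical CPT-like step: missing-point queries (M, D) attend to observed context (N, D)."""
    q, k, v = queries @ w_q, context @ w_k, context @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (M, N), each row sums to 1
    return attn @ v                                          # (M, D_v)


# Usage with random stand-ins for learned parameters.
rng = np.random.default_rng(0)
C, D = 16, 32                                   # D = 2C, the width of the context features
observed = rng.standard_normal((128, 3))        # partial input cloud
ctx = local_global_context(observed, 8, rng.standard_normal((3, C)), rng.standard_normal((3, C)))
queries = rng.standard_normal((64, D))          # features for 64 hypothetical missing points
w_q, w_k, w_v = (rng.standard_normal((D, D)) for _ in range(3))
missing_feats = cross_attention(queries, ctx, w_q, w_k, w_v)   # (64, D)
centers = missing_feats @ rng.standard_normal((D, 3))          # (64, 3) sparse center points
print(ctx.shape, missing_feats.shape, centers.shape)           # -> (128, 32) (64, 32) (64, 3)
```

The local branch uses relative offsets, so it is unchanged when the whole cloud is translated, while the global branch gives every point the same shape-level descriptor. The final linear head maps the transformed features to 3D coordinates, standing in for the sparse center points that a later stage would expand into a dense cloud; in a trained network the queries and all weights would be learned rather than random.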

Acknowledgements

This work was supported by Shandong Provincial Natural Science Foundation under Grant ZR2021QF062.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lu, L., Li, R., Wei, H., Zhao, Y., Li, R. (2023). Context-Based Point Generation Network for Point Cloud Completion. In: Tanveer, M., Agarwal, S., Ozawa, S., Ekbal, A., Jatowt, A. (eds) Neural Information Processing. ICONIP 2022. Lecture Notes in Computer Science, vol 13623. Springer, Cham. https://doi.org/10.1007/978-3-031-30105-6_37

DOI: https://doi.org/10.1007/978-3-031-30105-6_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-30104-9

Online ISBN: 978-3-031-30105-6

eBook Packages: Computer Science, Computer Science (R0)