Abstract

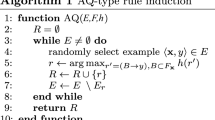

This paper presents a new algorithm that filters inconsistent instances out of a training dataset before the data is used by machine learning algorithms or for training neural networks. The algorithm builds on the previous state-of-the-art algorithm, which uses the concept of local sets. A modification of the definition of local sets changes the properties of the algorithm. The modified definition is combined with locality-sensitive hashing for nearest-neighbor search, yielding a new algorithm that is both efficient (\(O(n\log n)\)) and accurate.

Results obtained on many benchmarks show that the algorithm is as accurate as its predecessor while substantially reducing the time complexity.
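To make the idea of filtering with local sets more concrete, the following is a minimal sketch of a baseline filter, not the algorithm proposed in the paper. It assumes a definition of local sets commonly used in the instance-selection literature: the local set of an instance contains the same-class instances that lie strictly closer to it than its nearest enemy (the nearest instance of a different class). An instance is kept when its usefulness (the number of local sets it belongs to) is at least its harmfulness (the number of instances for which it is the nearest enemy). The function name `local_set_filter`, the choice to exclude an instance from its own local set, and the brute-force \(O(n^2)\) distance computation are all assumptions of this sketch; the paper's modified definition and its locality-sensitive hashing search are not reproduced here.

```python
import numpy as np


def local_set_filter(X, y):
    """Return a boolean mask of the instances kept by a baseline local-set filter.

    Hypothetical helper for illustration only: brute-force O(n^2) distances,
    no locality-sensitive hashing, and the unmodified local-set definition.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n = len(X)

    # Pairwise Euclidean distances between all instances.
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))

    # enemy[i, j] is True when i and j carry different labels.
    enemy = y[:, None] != y[None, :]
    enemy_dist = np.where(enemy, dist, np.inf)
    nearest_enemy = enemy_dist.argmin(axis=1)
    radius = enemy_dist.min(axis=1)      # distance to the nearest enemy
    has_enemy = np.isfinite(radius)      # False if only one class is present

    # in_local_set[i, j]: j is in the local set of i
    # (same class, strictly closer than i's nearest enemy, j != i).
    in_local_set = (~enemy) & (dist < radius[:, None])
    np.fill_diagonal(in_local_set, False)

    usefulness = in_local_set.sum(axis=0)
    harmfulness = np.bincount(nearest_enemy[has_enemy], minlength=n)
    return usefulness >= harmfulness


# Two grid-shaped classes plus one point labeled 1 placed inside class 0.
class0 = [[x, r] for x in (0, 1, 2) for r in range(4)]
class1 = [[x, r] for x in (4, 5, 6) for r in range(4)]
X = np.array(class0 + class1 + [[1.0, 1.5]])
y = np.array([0] * 12 + [1] * 12 + [1])
keep = local_set_filter(X, y)
X_filtered, y_filtered = X[keep], y[keep]
```

In this toy dataset the last point lies in no local set and is the nearest enemy of at least one class-0 instance, so the filter drops it. The quadratic distance matrix is the cost that an approximate nearest-neighbor search is meant to avoid.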

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Jankowski, N. (2023). A Fast and Efficient Algorithm for Filtering the Training Dataset. In: Tanveer, M., Agarwal, S., Ozawa, S., Ekbal, A., Jatowt, A. (eds) Neural Information Processing. ICONIP 2022. Lecture Notes in Computer Science, vol 13623. Springer, Cham. https://doi.org/10.1007/978-3-031-30105-6_42

DOI: https://doi.org/10.1007/978-3-031-30105-6_42

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-30104-9

Online ISBN: 978-3-031-30105-6

eBook Packages: Computer Science, Computer Science (R0)