Abstract

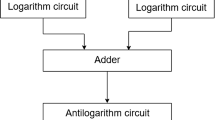

The posit numeric format has attracted growing attention in recent years. Its tapered precision makes it especially well suited to many applications, including machine learning. However, because the bit-widths of its components are dynamic, posit arithmetic costs more to implement in hardware than its floating-point counterpart. To reduce this cost, this paper proposes approximate logarithmic designs for posit multiplication, division, and square root. The results indicate that approximate logarithmic units are better suited to applications that tolerate large errors but require low power consumption, such as machine learning algorithms.
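The abstract does not describe the proposed circuits, so the following is only a minimal sketch of the general idea behind approximate logarithmic arithmetic, assuming the classical Mitchell approximation log2(1 + f) ≈ f for 0 ≤ f < 1. It operates on ordinary positive Python floats rather than on posit bit fields, and the function names (`approx_log2`, `approx_exp2`, `approx_mul`, `approx_div`, `approx_sqrt`) are hypothetical, chosen for illustration only; this is not the authors' hardware design.

```python
import math


def approx_log2(x: float) -> float:
    """Mitchell-style estimate: log2(2**k * (1 + f)) ~= k + f, with 0 <= f < 1."""
    if x <= 0.0:
        raise ValueError("this sketch handles positive operands only")
    m, e = math.frexp(x)   # x = m * 2**e with 0.5 <= m < 1
    k = e - 1              # rewrite as x = (1 + f) * 2**k
    f = 2.0 * m - 1.0      # fraction in [0, 1)
    return k + f


def approx_exp2(y: float) -> float:
    """Matching inverse estimate: 2**(k + f) ~= 2**k * (1 + f)."""
    k = math.floor(y)
    f = y - k
    return math.ldexp(1.0 + f, k)


def approx_mul(a: float, b: float) -> float:
    # A multiplication becomes an addition in the (approximate) log domain.
    return approx_exp2(approx_log2(a) + approx_log2(b))


def approx_div(a: float, b: float) -> float:
    # A division becomes a subtraction in the log domain.
    return approx_exp2(approx_log2(a) - approx_log2(b))


def approx_sqrt(a: float) -> float:
    # A square root becomes a halving (a one-bit shift in hardware).
    return approx_exp2(approx_log2(a) / 2.0)


if __name__ == "__main__":
    for a, b in [(3.0, 3.0), (1.7, 2.9), (10.0, 0.3)]:
        print(a, b, approx_mul(a, b), a * b, approx_div(a, b), a / b)
    print(approx_sqrt(2.0), math.sqrt(2.0))
```

In this sketch the costly multiplier, divider, and square-root datapaths reduce to an addition, a subtraction, and a halving, which is where the hardware savings of logarithmic units come from. The price is accuracy: with this approximation the product of 3 and 3 evaluates to 8, a relative error of about 11%, which is the kind of error that error-tolerant workloads are expected to absorb.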

This work was supported by grant PID2021-123041OB-I00 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe”, by a 2020 Leonardo Grant for Researchers and Cultural Creators from the BBVA Foundation (id PR2003_20/01), and by the CM under grant S2018/TCS-4423.


Notes

- 1.

- 2. Accessed from https://archive.ics.uci.edu on 2023/05/03 02:54:05.



Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Murillo, R., Mallasén, D., Del Barrio, A.A., Botella, G. (2023). PLAUs: Posit Logarithmic Approximate Units to Implement Low-Cost Operations with Real Numbers. In: Gustafson, J., Leong, S.H., Michalewicz, M. (eds) Next Generation Arithmetic. CoNGA 2023. Lecture Notes in Computer Science, vol 13851. Springer, Cham. https://doi.org/10.1007/978-3-031-32180-1_11


DOI: https://doi.org/10.1007/978-3-031-32180-1_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-32179-5

Online ISBN: 978-3-031-32180-1

eBook Packages: Computer Science, Computer Science (R0)