Abstract

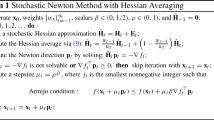

To solve large-scale optimization problems arising in machine learning, stochastic Newton methods have been proposed to reduce the cost of computing the Hessian and its inverse while still maintaining fast convergence. Recently, a second-order method named LiSSA [12] was proposed that approximates the Hessian inverse with a Taylor expansion and achieves (almost) linear running time in optimization. The approach is simple yet effective, but it can still be accelerated. In this paper, we use Chebyshev polynomials and their variants to approximate the Hessian inverse. Chebyshev polynomial approximation is widely acknowledged as the optimal polynomial approximation in the deterministic setting, in the sense that it minimizes the worst-case error over an interval containing the spectrum; in this paper we introduce it into the stochastic setting of Newton optimization. We provide a complete convergence analysis, and experiments on multiple benchmarks show that our proposed algorithms outperform LiSSA, which validates our theoretical insights.
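The abstract contrasts two ways of applying the Hessian inverse to a gradient. The following is a minimal deterministic sketch, assuming the Hessian is only available through a Hessian-vector product `hvp` (a hypothetical callable) and that its eigenvalues lie in a known interval [mu, L]. `neumann_solve` follows the truncated Taylor (Neumann) series H^{-1} = (1/L) Σ_i (I - H/L)^i on which LiSSA builds, and `chebyshev_solve` is the classical Chebyshev iteration for H x = g. Both spend one Hessian-vector product per step. This is not the authors' stochastic algorithm: in a stochastic Newton method each `hvp` call would use a sub-sampled Hessian, which this sketch does not model, and the toy matrix built in the hypothetical helper `compare` is an illustrative assumption.

```python
from math import sqrt
from typing import Callable, List, Tuple

Vector = List[float]
MatVec = Callable[[Vector], Vector]  # v -> H v (Hessian-vector product)


def neumann_solve(hvp: MatVec, g: Vector, L: float, steps: int) -> Vector:
    """LiSSA-style truncated Taylor (Neumann) series for H^{-1} g.

    Uses H^{-1} = (1/L) * sum_{i>=0} (I - H/L)^i, valid when the eigenvalues
    of H lie in (0, L], via the recursion v_j = g + v_{j-1} - H v_{j-1} / L.
    """
    v = list(g)
    for _ in range(steps):
        hv = hvp(v)
        v = [gi + vi - hvi / L for gi, vi, hvi in zip(g, v, hv)]
    return [vi / L for vi in v]


def chebyshev_solve(hvp: MatVec, g: Vector, mu: float, L: float, steps: int) -> Vector:
    """Chebyshev iteration for H x = g when the eigenvalues of H lie in [mu, L].

    After k steps the residual is T_k((theta - H) / delta) / T_k(theta / delta)
    applied to g, where T_k is the degree-k Chebyshev polynomial.
    """
    theta = (L + mu) / 2.0  # centre of the spectral interval
    delta = (L - mu) / 2.0  # half-width of the spectral interval
    sigma = theta / delta
    x = [0.0] * len(g)
    r = list(g)  # residual g - H x for the starting point x = 0
    rho = 1.0 / sigma
    d = [ri / theta for ri in r]  # first step is a scaled Richardson step
    for _ in range(steps):
        x = [xi + di for xi, di in zip(x, d)]
        hd = hvp(d)
        r = [ri - hdi for ri, hdi in zip(r, hd)]
        # Three-term Chebyshev recurrence for the next search direction.
        rho_next = 1.0 / (2.0 * sigma - rho)
        d = [rho_next * rho * di + (2.0 * rho_next / delta) * ri for di, ri in zip(d, r)]
        rho = rho_next
    return x


def householder(u: Vector, v: Vector) -> Vector:
    """Apply Q = I - 2 u u^T / (u^T u), a symmetric orthogonal matrix, to v."""
    s = 2.0 * sum(ui * vi for ui, vi in zip(u, v)) / sum(ui * ui for ui in u)
    return [vi - s * ui for ui, vi in zip(u, v)]


def compare(steps: int, n: int = 20, mu: float = 0.01, L: float = 1.0) -> Tuple[float, float]:
    """Errors of both solvers on the toy H = Q diag(eigs) Q, eigs spread over [mu, L]."""
    eigs = [mu + (L - mu) * i / (n - 1) for i in range(n)]
    u = [1.0 + 0.1 * i for i in range(n)]

    def hvp(v: Vector) -> Vector:
        w = householder(u, v)
        return householder(u, [lam * wi for lam, wi in zip(eigs, w)])

    g = [1.0] * n
    # Exact solution H^{-1} g = Q diag(1/eigs) Q g, used only to measure error.
    x_star = householder(u, [wi / lam for lam, wi in zip(eigs, householder(u, g))])

    def err(x: Vector) -> float:
        return sqrt(sum((a - b) ** 2 for a, b in zip(x, x_star)))

    return (err(neumann_solve(hvp, g, L, steps)),
            err(chebyshev_solve(hvp, g, mu, L, steps)))


if __name__ == "__main__":
    for k in (10, 30, 60):
        e_neu, e_cheb = compare(k)
        print(f"steps={k:3d}  neumann err={e_neu:.3e}  chebyshev err={e_cheb:.3e}")
```

With condition number kappa = L/mu, the Neumann recursion shrinks the worst-case error by a factor of about 1 - 1/kappa per Hessian-vector product, whereas the Chebyshev iteration approaches a per-step factor of (sqrt(kappa) - 1)/(sqrt(kappa) + 1). On the toy problem above (kappa = 100), the Chebyshev error after 30 steps is far smaller than the Neumann error, which illustrates why a Chebyshev approximation is an attractive replacement for the Taylor expansion.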

Supported by NSFC grant 11771148 and Science and Technology Commission of Shanghai Municipality grants 20511100200, 21JC1402500, and 22DZ2229014.


References

Blackard, J.A., Dean, D.J.: Comparative accuracies of artificial neural networks and discriminant analysis in predicting forest cover types from cartographic variables. Comput. Electron. Agric. 24(3), 131–151 (1999)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. In: Advances in Neural Information Processing Systems, pp. 1646–1654 (2014)

Erdogdu, M.A., Montanari, A.: Convergence rates of sub-sampled Newton methods. In: Advances in Neural Information Processing Systems, pp. 3034–3042 (2015)

Golub, G.H., van Loan, C.F.: Matrix Computations, 2nd edn. The Johns Hopkins University Press, Baltimore and London (1989)

Golub, G.H., Varga, R.S.: Chebyshev semi-iterative methods, successive overrelaxation iterative methods, and second order Richardson iterative methods. Numer. Math. 3, 147–156 (1961)

Gower, R., Goldfarb, D., Richtárik, P.: Stochastic block BFGS: squeezing more curvature out of data. In: International Conference on Machine Learning, pp. 1869–1878. PMLR (2016)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Advances in Neural Information Processing Systems, vol. 26, pp. 315–323 (2013)

LeCun, Y., Cortes, C.: The MNIST database of handwritten digits (1998). http://yann.lecun.com/exdb/mnist/

Lichman, M.: UCI machine learning repository (2013). http://archive.ics.uci.edu/ml

McCallum, A.: Real-sim (1997). https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html#real-sim

Moritz, P., Nishihara, R., Jordan, M.: A linearly-convergent stochastic L-BFGS algorithm. In: Artificial Intelligence and Statistics, pp. 249–258. PMLR (2016)

Agarwal, N., Bullins, B., Hazan, E.: Second-order stochastic optimization for machine learning in linear time. J. Mach. Learn. Res. 18, 1–40 (2017)

Pilanci, M., Wainwright, M.J.: Newton sketch: a linear-time optimization algorithm with linear-quadratic convergence. SIAM J. Optim. 27(1), 205–245 (2017)

Byrd, R.H., Chin, G.M., Neveitt, W., Nocedal, J.: On the use of stochastic Hessian information in optimization methods for machine learning. SIAM J. Optim. 21(3), 977–995 (2011)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Statist. 22, 400–407 (1951)

Shalev-Shwartz, S., Zhang, T.: Accelerated proximal stochastic dual coordinate ascent for regularized loss minimization. In: International Conference on Machine Learning, pp. 64–72. PMLR (2014)

Tropp, J.A.: User-friendly tail bounds for sums of random matrices. Found. Comput. Math. 12(4), 389–434 (2012)

Varga, R.S.: Matrix Iterative Analysis, 2nd edn. Springer, Berlin, Heidelberg (2000). https://doi.org/10.1007/978-3-642-05156-2

Acknowledgements

We would like to thank the anonymous reviewers for their constructive comments.



Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Sha, F., Pan, J. (2023). Accelerating Stochastic Newton Method via Chebyshev Polynomial Approximation. In: Kashima, H., Ide, T., Peng, WC. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2023. Lecture Notes in Computer Science, vol 13936. Springer, Cham. https://doi.org/10.1007/978-3-031-33377-4_40


DOI: https://doi.org/10.1007/978-3-031-33377-4_40


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-33376-7

Online ISBN: 978-3-031-33377-4

eBook Packages: Computer Science, Computer Science (R0)