Abstract

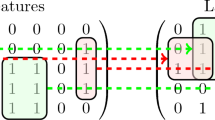

This paper proposes a new framework for learning a rule ensemble model that is both accurate and interpretable. A rule ensemble is an interpretable model based on the linear combination of weighted rules. In practice, we often face the trade-off between the accuracy and interpretability of rule ensembles. That is, a rule ensemble needs to include a sufficiently large number of weighted rules to maintain its accuracy, which harms its interpretability for human users. To avoid this trade-off and learn an interpretable rule ensemble without degrading accuracy, we introduce a new concept of interpretability, named local interpretability, which is evaluated by the total number of rules necessary to express individual predictions made by the model, rather than to express the model itself. Then, we propose a regularizer that promotes local interpretability and develop an efficient algorithm for learning a rule ensemble with the proposed regularizer by coordinate descent with local search. Experimental results demonstrated that our method learns rule ensembles that can explain individual predictions with fewer rules than the existing methods, including RuleFit, while maintaining comparable accuracy.
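The distinction between the size of the model and the number of rules behind one prediction can be illustrated with a minimal sketch. It assumes one plausible reading of the abstract: a prediction is the sum of the weights of the rules an instance satisfies, and the local count is the number of nonzero-weight rules that fire for that instance. The names `WeightedRule`, `score`, `global_size`, and `local_size`, as well as the toy rules and feature values, are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Instance = Dict[str, float]


@dataclass
class WeightedRule:
    """A rule (a predicate over an instance) paired with a real-valued weight."""
    name: str
    condition: Callable[[Instance], bool]
    weight: float


def score(rules: List[WeightedRule], x: Instance, bias: float = 0.0) -> float:
    """Linear combination of weighted rules: sum the weights of satisfied rules."""
    return bias + sum(r.weight for r in rules if r.condition(x))


def global_size(rules: List[WeightedRule]) -> int:
    """Number of rules needed to express the whole model (nonzero weights)."""
    return sum(1 for r in rules if r.weight != 0.0)


def local_size(rules: List[WeightedRule], x: Instance) -> int:
    """Number of nonzero-weight rules that fire for x (assumed local measure)."""
    return sum(1 for r in rules if r.weight != 0.0 and r.condition(x))


# Toy ensemble with made-up rules and weights, for illustration only.
rules = [
    WeightedRule("age > 40", lambda x: x["age"] > 40, 0.8),
    WeightedRule("income <= 30", lambda x: x["income"] <= 30, -0.5),
    WeightedRule("age <= 25 and income > 50",
                 lambda x: x["age"] <= 25 and x["income"] > 50, 0.6),
    WeightedRule("debt > 10", lambda x: x["debt"] > 10, -0.7),
]

x = {"age": 52, "income": 45, "debt": 3}

print("model size:", global_size(rules))
print("rules used for x:", local_size(rules, x))
print("score for x:", score(rules, x))
```

In this toy case the model contains four rules, yet only one of them contributes to the prediction for `x`. A regularizer that targets the local count would favor ensembles where this holds for most instances, even when the overall model stays large.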

Acknowledgement

We wish to thank Koji Maruhashi, Takuya Takagi, Ken Kobayashi, and Yuichi Ike for making a number of valuable suggestions. We also thank the anonymous reviewers for their insightful comments.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Kanamori, K. (2023). Learning Locally Interpretable Rule Ensemble. In: Koutra, D., Plant, C., Gomez Rodriguez, M., Baralis, E., Bonchi, F. (eds) Machine Learning and Knowledge Discovery in Databases: Research Track. ECML PKDD 2023. Lecture Notes in Computer Science, vol 14171. Springer, Cham. https://doi.org/10.1007/978-3-031-43418-1_22

DOI: https://doi.org/10.1007/978-3-031-43418-1_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-43417-4

Online ISBN: 978-3-031-43418-1

eBook Packages: Computer Science, Computer Science (R0)