Abstract

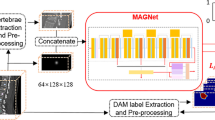

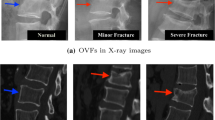

Hip fractures are a common cause of morbidity and mortality and are usually diagnosed from X-ray images in clinical routine. Deep learning has made promising progress in automatic hip fracture detection. However, for fractures in which the displacement is not obvious (i.e., non-displaced fractures), a single-view X-ray image provides only limited diagnostic information, and features from cross-view X-ray images (i.e., frontal and lateral views) must be integrated for an accurate diagnosis. Finding reliable and discriminative cross-view representations for automatic diagnosis nevertheless remains technically challenging. First, it is difficult to locate discriminative task-related features in each X-ray view because image-level classification labels provide only weak supervision. Second, it is hard to extract reliable complementary information from different X-ray views because the views are displaced relative to each other. To address these challenges, this paper presents a novel cross-view deformable transformer framework that models relations between critical representations in different views for non-displaced hip fracture identification. Specifically, we adopt a deformable self-attention module to localize discriminative task-related features in each X-ray view using only the image-level label. The located discriminative features are then used to explore correlated representations across views, with the query of the dominated view serving as guidance. Furthermore, we build a dataset of 768 hip cases, each with paired hip X-ray images (frontal and lateral views), to evaluate our framework on the task of classifying non-displaced fractures versus normal hips.
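To make the idea of query-guided cross-view attention concrete, the following is a minimal sketch in plain Python and is not the authors' implementation. It assumes generic scaled dot-product attention in which the queries come from one view and the keys and values come from the other. The function names, the toy token features, and the choice of the frontal view as the query source are all hypothetical, and the deformable sampling step described in the abstract is omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_view_attention(queries, keys, values):
    """Scaled dot-product attention with queries from one view and
    keys/values from the other view (illustrative assumption only)."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query token to every token of the other view.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        w = softmax(scores)
        # Weighted sum of the other view's value vectors.
        out = [sum(wj * v[d] for wj, v in zip(w, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Hypothetical toy features: 2 frontal-view tokens, 3 lateral-view tokens.
frontal_tokens = [[1.0, 0.0], [0.0, 1.0]]
lateral_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# The frontal view supplies the queries; the lateral view supplies keys and values.
fused, attn = cross_view_attention(frontal_tokens, lateral_tokens, lateral_tokens)
```

Each row of `attn` is a probability distribution over the lateral-view tokens, so every frontal-view token receives a weighted mixture of lateral-view features. A trained model would use learned projections for queries, keys, and values rather than the raw features used here.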

Acknowledgement

This work was supported by the National Key Research and Development Program of China (2019YFE0113900).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhu, Z. et al. (2023). Cross-View Deformable Transformer for Non-displaced Hip Fracture Classification from Frontal-Lateral X-Ray Pair. In: Greenspan, H., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2023. MICCAI 2023. Lecture Notes in Computer Science, vol 14225. Springer, Cham. https://doi.org/10.1007/978-3-031-43987-2_43

DOI: https://doi.org/10.1007/978-3-031-43987-2_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-43986-5

Online ISBN: 978-3-031-43987-2

eBook Packages: Computer Science, Computer Science (R0)