Abstract

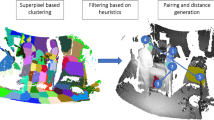

The digitization of surgical operating rooms (ORs) has gained significant traction in the scientific and medical communities. However, existing deep-learning methods for OR recognition tasks still require substantial quantities of annotated data. In this paper, we introduce a method for weakly supervised semantic segmentation of surgical operating rooms. Our method operates directly on 4D point cloud sequences from multiple ceiling-mounted RGB-D sensors and requires less than 0.01% of the data to be annotated. This is achieved by incorporating a self-supervised temporal prior that enforces semantic consistency across 4D point cloud video recordings. We show that refining these priors with learned semantic features can raise segmentation mIoU to 10% above existing works, achieving higher segmentation scores than baselines that use four times as many labels. Furthermore, the 3D semantic predictions from our method can be projected back into 2D images; we establish that these 2D predictions can be used to improve the performance of existing surgical phase recognition methods. Our method shows promise in automating 3D OR segmentation at a 20 times lower annotation cost than existing methods, demonstrating the potential to improve surgical scene understanding systems.
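The projection of 3D semantic predictions back into 2D images can be illustrated with a minimal sketch. It assumes a standard pinhole camera model with known intrinsics and extrinsics and is not the authors' implementation; the function name `project_labels_to_image` and all of its parameters are hypothetical. Each labeled point is transformed into the camera frame, projected to a pixel, and the nearest point per pixel determines that pixel's label.

```python
import numpy as np


def project_labels_to_image(points, labels, K, T_cam_world, height, width,
                            ignore_label=-1):
    """Render per-point labels into a 2D label map (hypothetical helper).

    points:      (N, 3) array of 3D points in the world frame
    labels:      (N,) integer class label per point
    K:           (3, 3) pinhole intrinsic matrix
    T_cam_world: (4, 4) rigid transform from world frame to camera frame
    """
    # Move the points into the camera frame using homogeneous coordinates.
    pts_h = np.hstack([points, np.ones((points.shape[0], 1))])
    pts_cam = (T_cam_world @ pts_h.T).T[:, :3]

    # Discard points behind (or on) the image plane.
    in_front = pts_cam[:, 2] > 1e-6
    pts_cam, lbl = pts_cam[in_front], labels[in_front]

    # Pinhole projection to integer pixel coordinates.
    uvw = (K @ pts_cam.T).T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)

    # Keep only projections that land inside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, lbl = u[inside], v[inside], pts_cam[inside, 2], lbl[inside]

    # Depth test: sort by pixel, then by depth, and keep the first
    # (nearest) point for every pixel that received at least one point.
    pix = v * width + u
    order = np.lexsort((z, pix))  # last key (pix) is the primary sort key
    _, first = np.unique(pix[order], return_index=True)
    nearest = order[first]

    label_map = np.full(height * width, ignore_label, dtype=np.int64)
    label_map[pix[nearest]] = lbl[nearest]
    return label_map.reshape(height, width)
```

Pixels that receive no point keep `ignore_label`. With sparse point clouds a real pipeline would likely need hole filling or splatting, which this sketch omits.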

Lennart Bastian and Daniel Derkacz-Bogner contributed equally to this work.

Acknowledgements

This work was funded by the German Federal Ministry of Education and Research (BMBF), grant numbers 16SV8088 and 13GW0236B. We additionally thank the J&J Robotics & Digital Solutions team for their support. Furthermore, we thank Ruiyang Li for supporting the point cloud annotation. Code and data can be found at: https://bastianlb.github.io/segmentOR/.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Bastian, L., Derkacz-Bogner, D., Wang, T.D., Busam, B., Navab, N. (2023). SegmentOR: Obtaining Efficient Operating Room Semantics Through Temporal Propagation. In: Greenspan, H., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2023. MICCAI 2023. Lecture Notes in Computer Science, vol 14228. Springer, Cham. https://doi.org/10.1007/978-3-031-43996-4_6

DOI: https://doi.org/10.1007/978-3-031-43996-4_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-43995-7

Online ISBN: 978-3-031-43996-4

eBook Packages: Computer Science, Computer Science (R0)