Abstract

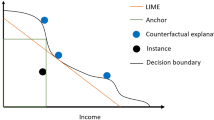

With the increasing adoption of Artificial Intelligence (AI) in companies' decision-making processes, developing systems that behave fairly and do not discriminate against specific groups of people becomes crucial. Reaching this objective requires a multidisciplinary approach that includes domain experts, data scientists, philosophers, and legal experts, to ensure complete accountability for algorithmic decisions. In such a context, Explainable AI (XAI) plays a key role in enabling professionals from different backgrounds to comprehend how automated decision-making processes work and, consequently, to identify fairness issues. This paper presents FairX, an innovative approach that uses Group-Contrastive (G-contrast) explanations to estimate whether different decision criteria apply among distinct population subgroups. FairX provides actionable insights through a comprehensive explanation of the decision-making process, enabling businesses to detect direct discrimination on the target variable and to choose the most appropriate fairness framework.

Notes

1. Compared to the notation in previous chapters, \(X=(R,Q)\), \(S=A\) and \(Y=Y\). Historical bias is generated using \(l_{hr} = 1.5\). Measurement bias is generated using \(l_{my} = 1.5\). For further details on the data generation framework, see github.com/rcrupiISP/ISParity and [5].

References

Agarwal, A., Agarwal, H.: A seven-layer model with checklists for standardising fairness assessment throughout the AI lifecycle. AI Ethics 1–16 (2023)

Aghaei, S., Azizi, M.J., Vayanos, P.: Learning optimal and fair decision trees for non-discriminative decision-making. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 1418–1426 (2019)

Alimonda, N., Castelnovo, A., Crupi, R., Mercorio, F., Mezzanzanica, M.: Preserving utility in fair top-k ranking with intersectional bias. In: Boratto, L., Faralli, S., Marras, M., Stilo, G. (eds.) BIAS 2023. CCIS, vol. 1840, pp. 59–73. Springer, Cham (2023). https://doi.org/10.1007/978-3-031-37249-0_5

Barocas, S., Hardt, M., Narayanan, A.: Fairness and Machine Learning. fairmlbook.org (2019). http://www.fairmlbook.org

Baumann, J., Castelnovo, A., Crupi, R., Inverardi, N., Regoli, D.: Bias on demand: a modelling framework that generates synthetic data with bias. In: Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, pp. 1002–1013 (2023)

Baumann, J., Castelnovo, A., Crupi, R., Inverardi, N., Regoli, D.: An open-source toolkit to generate biased datasets (2023)

Begley, T., Schwedes, T., Frye, C., Feige, I.: Explainability for fair machine learning. arXiv preprint arXiv:2010.07389 (2020)

Binns, R.: On the apparent conflict between individual and group fairness. In: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 514–524 (2020)

Cambria, E., Malandri, L., Mercorio, F., Mezzanzanica, M., Nobani, N.: A survey on XAI and natural language explanations. Inf. Process. Manag. 60(1), 103111 (2023)

Castelnovo, A., Cosentini, A., Malandri, L., Mercorio, F., Mezzanzanica, M.: FFTree: a flexible tree to handle multiple fairness criteria. Inf. Process. Manag. 59(6), 103099 (2022)

Castelnovo, A., et al.: BeFair: addressing fairness in the banking sector. In: 2020 IEEE International Conference on Big Data (Big Data), pp. 3652–3661. IEEE (2020)

Castelnovo, A., Crupi, R., Greco, G., Regoli, D., Penco, I.G., Cosentini, A.C.: The zoo of fairness metrics in machine learning (2021)

Castelnovo, A., Crupi, R., Greco, G., Regoli, D., Penco, I.G., Cosentini, A.C.: A clarification of the nuances in the fairness metrics landscape. Sci. Rep. 12(1), 4209 (2022)

Castelnovo, A., Malandri, L., Mercorio, F., Mezzanzanica, M., Cosentini, A.: Towards fairness through time. In: Kamp, M., et al. (eds.) ECML PKDD 2021. CCIS, vol. 1524, pp. 647–663. Springer, Cham (2022). https://doi.org/10.1007/978-3-030-93736-2_46

Corbett-Davies, S., Goel, S.: The measure and mismeasure of fairness: a critical review of fair machine learning. arXiv preprint arXiv:1808.00023 (2018)

Crupi, R., Castelnovo, A., Regoli, D., San Miguel Gonzalez, B.: Counterfactual explanations as interventions in latent space. Data Mining Knowl. Discov. 1–37 (2022)

Dieterich, W., Mendoza, C., Brennan, T.: COMPAS risk scales: demonstrating accuracy equity and predictive parity. Northpointe Inc. (2016)

Dwork, C., Hardt, M., Pitassi, T., Reingold, O., Zemel, R.: Fairness through awareness. In: Proceedings of the 3rd Innovations in Theoretical Computer Science Conference (ITCS), pp. 214–226 (2012)

Flores, A.W., Bechtel, K., Lowenkamp, C.T.: False positives, false negatives, and false analyses: a rejoinder to machine bias: there’s software used across the country to predict future criminals. and it’s biased against blacks. Fed. Probat. 80, 38 (2016)

Franklin, J.S., Bhanot, K., Ghalwash, M., Bennett, K.P., McCusker, J., McGuinness, D.L.: An ontology for fairness metrics. In: Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society, pp. 265–275 (2022)

Friedler, S.A., Scheidegger, C., Venkatasubramanian, S.: The (im)possibility of fairness: different value systems require different mechanisms for fair decision making. Commun. ACM 64(4), 136–143 (2021)

Grabowicz, P., Perello, N., Mishra, A.: Marrying fairness and explainability in supervised learning. In: 2022 ACM FAccT, pp. 1905–1916 (2022)

Green, T.K.: The future of systemic disparate treatment law. Berkeley J. Emp. Lab. L. 32, 395 (2011)

Hertweck, C., Heitz, C., Loi, M.: On the moral justification of statistical parity. In: Proceedings of the 2021 ACM FAccT, pp. 747–757 (2021)

Hilton, D.J.: Conversational processes and causal explanation. Psychol. Bull. 107(1), 65 (1990)

Hu, L., Immorlica, N., Vaughan, J.W.: The disparate effects of strategic manipulation. In: Proceedings of the Conference on Fairness, Accountability, and Transparency, pp. 259–268 (2019)

Jobin, A., Ienca, M., Vayena, E.: The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1(9), 389–399 (2019)

Lipton, P.: Contrastive explanation. R. Inst. Philos. Suppl. 27, 247–266 (1990)

Lundberg, S.M., Lee, S.I.: A unified approach to interpreting model predictions. In: Advances in Neural Information Processing Systems, vol. 30 (2017)

Madaio, M.A., Stark, L., Wortman Vaughan, J., Wallach, H.: Co-designing checklists to understand organizational challenges and opportunities around fairness in AI. In: 2020 CHI Conference on HFCS, pp. 1–14 (2020)

Makhlouf, K., Zhioua, S., Palamidessi, C.: On the applicability of machine learning fairness notions. ACM SIGKDD 23(1), 14–23 (2021)

Malandri, L., Mercorio, F., Mezzanzanica, M., Nobani, N.: ConvXAI: a system for multimodal interaction with any black-box explainer. Cogn. Comput. 15(2), 613–644 (2023)

Malandri, L., Mercorio, F., Mezzanzanica, M., Nobani, N., Seveso, A.: ContrXT: generating contrastive explanations from any text classifier. Inf. Fusion 81, 103–115 (2022)

Malandri, L., Mercorio, F., Mezzanzanica, M., Nobani, N., Seveso, A.: The good, the bad, and the explainer: a tool for contrastive explanations of text classifiers. In: Raedt, L.D. (ed.) Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI 2022, Vienna, Austria, 23–29 July 2022, pp. 5936–5939. ijcai.org (2022). https://doi.org/10.24963/ijcai.2022/858

Malandri, L., Mercorio, F., Mezzanzanica, M., Seveso, A.: Model-contrastive explanations through symbolic reasoning. Decis. Support Syst. 114040 (2023). https://doi.org/10.1016/j.dss.2023.114040. https://www.sciencedirect.com/science/article/pii/S016792362300115X

Manerba, M.M., Guidotti, R.: Fairshades: fairness auditing via explainability in abusive language detection systems. In: 2021 IEEE Third International Conference on Cognitive Machine Intelligence (CogMI), pp. 34–43. IEEE (2021)

Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., Galstyan, A.: A survey on bias and fairness in machine learning. ACM Comput. Surv. (CSUR) 54(6), 1–35 (2021)

Miller, C.C.: Can an algorithm hire better than a human. The New York Times, vol. 25 (2015)

Miller, C.C.: When algorithms discriminate. The New York Times, vol. 9 (2015)

Miller, T.: Explanation in artificial intelligence: insights from the social sciences. Artif. Intell. 267, 1–38 (2019)

Mukerjee, A., Biswas, R., Deb, K., Mathur, A.P.: Multi-objective evolutionary algorithms for the risk-return trade-off in bank loan management. Int. Trans. Oper. Res. 9(5), 583–597 (2002)

Ribeiro, M.T., Wu, T., Guestrin, C., Singh, S.: Beyond accuracy: behavioral testing of NLP models with checklist. arXiv preprint arXiv:2005.04118 (2020)

Ruf, B., Detyniecki, M.: Towards the right kind of fairness in AI. arXiv preprint arXiv:2102.08453 (2021)

Ryan, M., Stahl, B.C.: Artificial intelligence ethics guidelines for developers and users: clarifying their content and normative implications. J. Inf. Commun. Ethics Soc. 19(1), 61–86 (2020)

Saxena, N.A.: Perceptions of fairness. In: Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, pp. 537–538 (2019)

Shin, D., Park, Y.J.: Role of fairness, accountability, and transparency in algorithmic affordance. Comput. Hum. Behav. 98, 277–284 (2019)

Speicher, T., et al.: A unified approach to quantifying algorithmic unfairness: measuring individual & group unfairness via inequality indices. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 2239–2248 (2018)

Sweeney, L.: Discrimination in online ad delivery. Commun. ACM 56(5), 44–54 (2013)

Tadmor, C.T., Hong, Y.Y., Chao, M.M., Wiruchnipawan, F., Wang, W.: Multicultural experiences reduce intergroup bias through epistemic unfreezing. J. Pers. Soc. Psychol. 103(5), 750 (2012)

Van Bouwel, J., Weber, E.: Remote causes, bad explanations? J. Theory Soc. Behav. 32(4), 437–449 (2002)

Verma, S., Rubin, J.: Fairness definitions explained. In: 2018 IEEE/ACM International Workshop on Software Fairness (FairWare), pp. 1–7. IEEE (2018)

Wahl, B., Cossy-Gantner, A., Germann, S., Schwalbe, N.R.: Artificial intelligence (AI) and global health: how can AI contribute to health in resource-poor settings? BMJ Glob. Health 3(4), e000798 (2018)

Wang, H.E., et al.: A bias evaluation checklist for predictive models and its pilot application for 30-day hospital readmission models. J. Am. Med. Inform. Assoc. 29(8), 1323–1333 (2022)

Warner, R., Sloan, R.H.: Making artificial intelligence transparent: fairness and the problem of proxy variables. CJE 40(1), 23–39 (2021)

Willborn, S.L.: The disparate impact model of discrimination: theory and limits. Am. UL Rev. 34, 799 (1984)

Zafar, M.B., Valera, I., Gomez-Rodriguez, M., Gummadi, K.P.: Fairness constraints: a flexible approach for fair classification. J. Mach. Learn. Res. 20(1), 2737–2778 (2019)

Zafar, M.B., Valera, I., Rodriguez, M.G., Gummadi, K.P.: Fairness constraints: mechanisms for fair classification. In: Artificial Intelligence and Statistics, pp. 962–970. PMLR (2017)

Zhou, J., Chen, F., Holzinger, A.: Towards explainability for AI fairness. In: Holzinger, A., Goebel, R., Fong, R., Moon, T., Müller, K.R., Samek, W. (eds.) xxAI 2020. LNCS, vol. 13200, pp. 375–386. Springer, Cham (2022). https://doi.org/10.1007/978-3-031-04083-2_18

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Castelnovo, A., Inverardi, N., Malandri, L., Mercorio, F., Mezzanzanica, M., Seveso, A. (2023). Leveraging Group Contrastive Explanations for Handling Fairness. In: Longo, L. (eds) Explainable Artificial Intelligence. xAI 2023. Communications in Computer and Information Science, vol 1903. Springer, Cham. https://doi.org/10.1007/978-3-031-44070-0_17

DOI: https://doi.org/10.1007/978-3-031-44070-0_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44069-4

Online ISBN: 978-3-031-44070-0

eBook Packages: Computer Science, Computer Science (R0)