Abstract

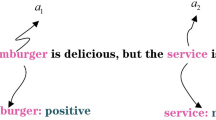

Aspect-based sentiment analysis (ABSA) is a challenging yet critical task for recognizing the sentiment expressed toward specific aspects in text, with applications to social media posts, product reviews, and movie comments. Many researchers are working to develop more powerful sentiment analysis models. Most existing models follow a fine-tuning paradigm built on pre-trained language models (PLMs), which uses only the encoder parameters of the PLM. However, this approach fails to effectively leverage the prior knowledge encoded in PLMs. To address this issue, we propose a novel approach, the Target Word Transferred Language Model for aspect-based sentiment analysis (WordTransABSA), which explores the potential of the PLM's own pre-training scheme. WordTransABSA is an encoder-decoder architecture built on top of the Masked Language Model (MLM) of Bidirectional Encoder Representations from Transformers (BERT). During training, we reformulate the generic fine-tuning setup of previous models as a masked language modeling task, following the original BERT pre-training paradigm. WordTransABSA takes full advantage of the versatile linguistic knowledge of the PLM, achieving accuracy competitive with recent baselines, especially in data-insufficient scenarios. Our code is publicly available on GitHub (https://github.com/albert-jin/WordTransABSA).
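The central idea of the abstract, casting aspect-level sentiment classification as filling in a [MASK] token and training with the same cross-entropy used in BERT's masked language modeling, can be sketched in a few lines. This is a minimal illustration in the style of cloze-prompt classification, not WordTransABSA's released code: the template `"The {aspect} is [MASK]."`, the label words in `VERBALIZER`, and the toy logits are all hypothetical. In a real system, `mask_logits` would be the vocabulary scores produced by BERT's MLM head at the [MASK] position.

```python
import math

MASK = "[MASK]"

# Hypothetical verbalizer: each sentiment label is represented by a few
# affective label words that the MLM head may predict at the [MASK] position.
VERBALIZER = {
    "positive": ["good", "great"],
    "neutral": ["ok", "fine"],
    "negative": ["bad", "terrible"],
}


def build_prompt(sentence: str, aspect: str) -> str:
    """Append a (hypothetical) cloze template asking for an affective word about the aspect."""
    return f"{sentence} The {aspect} is {MASK}."


def log_softmax(scores: dict[str, float]) -> dict[str, float]:
    """Numerically stable log-softmax over a {token: logit} mapping."""
    m = max(scores.values())
    log_z = m + math.log(sum(math.exp(s - m) for s in scores.values()))
    return {tok: s - log_z for tok, s in scores.items()}


def label_scores(mask_logits: dict[str, float], verbalizer=VERBALIZER) -> dict[str, float]:
    """Score each label by the mean log-probability of its label words at the mask position."""
    log_probs = log_softmax(mask_logits)
    return {
        label: sum(log_probs[w] for w in words) / len(words)
        for label, words in verbalizer.items()
    }


def predict(mask_logits: dict[str, float], verbalizer=VERBALIZER) -> str:
    """Return the label whose label words the MLM head finds most likely."""
    scores = label_scores(mask_logits, verbalizer)
    return max(scores, key=scores.get)


def mlm_loss(mask_logits: dict[str, float], gold_word: str) -> float:
    """Cross-entropy of the gold label word at the mask position (the MLM training signal)."""
    return -log_softmax(mask_logits)[gold_word]


if __name__ == "__main__":
    prompt = build_prompt("The pasta was cold but the staff were friendly.", "staff")
    # Toy logits standing in for an MLM head's output at the [MASK] position.
    logits = {"good": 3.0, "great": 2.5, "ok": 1.0, "fine": 0.5,
              "bad": -1.0, "terrible": -2.0, "the": 0.0}
    print(prompt)
    print(predict(logits))  # positive
    print(round(mlm_loss(logits, "good"), 3))
```

Because `mlm_loss` is the same objective a masked language model is pre-trained with, training this way reuses the pre-trained output layer rather than a freshly initialized classifier head; this matches the intuition the abstract gives for the approach's strength in data-insufficient scenarios.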

Acknowledgements

This work was funded by the Natural Science Basic Research Plan in Shaanxi Province of China (Project Code: 2021JQ-061). It was conducted by the first two authors, Weiqiang Jin and Biao Zhao, during their research at Xi'an Jiaotong University. The corresponding author is Biao Zhao. We thank the action editors and anonymous reviewers for their comments and recommendations, which improved the paper.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Jin, W., Zhao, B., Liu, C., Zhang, H., Jiang, M. (2023). Using Masked Language Modeling to Enhance BERT-Based Aspect-Based Sentiment Analysis for Affective Token Prediction. In: Iliadis, L., Papaleonidas, A., Angelov, P., Jayne, C. (eds) Artificial Neural Networks and Machine Learning – ICANN 2023. ICANN 2023. Lecture Notes in Computer Science, vol 14263. Springer, Cham. https://doi.org/10.1007/978-3-031-44204-9_44

DOI: https://doi.org/10.1007/978-3-031-44204-9_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44203-2

Online ISBN: 978-3-031-44204-9

eBook Packages: Computer Science, Computer Science (R0)