Abstract

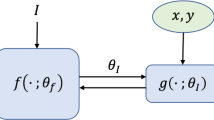

Federated learning (FL) is a decentralized learning paradigm in which multiple clients collaborate to train a global model. However, the generalization of the global model is often degraded by data heterogeneity across clients. Personalized Federated Learning (PFL) aims to develop models tailored to local tasks, overcoming data heterogeneity from the clients’ perspective. In this paper, we introduce Prototype Contrastive Learning into FL (FedPCL) to learn a global base encoder that aggregates the knowledge learned by local models not only in the parameter space but also in the embedding space. Furthermore, since some clients have limited resources, we employ two prototype settings: multiple prototypes and a single prototype. The federated process is combined with the Expectation-Maximization (EM) algorithm: in each iteration, clients perform the E-step to compute prototypes and the M-step to update model parameters by minimizing the ProtoNCE-M (multiple-prototype) or ProtoNCE-S (single-prototype) loss. Iterating these steps drives the global model to convergence. Finally, the global base encoder, which extracts more compact representations, is customized to each local task to achieve personalization. Experimental results demonstrate consistent performance gains as well as effective personalization.
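The EM-style round described above (clients compute prototypes in the E-step, minimize a ProtoNCE-style loss in the M-step, and the server aggregates parameters) can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation, and several details are assumptions: prototypes are taken to be k-means centroids of embeddings, as in Prototypical Contrastive Learning (Li et al., 2020); the loss shown is only the prototype term of ProtoNCE with a fixed temperature `tau` instead of per-cluster concentrations; "multiple prototypes" is read as averaging that term over several clusterings of different granularity and "single prototype" as using one clustering; and parameter-space aggregation is FedAvg-style weighted averaging. The function names `kmeans`, `proto_nce`, `proto_nce_multi`, and `fedavg` are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalize(v: Sequence[float]) -> Vector:
    # L2-normalize so dot products become cosine similarities (zero vectors are left unchanged)
    n = math.sqrt(dot(v, v)) or 1.0
    return [x / n for x in v]


def kmeans(points: List[Vector], k: int, iters: int = 20, seed: int = 0) -> Tuple[List[Vector], List[int]]:
    """Assumed E-step: cluster embeddings; the centroids act as prototypes."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assign each embedding to its nearest centroid (squared Euclidean distance)
        for i, p in enumerate(points):
            assign[i] = min(range(k), key=lambda c: sum((x - y) ** 2 for x, y in zip(p, centroids[c])))
        # Recompute each centroid as the mean of its members; keep the old one if a cluster is empty
        for c in range(k):
            members = [points[i] for i in range(len(points)) if assign[i] == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assign


def proto_nce(v: Vector, prototypes: List[Vector], pos: int, tau: float = 0.1) -> float:
    """Prototype term of a ProtoNCE-style loss for one embedding and one clustering:
    -log(exp(v.c_pos / tau) / sum_j exp(v.c_j / tau)), with a fixed temperature tau (assumption)."""
    v = normalize(v)
    logits = [dot(v, normalize(c)) / tau for c in prototypes]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - logits[pos]


def proto_nce_multi(v: Vector, clusterings: List[Tuple[List[Vector], int]], tau: float = 0.1) -> float:
    """Assumed multiple-prototype setting: average the prototype term over several clusterings.
    With a single clustering this reduces to proto_nce (the assumed single-prototype setting)."""
    return sum(proto_nce(v, protos, pos, tau) for protos, pos in clusterings) / len(clusterings)


def fedavg(client_params: List[Vector], client_sizes: List[int]) -> Vector:
    """Server step: average flattened client parameters weighted by local dataset size (FedAvg-style)."""
    total = sum(client_sizes)
    return [sum(w[i] * n for w, n in zip(client_params, client_sizes)) / total
            for i in range(len(client_params[0]))]
```

For example, with `protos = [[1.0, 0.0], [0.0, 1.0]]`, `proto_nce([1.0, 0.0], protos, 0)` is close to zero because the embedding coincides with its assigned prototype, while assigning it to the other prototype yields a loss of about `1 / tau`. In a full system the M-step would backpropagate this loss through the encoder with an autodiff framework; the sketch only evaluates the loss, which keeps it dependency-free.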

Acknowledgment

This work was supported in part by the National Natural Science Foundation of China under Grant 62076179.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Deng, S., Yang, L. (2023). Prototype Contrastive Learning for Personalized Federated Learning. In: Iliadis, L., Papaleonidas, A., Angelov, P., Jayne, C. (eds) Artificial Neural Networks and Machine Learning – ICANN 2023. ICANN 2023. Lecture Notes in Computer Science, vol 14256. Springer, Cham. https://doi.org/10.1007/978-3-031-44213-1_44

DOI: https://doi.org/10.1007/978-3-031-44213-1_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44212-4

Online ISBN: 978-3-031-44213-1

eBook Packages: Computer Science, Computer Science (R0)