Abstract

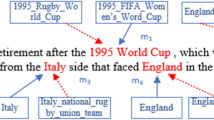

Graph-based collective entity linking methods face a common challenge: given mentions and their candidate entities, they must build graphs that model the correlation between the linking decisions of different mentions. In this paper, we propose three ideas: (i) build subgraphs from a subset of the mentions rather than from all mentions in the document, to improve computational efficiency; (ii) perform joint disambiguation over the context and the knowledge base (KB); and (iii) identify closely related knowledge in the KB. Building on these ideas, we propose EL-Graph, which addresses the challenges of collective methods through (i) an attention mechanism, which selects the mentions of a document with low attention scores to form a subgraph, improving computational efficiency; (ii) joint disambiguation, which connects the mention context and the KB into a joint graph and uses graph neural networks to gather and propagate messages that update the representations of both kinds of nodes simultaneously; and (iii) relevance scoring, which computes similarity to estimate the importance of each KB node relative to the given context. We evaluate our model on a publicly available dataset and show its effectiveness.
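
The three components can be illustrated with a toy sketch. This is not the authors' EL-Graph implementation: the lowest-k selection rule, cosine similarity as the relevance measure, clamping negative relevance to zero, and a parameter-free weighted mean aggregation standing in for a trained graph neural network are all assumptions made for illustration, and every function and node name (`select_mentions`, `relevance_scores`, `message_pass`, `kb:Paris_city`, ...) is hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def select_mentions(attention: Dict[str, float], k: int) -> List[str]:
    """(i) Keep the k mentions with the LOWEST attention scores (assumed rule)."""
    return sorted(attention, key=lambda m: attention[m])[:k]


def relevance_scores(kb_emb: Dict[str, Vector], context: Vector) -> Dict[str, float]:
    """(iii) Score each KB node by cosine similarity to the context embedding,
    clamped at 0 so that weights stay non-negative (an assumption)."""
    return {node: max(0.0, cosine(vec, context)) for node, vec in kb_emb.items()}


def message_pass(
    features: Dict[str, Vector],
    edges: List[Tuple[str, str]],
    weight: Dict[str, float],
) -> Dict[str, Vector]:
    """(ii) One round of weighted mean aggregation over an undirected joint graph.

    Each node averages its own vector (self-loop, weight 1.0) with its
    neighbours' vectors; a neighbour's message is scaled by weight[neighbour],
    defaulting to 1.0 for nodes without a relevance score (e.g. mentions).
    """
    neighbours: Dict[str, List[str]] = {n: [] for n in features}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    updated: Dict[str, Vector] = {}
    for node, vec in features.items():
        total = list(vec)
        norm = 1.0
        for nb in neighbours[node]:
            w = weight.get(nb, 1.0)
            total = [t + w * x for t, x in zip(total, features[nb])]
            norm += w
        updated[node] = [t / norm for t in total]
    return updated


# Toy usage with made-up attention scores and 2-d embeddings.
mention_emb = {"Paris": [0.5, 0.5], "Hilton": [0.0, 1.0]}
attention = {"Paris": 0.2, "Hilton": 0.9}
kept = select_mentions(attention, k=1)  # ['Paris']

kb = {"kb:Paris_city": [1.0, 0.0], "kb:Paris_Hilton": [0.0, 1.0]}
rel = relevance_scores(kb, context=[1.0, 0.0])  # city -> 1.0, Hilton -> 0.0

# Joint graph: kept mention nodes plus their candidate KB nodes.
features = {m: mention_emb[m] for m in kept}
features.update(kb)
edges = [("Paris", "kb:Paris_city"), ("Paris", "kb:Paris_Hilton")]
updated = message_pass(features, edges, rel)
print(updated["Paris"])  # [0.75, 0.25]
```

In this toy run the candidate judged irrelevant to the context (relevance 0.0) sends no message, so the mention's updated vector moves only toward the relevant candidate. A real system would learn the aggregation weights rather than use a fixed mean.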

K. Wang and Y. Xia—Equal contribution.

Acknowledgments

This work was supported by Project 62276178 of the National Natural Science Foundation of China, the National Key R&D Program of China under Grant No. 2020AAA0108600, and the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, K., Xia, Y., Kong, F. (2023). Collective Entity Linking with Joint Subgraphs. In: Liu, F., Duan, N., Xu, Q., Hong, Y. (eds) Natural Language Processing and Chinese Computing. NLPCC 2023. Lecture Notes in Computer Science, vol 14303. Springer, Cham. https://doi.org/10.1007/978-3-031-44696-2_14

DOI: https://doi.org/10.1007/978-3-031-44696-2_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44695-5

Online ISBN: 978-3-031-44696-2

eBook Packages: Computer Science, Computer Science (R0)