Abstract

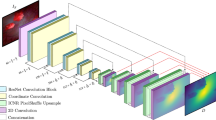

Colorectal cancer remains one of the deadliest cancers in the world. In recent years, computer-aided methods have aimed to enhance cancer screening and to improve the quality and availability of colonoscopies by automating sub-tasks. One such task is predicting depth from monocular video frames, which can assist endoscopic navigation. Hardware constraints mean that ground-truth depth cannot be acquired during standard in-vivo colonoscopy. Two approaches have therefore aimed to circumvent the need for labeled real training data: supervised methods trained on labeled synthetic data, and self-supervised methods trained on unlabeled real data. However, self-supervised methods depend on unreliable loss functions that struggle with edges, self-occlusion, and lighting inconsistency. Methods trained on synthetic data can provide accurate depth for synthetic geometries. However, they use no geometric supervisory signal from real data, and they overfit to synthetic anatomies and properties. This work proposes a novel approach that leverages both labeled synthetic and unlabeled real data. Previous domain adaptation methods indiscriminately force the distributions of the two input domains to coincide. We instead focus on the end task, depth prediction, and translate between the input domains only the information essential to that task. Our approach yields more robust and accurate depth maps of real colonoscopy sequences. The project is available at https://github.com/anitarau/Domain-Gap-Reduction-Endoscopy.
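The weakness of self-supervised losses under lighting inconsistency can be made concrete. Self-supervised depth methods typically warp a neighboring frame into the target view using the predicted depth and camera pose, then penalize the photometric difference between the target and the reconstruction. The sketch below assumes a formulation common in self-supervised monocular depth work: a weighted combination of structural dissimilarity (SSIM over 3x3 windows) and L1 error, with weight alpha = 0.85. This is not necessarily the loss used in this paper or in any specific prior method. The warping step is omitted, and the reconstructed frame is taken as given. The function names `photometric_loss`, `ssim_dissimilarity`, and `_box3` are hypothetical. The sketch shows that if only a global brightness scaling separates the two frames, the loss is still nonzero even though the geometry is identical. Such a scaling can occur in endoscopy because the light source moves with the camera.

```python
import numpy as np


def _box3(x: np.ndarray) -> np.ndarray:
    """3x3 mean filter with reflection padding; output has the same shape as x."""
    padded = np.pad(x, 1, mode="reflect")
    h, w = x.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0


def ssim_dissimilarity(x: np.ndarray, y: np.ndarray,
                       c1: float = 0.01 ** 2, c2: float = 0.03 ** 2) -> np.ndarray:
    """Per-pixel (1 - SSIM) / 2 over 3x3 windows, clipped to [0, 1]."""
    mu_x, mu_y = _box3(x), _box3(y)
    sigma_x = _box3(x * x) - mu_x * mu_x
    sigma_y = _box3(y * y) - mu_y * mu_y
    sigma_xy = _box3(x * y) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * sigma_xy + c2)
    den = (mu_x * mu_x + mu_y * mu_y + c1) * (sigma_x + sigma_y + c2)
    return np.clip((1 - num / den) / 2, 0.0, 1.0)


def photometric_loss(target, reconstructed, alpha: float = 0.85) -> float:
    """Assumed SSIM + L1 reconstruction loss, averaged over all pixels.

    `reconstructed` stands in for a neighboring frame already warped into the
    target view with predicted depth and pose (the warping is not shown here).
    """
    target = np.asarray(target, dtype=np.float64)
    reconstructed = np.asarray(reconstructed, dtype=np.float64)
    ssim_term = ssim_dissimilarity(target, reconstructed)
    l1_term = np.abs(target - reconstructed)
    return float(np.mean(alpha * ssim_term + (1 - alpha) * l1_term))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frame = rng.uniform(0.2, 0.7, size=(32, 32))  # toy grayscale frame
    print(photometric_loss(frame, frame))         # perfect reconstruction
    print(photometric_loss(frame, 1.2 * frame))   # same geometry, brighter lighting
```

The second call uses the same underlying geometry, yet it produces a nonzero loss. A depth network trained with such a loss can therefore be pushed to distort depth to explain illumination changes.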

A. Rau and B. Bhattarai—Project conducted while at University College London.

Acknowledgements

This work was supported by the Wellcome/EPSRC Centre for Interventional and Surgical Sciences (WEISS) [203145Z/16/Z]; the Engineering and Physical Sciences Research Council (EPSRC) [EP/P027938/1, EP/R004080/1, EP/P012841/1]; the Royal Academy of Engineering Chair in Emerging Technologies scheme; and the EndoMapper project by Horizon 2020 FET (GA 863146). All datasets used in this work are publicly available and linked in this manuscript. The code for this project is publicly available on GitHub. For the purpose of open access, the author has applied a CC BY public copyright license to any author-accepted manuscript version arising from this submission.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Rau, A., Bhattarai, B., Agapito, L., Stoyanov, D. (2023). Task-Guided Domain Gap Reduction for Monocular Depth Prediction in Endoscopy. In: Bhattarai, B., et al. Data Engineering in Medical Imaging. DEMI 2023. Lecture Notes in Computer Science, vol 14314. Springer, Cham. https://doi.org/10.1007/978-3-031-44992-5_11

DOI: https://doi.org/10.1007/978-3-031-44992-5_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44991-8

Online ISBN: 978-3-031-44992-5

eBook Packages: Computer Science, Computer Science (R0)