Abstract

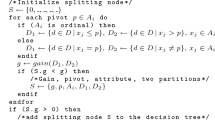

Research on interpretable machine learning has focused mainly on explaining supervised approaches. However, unsupervised approaches may also benefit from considering interpretability. Whereas existing clustering methods only assign records to clusters without justifying the partitioning, we propose tree-based clustering methods that offer an interpretable data partitioning through a shallow decision tree. These decision trees explain cluster assignments through short, understandable split conditions. Experiments on synthetic and real datasets show that the proposed methods are more effective than both traditional and interpretable clustering approaches in terms of standard evaluation measures and runtime. Finally, a case study with human participants demonstrates the effectiveness of the interpretable clustering trees returned by the proposed method.
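The idea of explaining cluster membership through short split conditions can be illustrated with a minimal sketch. It is not the method proposed in the paper: it assumes a generic greedy procedure that picks, at each node, the axis-parallel threshold giving the largest reduction in the sum of squared errors. The names `sse`, `best_split`, `build`, `max_depth`, and `min_samples`, as well as the toy data, are hypothetical and chosen only for illustration.

```python
from statistics import fmean


def sse(rows):
    """Sum of squared distances of the rows from their per-feature mean."""
    if not rows:
        return 0.0
    means = [fmean(col) for col in zip(*rows)]
    return sum((v - m) ** 2 for row in rows for v, m in zip(row, means))


def best_split(rows, min_samples):
    """Return (gain, feature, threshold) of the best axis-parallel split, or None."""
    best = None
    parent = sse(rows)
    for f in range(len(rows[0])):
        values = sorted({row[f] for row in rows})
        # Candidate thresholds are midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [r for r in rows if r[f] <= thr]
            right = [r for r in rows if r[f] > thr]
            if len(left) < min_samples or len(right) < min_samples:
                continue
            gain = parent - sse(left) - sse(right)
            if best is None or gain > best[0]:
                best = (gain, f, thr)
    return best


def build(rows, names, max_depth=2, min_samples=2, path=()):
    """Recursively split the rows; each leaf is one cluster described by its path."""
    split = best_split(rows, min_samples) if max_depth > 0 else None
    if split is None or split[0] <= 0:
        return [(" and ".join(path) or "all records", rows)]
    _, f, thr = split
    left = [r for r in rows if r[f] <= thr]
    right = [r for r in rows if r[f] > thr]
    return (
        build(left, names, max_depth - 1, min_samples, path + (f"{names[f]} <= {thr:g}",))
        + build(right, names, max_depth - 1, min_samples, path + (f"{names[f]} > {thr:g}",))
    )


# Toy data (hypothetical): two well-separated groups in two dimensions.
data = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
clusters = build(data, names=("x", "y"), max_depth=1)
for rule, members in clusters:
    print(f"{rule}: {len(members)} records")
```

On this toy data the sketch produces two leaves, each described by a single condition on `x`. Limiting `max_depth` keeps every cluster description to a handful of conditions, which is the sense in which a shallow tree stays readable.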

Notes

- 1.

After preliminary experiments, we discarded evaluation measures for regressors, as they assess the performance of the regressor rather than the separation of the data.

- 2.

- 3.

- 4.

- 5.

Details of the tested parameter values are available in the repository. However, since the objective is interpretable clustering, we do not search for more than 12 clusters or for trees deeper than 10. We also remark that designing strategies to identify good values for \(max\_clusters\), \(max\_depth\), \(min\_sample\), and \(\varepsilon\) is outside the scope of this study. We leave this task for a future study.

- 6.

Results similar to those reported are obtained with the best parameters w.r.t. other measures.

- 7.

- 8.

All participants provided written informed consent and received no monetary rewards.

Acknowledgment

This work is partially supported by the EU NextGenerationEU programme under the funding schemes PNRR-PE-AI FAIR (Future Artificial Intelligence Research), PNRR-SoBigData.it - Strengthening the Italian RI for Social Mining and Big Data Analytics - Prot. IR0000013, H2020-INFRAIA-2019-1: Res. Infr. G.A. 871042 SoBigData++, G.A. 761758 Humane AI, G.A. 952215 TAILOR, ERC-2018-ADG G.A. 834756 XAI, G.A. 101070416 Green.Dat.AI and CHIST-ERA-19-XAI-010 SAI.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Guidotti, R., Landi, C., Beretta, A., Fadda, D., Nanni, M. (2023). Interpretable Data Partitioning Through Tree-Based Clustering Methods. In: Bifet, A., Lorena, A.C., Ribeiro, R.P., Gama, J., Abreu, P.H. (eds) Discovery Science. DS 2023. Lecture Notes in Computer Science, vol 14276. Springer, Cham. https://doi.org/10.1007/978-3-031-45275-8_33

DOI: https://doi.org/10.1007/978-3-031-45275-8_33

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-45274-1

Online ISBN: 978-3-031-45275-8

eBook Packages: Computer Science, Computer Science (R0)