Abstract

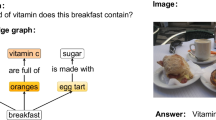

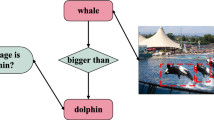

This paper focuses on cross-modality multi-hop visual questions, which require multi-hop reasoning over knowledge drawn from multiple modalities, such as images and text. Traditional models lack cross-modality reasoning ability and therefore struggle to make correct predictions on such questions. To solve this problem, we propose a new knowledge-enhanced inferential framework. We first build a reasoning graph that captures the topological relations between the objects in the given image and the logical relations between the entities corresponding to these objects. To align the visual objects with the textual entities, we design a cross-modality retriever that draws on an external multimodal knowledge graph. Using the logical and topological relations on the graph, we derive the answer by decomposing a complex multi-hop question into a series of attention-based reasoning steps, where the result of each step serves as the context for the next. Linking the results of all steps forms an evidence chain that leads to the answer. Extensive experiments on the popular KVQA dataset demonstrate the effectiveness of our approach.
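The step-by-step reasoning described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the graph, the node vectors, the per-hop query vectors, and the function names (`reasoning_step`, `multi_hop`) are all hypothetical, and the update rule (reachability from the previous attention multiplied by a softmax relevance score) is only one simple way to let each step's result act as context for the next.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def reasoning_step(prev_attn, adjacency, node_vecs, query):
    """One hop: move attention along edges, re-weighted by query relevance."""
    n = len(node_vecs)
    # Attention mass that can reach node j from the previously attended nodes.
    reach = [sum(prev_attn[i] * adjacency[i][j] for i in range(n)) for j in range(n)]
    # Relevance of every node to this hop's (hypothetical) sub-question vector.
    relevance = softmax([dot(query, v) for v in node_vecs])
    weights = [r * s for r, s in zip(reach, relevance)]
    total = sum(weights)
    if total == 0.0:
        return relevance  # nothing reachable: fall back to relevance alone
    return [w / total for w in weights]

def multi_hop(start_attn, adjacency, node_vecs, hop_queries, names):
    """Chain the hops; the most attended node per hop forms the evidence chain."""
    attn = start_attn
    chain = []
    for query in hop_queries:
        attn = reasoning_step(attn, adjacency, node_vecs, query)
        best = max(range(len(attn)), key=attn.__getitem__)
        chain.append(names[best])
    return chain, attn

# Toy reasoning graph (assumed): a detected face region is aligned to a person
# entity, which links to two knowledge-graph entities.
names = ["face_region", "person_entity", "birth_country", "other_entity"]
adjacency = [
    [0, 1, 0, 0],  # face_region   -> person_entity (cross-modal alignment)
    [0, 0, 1, 1],  # person_entity -> birth_country, other_entity
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
node_vecs = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
hop_queries = [[0, 1, 0, 0], [0, 0, 1, 0]]  # "who is this?" then "born where?"
start_attn = [1.0, 0.0, 0.0, 0.0]           # reasoning starts at the image object

chain, final_attn = multi_hop(start_attn, adjacency, node_vecs, hop_queries, names)
print(chain)
```

In this toy setup the first hop can only reach the aligned entity, and the second hop splits its attention between the two neighbours of that entity according to the query, so the printed chain traces the path from the image region to the answer node.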

Acknowledgment

This work is supported by the National Natural Science Foundation of China (62276279), the Key-Area Research and Development Program of Guangdong Province (2020B0101100001), the Tencent WeChat Rhino-Bird Focused Research Program (WXG-FR-2023-06), the Open Fund of Guangdong Key Laboratory of Big Data Analysis and Processing (202301), and the Zhuhai Industry-University-Research Cooperation Project (2220004002549).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, S., Yu, J., Lin, M., Qiu, S., Luo, X., Yin, J. (2023). A Knowledge-Enhanced Inferential Network for Cross-Modality Multi-hop VQA. In: Yang, X., et al. Advanced Data Mining and Applications. ADMA 2023. Lecture Notes in Computer Science, vol 14177. Springer, Cham. https://doi.org/10.1007/978-3-031-46664-9_41

DOI: https://doi.org/10.1007/978-3-031-46664-9_41

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-46663-2

Online ISBN: 978-3-031-46664-9

eBook Packages: Computer Science, Computer Science (R0)