Abstract

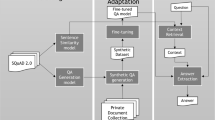

Open-domain answer sentence selection (OD-AS2), a practical branch of open-domain question answering (OD-QA), aims to answer a query with a candidate answer sentence retrieved from a large-scale collection. A dense retrieval model plays a central role across solution paradigms, but its success depends heavily on sufficient labeled positive QA pairs and diverse hard negatives for contrastive learning. These requirements are hard to meet in a privacy-preserving distributed scenario, where each client holds too few in-domain pairs and too small a collection to train an effective dense retriever. To alleviate this, we propose a new learning framework for Privacy-preserving Distributed OD-AS2, dubbed PDD-AS2. Built on federated learning, it combines client-customized query encoding for better personalization with cross-client negative sampling for more effective learning. To evaluate the framework, we construct a new OD-AS2 dataset, Fed-NewsQA, derived from NewsQA to simulate distributed clients holding data from different genres/domains. Experimental results show that the framework outperforms its baselines and exhibits personalization ability.
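As a rough illustration of the two standard ingredients the abstract builds on, the sketch below pairs an InfoNCE-style contrastive loss (the usual objective for training a dense retriever with hard negatives) with FedAvg-style weighted parameter averaging (the standard federated learning aggregation rule). It is a minimal, dependency-free sketch under our own assumptions: parameters are flattened lists of floats, similarity is an inner product, and the function names `info_nce_loss` and `fed_avg` are hypothetical. It does not reproduce PDD-AS2's client-customized query encoding or cross-client negative sampling, whose details are not given here.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner-product similarity (an assumed choice; dense retrievers often use it)."""
    return sum(x * y for x, y in zip(a, b))


def info_nce_loss(query: Vector, positive: Vector, negatives: List[Vector],
                  temperature: float = 1.0) -> float:
    """Contrastive (InfoNCE-style) loss for a single query.

    The positive answer sentence competes against all negatives; the loss is
    -log softmax(score_positive) over [positive] + negatives.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scores = [dot(query, positive) / temperature]
    scores += [dot(query, n) / temperature for n in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - scores[0]


def fed_avg(client_params: List[Vector], client_sizes: List[int]) -> Vector:
    """FedAvg-style aggregation: average client parameters weighted by local data size."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one size per client parameter vector")
    total = sum(client_sizes)
    avg = [0.0] * len(client_params[0])
    for params, n in zip(client_params, client_sizes):
        for i, p in enumerate(params):
            avg[i] += p * n / total
    return avg


if __name__ == "__main__":
    q, pos = [1.0, 0.0], [0.9, 0.1]
    easy = info_nce_loss(q, pos, [[-1.0, 0.0]])  # negative far from the query
    hard = info_nce_loss(q, pos, [[0.8, 0.2]])   # negative close to the positive
    print(f"easy-negative loss: {easy:.4f}, hard-negative loss: {hard:.4f}")
    # Two hypothetical clients with 1 and 3 local examples.
    print(fed_avg([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

With the toy vectors above, the hard negative produces a larger loss than the easy one, which is why hard negatives carry more training signal. A client whose small local collection supplies few such negatives therefore gets a weaker contrastive signal, the limitation the abstract attributes to the distributed setting.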

Notes

1. We will make our data and code public.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, W., Shen, T., Blumenstein, M., Long, G. (2023). Improving Open-Domain Answer Sentence Selection by Distributed Clients with Privacy Preservation. In: Yang, X., et al. Advanced Data Mining and Applications. ADMA 2023. Lecture Notes in Computer Science, vol 14180. Springer, Cham. https://doi.org/10.1007/978-3-031-46677-9_2

DOI: https://doi.org/10.1007/978-3-031-46677-9_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-46676-2

Online ISBN: 978-3-031-46677-9

eBook Packages: Computer Science, Computer Science (R0)