Abstract

The global decline in amphibian populations is a pressing issue, with numerous species facing the threat of extinction. One such species is the pool frog, Pelophylax lessonae, whose Norwegian population has experienced a significant long-term decline since monitoring began in 1996. This decline has pushed the species to the verge of local extinction. A substantial knowledge gap in the species’ biology hinders the proposal and evaluation of effective management actions. Consequently, there is a pressing need for efficient techniques to gather data on population size and composition.

Recent advancements in Machine Learning (ML) and Deep Learning (DL) have shown promising results in various domains, including ecology and evolution. Current research in these fields primarily focuses on species modeling, behavior detection, and identity recognition. The progress in mobile technology, ML, and DL has equipped researchers across numerous disciplines, including ecology, with innovative data collection methods for building knowledge bases on species and ecosystems. This study addresses the need for systematic field data collection for monitoring endangered species like the pool frog by employing deep learning and image processing techniques.

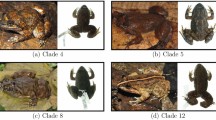

In this research, a multidisciplinary team developed a technique, termed ReFrogID, to identify individual frogs by their unique abdominal patterns. Operating on RGB images, the system rests on two main components: (1) a DL algorithm for automatic segmentation that achieves an AP of 89.147, an AP50 of 99.123, and an AP75 of 98.942, and (2) pattern matching via local feature detection and matching methods. A new dataset, pelophylax_lessonae, is introduced to address the identity recognition problem in pool frogs. The effectiveness of ReFrogID is validated by its ability to identify frogs even when human experts fail. The source code is available here.
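The second component, pattern matching via local feature matching, can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the abdominal pattern has already been segmented and converted into binary descriptors (as ORB-style detectors produce), and it uses brute-force Hamming matching with a ratio test. The function names, the `gallery` structure, and the `ratio=0.75` threshold are all hypothetical choices made for illustration.

```python
from typing import Dict, List, Optional, Tuple


def hamming(a: bytes, b: bytes) -> int:
    """Count the differing bits between two equal-length binary descriptors."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def ratio_test_matches(
    query: List[bytes], reference: List[bytes], ratio: float = 0.75
) -> List[Tuple[int, int, int]]:
    """Brute-force match each query descriptor against all reference descriptors.

    A match is kept only if the best distance is clearly smaller than the
    second-best distance (ratio test). Returns (query_idx, ref_idx, distance).
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((hamming(q, r), ri) for ri, r in enumerate(reference))
        if len(dists) < 2:
            continue  # the ratio test needs at least two candidates
        (d1, i1), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, i1, d1))
    return matches


def best_identity(
    query: List[bytes], gallery: Dict[str, List[bytes]], ratio: float = 0.75
) -> Tuple[Optional[str], Dict[str, int]]:
    """Score each known individual by its number of surviving matches.

    `gallery` is a hypothetical mapping from an individual's ID to the
    descriptors extracted from a previously stored image of that individual.
    """
    scores = {
        name: len(ratio_test_matches(query, descs, ratio))
        for name, descs in gallery.items()
    }
    if not scores:
        return None, scores
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    # Toy two-byte descriptors; real descriptors are much longer.
    gallery = {
        "frog_a": [b"\x00\x00", b"\xff\xff"],
        "frog_b": [b"\xf0\xf0", b"\x0f\x0f"],
    }
    query = [b"\x00\x01", b"\xff\xfe"]
    print(best_identity(query, gallery))
```

In practice the match count would be compared against a minimum threshold before declaring an identity, so that a previously unseen frog is not forced onto the closest known individual.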

Notes

1. Please consult the source code repository for additional training graphs.

2. More detailed results can be found in the TensorBoard recording here.

3. The mobile application can be found in the source code repository.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Evensen, V.N. et al. (2023). ReFrogID: Pattern Recognition for Pool Frog Identification Using Deep Learning and Feature Matching. In: Bramer, M., Stahl, F. (eds) Artificial Intelligence XL. SGAI 2023. Lecture Notes in Computer Science, vol 14381. Springer, Cham. https://doi.org/10.1007/978-3-031-47994-6_33

DOI: https://doi.org/10.1007/978-3-031-47994-6_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-47993-9

Online ISBN: 978-3-031-47994-6

eBook Packages: Computer Science (R0)