Abstract

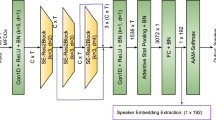

Throughout the history of computational paralinguistics, numerous feature extraction, preprocessing and classification techniques have been used. One of the important challenges in this subfield of speech technology is handling utterances of different durations. Standard speech processing features (such as filter banks or DNN embeddings) are typically computed at the frame level, whereas we would like to classify whole utterances, so the set of frame-level features has to be converted into a fixed-size utterance-level feature vector. The choice of this aggregation method is often overlooked, and simple functions such as the mean and/or standard deviation are used without solid experimental support. In this study we take wav2vec 2.0 deep embeddings and aggregate them with 11 different functions. We sought to identify a subset of potentially optimal aggregation functions, because there are as yet no general rules that can be applied universally across subtopics. Besides testing both standard and non-traditional aggregation strategies individually, we also combined them to improve classification performance. By using multiple aggregation functions, we were able to achieve significant improvements on three public paralinguistic corpora.
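
As a rough illustration of the aggregation step described above, the following sketch turns a variable number of frame-level embedding vectors into one fixed-size utterance-level vector: each chosen function is applied to every embedding dimension over time, and the per-function results are concatenated. The function set (mean, standard deviation, minimum, maximum, median) and the names `AGGREGATORS` and `aggregate` are illustrative assumptions; the abstract does not list the 11 functions the authors used, and real wav2vec 2.0 frames would come from a pretrained model rather than hand-written lists.

```python
import statistics
from typing import Callable, Dict, List, Sequence

# One frame-level embedding vector (e.g. a single wav2vec 2.0 output frame).
Frame = Sequence[float]

# Hypothetical selection of aggregation functions; NOT the paper's list of 11.
AGGREGATORS: Dict[str, Callable[[List[float]], float]] = {
    "mean": statistics.fmean,
    "std": statistics.pstdev,   # population standard deviation
    "min": min,
    "max": max,
    "median": statistics.median,
}


def aggregate(frames: Sequence[Frame], names: Sequence[str]) -> List[float]:
    """Map T frames of dimension D to a vector of length len(names) * D."""
    if not frames:
        raise ValueError("need at least one frame")
    dim = len(frames[0])
    if any(len(f) != dim for f in frames):
        raise ValueError("all frames must have the same dimensionality")
    # Transpose: one list of values over time per embedding dimension.
    columns = [[f[d] for f in frames] for d in range(dim)]
    features: List[float] = []
    for name in names:
        func = AGGREGATORS[name]
        # Apply the function to each dimension independently, then concatenate.
        features.extend(float(func(col)) for col in columns)
    return features


# Two utterances of different length (2 and 3 frames), both with D = 2.
short = [[0.0, 1.0], [2.0, 3.0]]
long = [[1.0, 1.0], [3.0, 5.0], [5.0, 3.0]]
print(aggregate(short, ["mean", "std"]))  # → [1.0, 2.0, 1.0, 1.0]
print(len(aggregate(long, ["mean", "std"])))  # → 4
```

Because each function yields exactly one value per dimension, the output length is `len(names) * D` regardless of how many frames the utterance contains, which is what lets a classifier with a fixed input size handle utterances of any duration. Combining several functions simply widens this vector.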

Acknowledgements

This research was supported by the NRDI Office of the Hungarian Ministry of Innovation and Technology (grant no. TKP2021-NVA-09), and within the framework of the Artificial Intelligence National Laboratory Program (RRF-2.3.1-21-2022-00004).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Vetráb, M., Gosztolya, G. (2023). Aggregation Strategies of Wav2vec 2.0 Embeddings for Computational Paralinguistic Tasks. In: Karpov, A., Samudravijaya, K., Deepak, K.T., Hegde, R.M., Agrawal, S.S., Prasanna, S.R.M. (eds) Speech and Computer. SPECOM 2023. Lecture Notes in Computer Science(), vol 14338. Springer, Cham. https://doi.org/10.1007/978-3-031-48309-7_7

DOI: https://doi.org/10.1007/978-3-031-48309-7_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-48308-0

Online ISBN: 978-3-031-48309-7

eBook Packages: Computer Science, Computer Science (R0)