Abstract

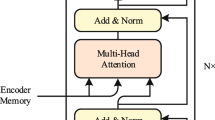

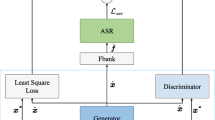

State-of-the-art spoken language identification (LID) systems are sensitive to domain mismatch between training and testing samples; as a result, they often perform poorly in unseen target-domain conditions. To improve performance under domain mismatch, an LID system should be encouraged to learn domain-invariant representations of speech. In this paper, we propose an adversarially trained hierarchical attention network to achieve this. Specifically, the proposed method first uses a transformer encoder that applies attention at three levels to learn better representations at the segment level, the suprasegmental level and the utterance level. This hierarchical attention mechanism allows the network to better encode the LID-specific content of speech. The network is then encouraged to learn domain-invariant representations of speech using adversarial multi-task learning (AMTL). Results on unseen target-domain conditions demonstrate the superiority of the proposed approach over state-of-the-art baselines.
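
The abstract combines two ideas: attention applied at the segment, suprasegmental and utterance levels, and adversarial multi-task learning (AMTL) for domain invariance. Below is a minimal, stdlib-only Python sketch of both ideas under stated assumptions; it is not the authors' implementation. Each level is simplified to dot-product attention pooling with a fixed query vector instead of a learned transformer-encoder layer, segment and suprasegment boundaries are fixed-size chunks (`seg_len` and `supra_len` are hypothetical parameters), and the adversarial part is written as a gradient reversal layer with weight `lam`. Gradient reversal is a common way to implement domain-adversarial training (Ganin and Lempitsky, 2015), but whether this paper uses it is an assumption. Domain labels (for example, the corpus an utterance came from) are also assumed to be available during training.

```python
import math
from typing import List, Sequence

# Hypothetical sketch: attention pooling stands in for transformer-encoder
# layers; seg_len, supra_len and lam are illustrative names, not the paper's.
Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(vectors: Sequence[Vector], query: Vector) -> Vector:
    """Summarise a sequence of vectors as an attention-weighted average.

    Each vector is scored by its dot product with `query` (a stand-in for a
    learned parameter); the softmax of those scores gives the weights.
    """
    scores = [sum(q * x for q, x in zip(query, vec)) for vec in vectors]
    weights = softmax(scores)
    dim = len(vectors[0])
    return [sum(w * vec[d] for w, vec in zip(weights, vectors)) for d in range(dim)]


def chunk(seq: Sequence, size: int) -> List[Sequence]:
    """Split a sequence into consecutive, non-overlapping blocks of `size`."""
    return [seq[i:i + size] for i in range(0, len(seq), size)]


def hierarchical_embedding(frames, queries, seg_len, supra_len):
    """Frames -> segment vectors -> suprasegment vectors -> utterance vector."""
    segments = [attention_pool(c, queries[0]) for c in chunk(frames, seg_len)]
    supras = [attention_pool(c, queries[1]) for c in chunk(segments, supra_len)]
    return attention_pool(supras, queries[2])


def cross_entropy(logits: Vector, target: int) -> float:
    """Negative log-probability of the target class."""
    return -math.log(softmax(logits)[target])


def amtl_encoder_objective(lid_logits, lid_target, dom_logits, dom_target, lam):
    """Loss seen by the shared encoder: identify the language well while
    making the domain classifier's job harder (weighted by `lam`)."""
    return cross_entropy(lid_logits, lid_target) - lam * cross_entropy(dom_logits, dom_target)


def reverse_gradient(upstream: Vector, lam: float) -> Vector:
    """Backward pass of a gradient reversal layer (its forward pass is identity)."""
    return [-lam * g for g in upstream]


def encoder_gradient(grad_lid: Vector, grad_dom: Vector, lam: float) -> Vector:
    """Gradient reaching the shared embedding: the LID gradient plus the
    reversed gradient coming back from the domain classifier."""
    return [a + b for a, b in zip(grad_lid, reverse_gradient(grad_dom, lam))]
```

For example, `hierarchical_embedding([[1.0, 2.0]] * 8, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], 2, 2)` pools eight frames into four segments, two suprasegments and one utterance vector. In this sketch the domain classifier itself would still minimise its own cross-entropy; only the shared encoder receives `grad_lid - lam * grad_dom`, which is the gradient of `amtl_encoder_objective`.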

Acknowledgement

This work resulted from research supported by the Ministry of Electronics & Information Technology (MeitY), Government of India, through the project titled “National Language Translation Mission (NLTM): BHASHINI”.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Goswami, U., Muralikrishna, H., Dileep, A.D., Thenkanidiyoor, V. (2023). Adversarially Trained Hierarchical Attention Network for Domain-Invariant Spoken Language Identification. In: Karpov, A., Samudravijaya, K., Deepak, K.T., Hegde, R.M., Agrawal, S.S., Prasanna, S.R.M. (eds) Speech and Computer. SPECOM 2023. Lecture Notes in Computer Science, vol 14339. Springer, Cham. https://doi.org/10.1007/978-3-031-48312-7_38

DOI: https://doi.org/10.1007/978-3-031-48312-7_38

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-48311-0

Online ISBN: 978-3-031-48312-7

eBook Packages: Computer Science, Computer Science (R0)