Abstract

Automatic surgical skill assessment could transform how surgical proficiency is evaluated, developed, and improved. It offers objectivity, precision, and real-time feedback, which can help novice surgeons improve their skills more effectively and efficiently. In this study, we explore hand skeleton dynamics as a means of evaluating surgical proficiency. Specifically, we aim to distinguish experienced surgeons from surgical residents by analyzing sequences of hand skeletons. To the best of our knowledge, this is the first study to use hand skeleton sequences for surgical skill assessment. To capture the spatial-temporal correlations within these sequences, we present STGFormer, a novel approach that combines Graph Convolutional Networks and Transformers. STGFormer is designed to learn rich spatial-temporal representations and to capture long-range dependencies efficiently. We evaluated the approach on a dataset of experienced surgeons and surgical residents practicing surgical procedures in a simulated training environment. Our experimental results show that STGFormer outperforms state-of-the-art models on this task, achieving an accuracy of 83.29% and a weighted average F1-score of 81.41%. These results are improvements of 1.37% and 1.28%, respectively, over the best state-of-the-art model.
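
The abstract reports two metrics, accuracy and weighted average F1-score. As a minimal sketch of how the two differ, the snippet below computes both for a two-class problem, assuming the usual definition of weighted F1 as the mean of per-class F1 scores weighted by class support. The class names and the toy labels are hypothetical and are not taken from the study's data; the helper names are illustrative only.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_f1(y_true, y_pred, label):
    """F1 for one class: 2*TP / (2*TP + FP + FN), or 0.0 if undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's support."""
    support = Counter(y_true)
    total = len(y_true)
    return sum(
        (count / total) * per_class_f1(y_true, y_pred, label)
        for label, count in support.items()
    )


# Hypothetical toy labels (not from the paper): 4 experts, 6 residents.
y_true = ["expert"] * 4 + ["resident"] * 6
y_pred = [
    "expert", "expert", "expert", "resident",            # predictions for experts
    "resident", "resident", "resident", "resident",      # predictions for residents
    "expert", "expert",
]

acc = accuracy(y_true, y_pred)
wf1 = weighted_f1(y_true, y_pred)
print(f"accuracy    = {acc:.4f}")
print(f"weighted F1 = {wf1:.4f}")
```

Because the weights follow class support, the weighted F1-score can differ from accuracy when the classes are imbalanced or when errors are unevenly distributed across classes, which is one reason to report both.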

References

Bai, S., Kolter, J.Z., Koltun, V.: An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271 (2018)

De Smedt, Q., Wannous, H., Vandeborre, J.P.: Skeleton-based dynamic hand gesture recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 1–9 (2016)

Funke, I., Mees, S.T., Weitz, J., Speidel, S.: Video-based surgical skill assessment using 3D convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 14, 1217–1225 (2019)

Gao, Y., et al.: JHU-ISI gesture and skill assessment working set (jigsaws): a surgical activity dataset for human motion modeling. In: MICCAI workshop: M2cai, vol. 3 (2014)

Goh, A.C., Goldfarb, D.W., Sander, J.C., Miles, B.J., Dunkin, B.J.: Global evaluative assessment of robotic skills: validation of a clinical assessment tool to measure robotic surgical skills. J. Urol. 187(1), 247–252 (2012)

Guo, S., Lin, Y., Feng, N., Song, C., Wan, H.: Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 922–929 (2019)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

Ismail Fawaz, H., Forestier, G., Weber, J., Idoumghar, L., Muller, P.-A.: Evaluating surgical skills from kinematic data using convolutional neural networks. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11073, pp. 214–221. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00937-3_25

Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016)

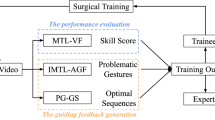

Liu, D., et al.: Towards unified surgical skill assessment. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9522–9531 (2021)

Maghoumi, M., LaViola, J.J.: DeepGRU: deep gesture recognition utility. In: Bebis, G., et al. (eds.) ISVC 2019, Part I. LNCS, vol. 11844, pp. 16–31. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-33720-9_2

Martin, J., et al.: Objective structured assessment of technical skill (OSATS) for surgical residents. Br. J. Surg. 84(2), 273–278 (1997)

Mazzia, V., Angarano, S., Salvetti, F., Angelini, F., Chiaberge, M.: Action transformer: a self-attention model for short-time pose-based human action recognition. Pattern Recogn. 124, 108487 (2022)

Pérez-Escamirosa, F., et al.: Objective classification of psychomotor laparoscopic skills of surgeons based on three different approaches. Int. J. Comput. Assist. Radiol. Surg. 15(1), 27–40 (2020)

Peters, J.H., et al.: Development and validation of a comprehensive program of education and assessment of the basic fundamentals of laparoscopic surgery. Surgery 135(1), 21–27 (2004)

Plizzari, C., Cannici, M., Matteucci, M.: Spatial temporal transformer network for skeleton-based action recognition. In: Del Bimbo, A., et al. (eds.) ICPR 2021, Part III. LNCS, vol. 12663, pp. 694–701. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-68796-0_50

Slama, R., Rabah, W., Wannous, H.: STR-GCN: dual spatial graph convolutional network and transformer graph encoder for 3D hand gesture recognition. In: IEEE FG, pp. 1–6 (2023)

Vaswani, A., et al.: Attention is all you need. In: Advances in Neural Information Processing Systems, vol. 30 (2017)

Wang, L., et al.: Temporal segment networks for action recognition in videos. IEEE Trans. Pattern Anal. Mach. Intell. 41(11), 2740–2755 (2018)

Wang, Z., Majewicz Fey, A.: Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int. J. Comput. Assist. Radiol. Surg. 13, 1959–1970 (2018)

Yu, B., Yin, H., Zhu, Z.: Spatio-temporal graph convolutional networks: a deep learning framework for traffic forecasting. arXiv preprint arXiv:1709.04875 (2017)

Zhang, F., et al.: Mediapipe hands: On-device real-time hand tracking. arXiv preprint arXiv:2006.10214 (2020)

Zhang, Y., Wu, B., Li, W., Duan, L., Gan, C.: STST: spatial-temporal specialized transformer for skeleton-based action recognition. In: Proceedings of the 29th ACM International Conference on Multimedia, pp. 3229–3237 (2021)

Zia, A., Sharma, Y., Bettadapura, V., Sarin, E.L., Essa, I.: Video and accelerometer-based motion analysis for automated surgical skills assessment. Int. J. Comput. Assist. Radiol. Surg. 13, 443–455 (2018)

Copyright information

© 2024 Springer Nature Switzerland AG

About this paper

Cite this paper

Feghoul, K., Maia, D.S., Amrani, M.E., Daoudi, M., Amad, A. (2024). Spatial-Temporal Graph Transformer for Surgical Skill Assessment in Simulation Sessions. In: Vasconcelos, V., Domingues, I., Paredes, S. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2023. Lecture Notes in Computer Science, vol 14469. Springer, Cham. https://doi.org/10.1007/978-3-031-49018-7_21

DOI: https://doi.org/10.1007/978-3-031-49018-7_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-49017-0

Online ISBN: 978-3-031-49018-7

eBook Packages: Computer Science, Computer Science (R0)