Abstract

Camera calibration is a first and fundamental step in various computer vision applications. Although camera calibration is an active field of research, Zhang’s method remains widely used due to its implementation in popular toolboxes like MATLAB and OpenCV. However, this method initially assumes a pinhole model with oversimplified distortion models. In this work, we propose a novel approach that involves a pre-processing step to remove distortions from images by means of Gaussian processes. Our method does not need to assume any distortion model and can be applied to severely warped images, even in the case of multiple distortion sources, e.g., a fisheye image of a curved mirror reflection. The Gaussian processes capture all distortions and camera imperfections, resulting in virtual images as though taken by an ideal pinhole camera with square pixels. Furthermore, this ideal GP-camera only needs one image of a square grid calibration pattern. This model allows for a serious upgrade of many algorithms and applications that are designed in a pure projective geometry setting but whose performance is very sensitive to non-linear lens distortions. We demonstrate the effectiveness of our method by simplifying Zhang’s calibration method, reducing the number of parameters and eliminating the distortion parameters and the iterative optimization. We validate our approach on synthetic data and real-world images. The contributions of this work include the construction of a virtual ideal pinhole camera using Gaussian processes, a simplified calibration method and lens distortion removal.

References

Beardsley, P., Murray, D., Zisserman, A.: Camera calibration using multiple images. In: Sandini, G. (ed.) ECCV 1992. LNCS, vol. 588, pp. 312–320. Springer, Heidelberg (1992). https://doi.org/10.1007/3-540-55426-2_36

Burger, W.: Zhang’s camera calibration algorithm: in-depth tutorial and implementation. HGB16-05 pp. 1–6 (2016)

Caprile, B., Torre, V.: Using vanishing points for camera calibration. Int. J. Comput. Vision 4(2), 127–139 (1990). https://doi.org/10.1007/BF00127813

Devernay, F., Faugeras, O.: Straight lines have to be straight. Mach. Vis. Appl. 13(1), 14–24 (2001). https://doi.org/10.1007/PL00013269

Duvenaud, D.K., College, P.: Automatic model construction with Gaussian processes. PhD thesis (2014). https://doi.org/10.17863/CAM.14087

Galan, M., Strojnik, M., Wang, Y.: Design method for compact, achromatic, high-performance, solid catadioptric system (SoCatS), from visible to IR. Opt. Express 27(1), 142–149 (2019)

Hartley, R.I., Zisserman, A.: Multiple View Geometry in Computer Vision. Cambridge University Press, ISBN: 0521540518, second edn. (2004)

Khan, A., Li, J.-P., Malik, A., Yusuf Khan, M.: Vision-based inceptive integration for robotic control. In: Wang, J., Reddy, G.R.M., Prasad, V.K., Reddy, V.S. (eds.) Soft Computing and Signal Processing. AISC, vol. 898, pp. 95–105. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-3393-4_11

Lesueur, V., Nozick, V.: Least square for Grassmann-Cayley agelbra in homogeneous coordinates. In: Huang, F., Sugimoto, A. (eds.) PSIVT 2013. LNCS, vol. 8334, pp. 133–144. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-642-53926-8_13

Li, Z., Yuxuan, L., Yangjie, S., Chaozhen, L., Haibin, A., Zhongli, F.: A review of developments in the theory and technology of three-dimensional reconstruction in digital aerial photogrammetry. Acta Geodaet. et Cartographica Sinica 51(7), 1437 (2022)

Liao, K., et al.: Deep learning for camera calibration and beyond: a survey. arXiv preprint arXiv:2303.10559 (2023)

Liu, D.C., Nocedal, J.: On the limited memory BFGS method for large scale optimization. Math. Program. 45(1–3), 503–528 (1989). https://doi.org/10.1007/BF01589116

Mertan, A., Duff, D.J., Unal, G.: Single image depth estimation: an overview. Digital Signal Process. 123, 103441 (2022)

Penne, R.: A mechanical interpretation of least squares fitting in 3D. Bull. Belg. Math. Soc.-Simon Stevin 15(1), 127–134 (2008)

Penne, R., Ribbens, B., Puttemans, S.: A new method for computing the principal point of an optical sensor by means of sphere images. In: Jawahar, C.V., Li, H., Mori, G., Schindler, K. (eds.) ACCV 2018. LNCS, vol. 11361, pp. 676–690. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-20887-5_42

Penne, R., Ribbens, B., Roios, P.: An exact robust method to localize a known sphere by means of one image. Int. J. Comput. Vision 127(8), 1012–1024 (2018). https://doi.org/10.1007/s11263-018-1139-6

Puig, L., Bermúdez, J., Sturm, P., Guerrero, J.J.: Calibration of omnidirectional cameras in practice: a comparison of methods. Comput. Vis. Image Underst. 116(1), 120–137 (2012)

Ramalingam, S., Sturm, P.: A unifying model for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 39(7), 1309–1319 (2017). https://doi.org/10.1109/tpami.2016.2592904

Ranganathan, P., Olson, E.: Gaussian process for lens distortion modeling. In: 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 3620–3625 (2012). https://doi.org/10.1109/iros.2012.6385481

Rasmussen, C.E., Williams, C.K.I.: Gaussian processes for machine learning. The MIT Press (2006)

Raza, S.N., ur Rehman, H.R., Lee, S.G., Choi, G.S.: Artificial intelligence based camera calibration. In: 2019 15th International Wireless Communications and Mobile Computing Conference (IWCMC), pp. 1564–1569. IEEE (2019)

Sarath, B., Varadarajan, K.: Fundamental theorem of projective geometry. Comm. Algebra 12(8), 937–952 (1984). https://doi.org/10.1080/00927878408823034

Sels, S., Ribbens, B., Vanlanduit, S., Penne, R.: Camera calibration using gray code. Sensors 19(2), 246 (2019). https://doi.org/10.3390/s19020246, https://www.mdpi.com/1424-8220/19/2/246

Smith, P., Reid, I.D., Davison, A.J.: Real-time monocular SLAM with straight lines (2006)

Sun, J., Chen, X., Gong, Z., Liu, Z., Zhao, Y.: Accurate camera calibration with distortion models using sphere images. Opt. Laser Technol. 65, 83–87 (2015)

Wu, Y., Jiang, S., Xu, Z., Zhu, S., Cao, D.: Lens distortion correction based on one chessboard pattern image. Front. Optoelectron. 8(3), 319–328 (2015). https://doi.org/10.1007/s12200-015-0453-7

Zhang, Y., Zhao, X., Qian, D.: Learning-based framework for camera calibration with distortion correction and high precision feature detection. arXiv preprint arXiv:2202.00158 (2022)

Zhang, Z.: A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22(11), 1330–1334 (2000)

Zheng, Z., Xie, X., Yu, Y.: Image undistortion and stereo rectification based on central ray-pixel models. In: Artificial Intelligence and Robotics: 7th International Symposium, ISAIR 2022, Shanghai, China, October 21–23, 2022, Proceedings, Part II, pp. 40–55. Springer (2022). https://doi.org/10.1007/978-981-19-7943-9_4

Acknowledgements

Conceptualization, I.D.B. and R.P.; methodology, I.D.B., S.P. and R.P.; software, I.D.B.; validation, S.P.; formal analysis, I.D.B., S.P. and R.P.; data curation, I.D.B. and M.O.; writing (original draft preparation), I.D.B. and S.P.; writing (review and editing), I.D.B., S.P., M.O. and R.P.; supervision, R.P.; project administration, R.P.; funding acquisition, R.P.

Funding

The authors would like to acknowledge funding from the following PhD scholarships: BOF FFB200259, Antigoon ID 42339; UAntwerp-Faculty of Applied Engineering; 2020.06592.BD funded by FCT, Portugal and the Institute of Systems and Robotics - University of Coimbra, under project UIDB/0048/2020.

Appendix

Zhang’s Method

The intrinsic camera matrix for a pinhole camera \(\textbf{K}\) can be written as

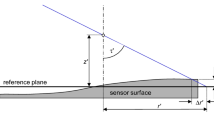

in which \(f\) is the focal length, \(s_x\) and \(s_y\) are sensor scale factors, \(s_{\theta }\) is a skew factor and \((u_c,v_c)\) is the coordinate of the image centre with respect to the image coordinate system. However, real-world cameras and their lenses suffer from imperfections. These introduce all sorts of distortions, of which radial distortion is the most commonly implemented. Calibrating this non-ideal pinhole camera means finding values for the intrinsics \(\textbf{K}\), for the extrinsics \([\textbf{R}\mid \textbf{t}]\), and for the parameters of whichever distortion model is implemented.
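For readers working from the text alone, the matrix can be written out explicitly. The arrangement below follows the parameterisation in Burger's tutorial listed in the references; that the matrix shown in the image above uses exactly this arrangement is an assumption.

```latex
\[
\textbf{K} =
\begin{pmatrix}
f s_x & f s_{\theta} & u_c \\
0     & f s_y        & v_c \\
0     & 0            & 1
\end{pmatrix}
\]
```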

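Zhang’s method is based on the images of checkerboards with known size and structure. For each position of the board, we construct a coordinate system where the \(X\)- and \(Y\)-axis are on the board and the \(Z\)-axis is perpendicular to it. We assign all checkerboard corners a 3D coordinate in this system with a \(Z\)-component of zero. Writing \((u, v)\) for the image of the board point \((X, Y, 0)\) and \(s\) for an arbitrary non-zero scale factor, this allows us to rewrite Eq. 8 as

\[ s \begin{pmatrix} u \\ v \\ 1 \end{pmatrix} = \textbf{K} \begin{pmatrix} \mathbf{r_1} & \mathbf{r_2} & \mathbf{t} \end{pmatrix} \begin{pmatrix} X \\ Y \\ 1 \end{pmatrix} \tag{12} \]

in which \(\mathbf {r_1}\) and \(\mathbf {r_2}\) are the first two columns of \(\textbf{R}\). This equation shows a 2D to 2D correspondence known as a homography. This means we can write

\[ s \begin{pmatrix} u \\ v \\ 1 \end{pmatrix} = \textbf{H} \begin{pmatrix} X \\ Y \\ 1 \end{pmatrix} \tag{13} \]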

with \(\textbf{H}\) the 3\(\,\times \,\)3 matrix that describes the homography. This matrix is only determined up to a scalar factor, so it has eight degrees of freedom. Each point correspondence yields two equations. Therefore, four point correspondences are needed to solve for \(\textbf{H}\). In practice, we work with several more points in an overdetermined system to compensate for noise in the measurements.
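The homography estimation described above can be sketched with the direct linear transform (DLT). This is a minimal illustration, not the authors' implementation: the function name `estimate_homography` and the normalisation by the bottom-right entry are assumptions, and the coordinate normalisation often applied in practice is omitted for brevity.

```python
import numpy as np

def estimate_homography(board_xy, image_uv):
    """Estimate H (up to scale) from >= 4 board-to-image point pairs via DLT."""
    board_xy = np.asarray(board_xy, dtype=float)
    image_uv = np.asarray(image_uv, dtype=float)
    rows = []
    for (X, Y), (u, v) in zip(board_xy, image_uv):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y, -u])
        rows.append([0, 0, 0, X, Y, 1, -v * X, -v * Y, -v])
    A = np.array(rows)
    # The right singular vector of the smallest singular value minimises |A h|
    # subject to |h| = 1, which handles the overdetermined (noisy) case.
    _, _, Vt = np.linalg.svd(A)
    H = Vt[-1].reshape(3, 3)
    # Fix the free scale; assumes the bottom-right entry is non-zero.
    return H / H[2, 2]
```

With exactly four correspondences in general position the system has a one-dimensional null space; with more points the SVD returns the least-squares solution.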

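From these homographies, one for every position of the checkerboard, we estimate the camera intrinsic and extrinsic parameters. From Eqs. 12 and 13, we can write a decomposition for \(\textbf{H}\), up to a multiple, as

\[ \textbf{H} = \begin{pmatrix} \mathbf{h_1} & \mathbf{h_2} & \mathbf{h_3} \end{pmatrix} = \lambda \textbf{K} \begin{pmatrix} \mathbf{r_1} & \mathbf{r_2} & \mathbf{t} \end{pmatrix} \tag{14} \]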

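where \(\lambda \) is a scaling factor and \(\mathbf {h_1}\), \(\mathbf {h_2}\) and \(\mathbf {h_3}\) are the columns of \(\textbf{H}\). We observe the following relationships:

\[ \mathbf{h_1} = \lambda \textbf{K} \mathbf{r_1} \tag{15} \]

\[ \mathbf{h_2} = \lambda \textbf{K} \mathbf{r_2} \tag{16} \]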

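Moreover, since \(\textbf{R}\) is a rotation matrix, it is orthonormal. This means \(\mathbf {r_1}^T \mathbf {r_2} = 0\) and \(\Vert \mathbf {r_1}\Vert = \Vert \mathbf {r_2}\Vert = 1\). Combining these equations yields

\[ \mathbf{h_1}^T \textbf{K}^{-T} \textbf{K}^{-1} \mathbf{h_2} = 0 \tag{17} \]

\[ \mathbf{h_1}^T \textbf{K}^{-T} \textbf{K}^{-1} \mathbf{h_1} = \mathbf{h_2}^T \textbf{K}^{-T} \textbf{K}^{-1} \mathbf{h_2} \tag{18} \]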

These are now independent of the camera extrinsics.
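The intermediate substitution can be spelled out as a short derivation. It assumes the convention \(\textbf{H} = \lambda \textbf{K} [\mathbf{r_1}\ \mathbf{r_2}\ \mathbf{t}]\) with \(\lambda \ne 0\); the placement of \(\lambda\) is an assumption, and the resulting constraints are the same under the reciprocal convention.

```latex
\begin{align*}
\mathbf{r_i} &= \tfrac{1}{\lambda}\, \textbf{K}^{-1} \mathbf{h_i}, \qquad i = 1, 2 \\
\mathbf{r_1}^T \mathbf{r_2} = 0
  &\;\Longrightarrow\; \tfrac{1}{\lambda^2}\, \mathbf{h_1}^T \textbf{K}^{-T} \textbf{K}^{-1} \mathbf{h_2} = 0 \\
\mathbf{r_1}^T \mathbf{r_1} = \mathbf{r_2}^T \mathbf{r_2}
  &\;\Longrightarrow\; \tfrac{1}{\lambda^2}\, \mathbf{h_1}^T \textbf{K}^{-T} \textbf{K}^{-1} \mathbf{h_1}
   = \tfrac{1}{\lambda^2}\, \mathbf{h_2}^T \textbf{K}^{-T} \textbf{K}^{-1} \mathbf{h_2}
\end{align*}
```

The common factor \(1/\lambda^2\) cancels, which is why neither \(\lambda\), \(\textbf{R}\) nor \(\mathbf{t}\) appears in the constraints.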

We can write \(\textbf{K}^{-T} \textbf{K}^{-1}\) as a new symmetric 3\(\,\times \,\)3 matrix \(\textbf{B}\), or equivalently represent it by the 6-tuple \(\textbf{b}\) of its distinct entries. From Eqs. 17 and 18 we can write \(\textbf{A} \textbf{b} = 0\), in which \(\textbf{A}\) is composed of the known homography values of the previous step and \(\textbf{b}\) is the vector of six unknowns to solve for. For n checkerboards, and thus n homographies, we now have 2n equations. Since \(\textbf{b}\) is only determined up to scale, it has five degrees of freedom, so we need at least three checkerboard positions. Once \(\textbf{b}\), and thus \(\textbf{B}\), is found, we can calculate \(\textbf{K}\) via a Cholesky decomposition of \(\textbf{B}\). From \(\textbf{K}\), we know all camera intrinsics such as skewness, scale factors, focal length and principal point.
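The linear step described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the function names, the ordering of the entries in `b`, and the sign fix before the Cholesky factorisation are all choices made here.

```python
import numpy as np

def v_ij(H, i, j):
    """Row v such that h_i^T B h_j = v . b, with b = (B11, B12, B22, B13, B23, B33)."""
    hi, hj = H[:, i], H[:, j]
    return np.array([
        hi[0] * hj[0],
        hi[0] * hj[1] + hi[1] * hj[0],
        hi[1] * hj[1],
        hi[2] * hj[0] + hi[0] * hj[2],
        hi[2] * hj[1] + hi[1] * hj[2],
        hi[2] * hj[2],
    ])

def intrinsics_from_homographies(homographies):
    """Recover K from >= 3 homographies by solving A b = 0 and factorising B."""
    rows = []
    for H in homographies:
        rows.append(v_ij(H, 0, 1))                  # h1^T B h2 = 0
        rows.append(v_ij(H, 0, 0) - v_ij(H, 1, 1))  # h1^T B h1 = h2^T B h2
    _, _, Vt = np.linalg.svd(np.array(rows))
    b = Vt[-1]
    B = np.array([[b[0], b[1], b[3]],
                  [b[1], b[2], b[4]],
                  [b[3], b[4], b[5]]])
    # b is only defined up to sign and scale; B must be positive definite.
    if B[0, 0] < 0:
        B = -B
    # B = L L^T with L lower triangular, so L equals K^{-T} up to a positive scale.
    L = np.linalg.cholesky(B)
    K = np.linalg.inv(L.T)
    return K / K[2, 2]
```

With noisy homographies the estimated `B` may fail to be positive definite, in which case `np.linalg.cholesky` raises `LinAlgError`. Normalising the point coordinates before estimating the homographies is a common way to improve conditioning.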

From Eqs. 15 and 16 we can determine \(\mathbf {r_1}\) and \(\mathbf {r_2}\). The scaling factor \(\lambda \) can be found by normalising \(\mathbf {r_1}\) and \(\mathbf {r_2}\) to unit length. Building on the orthogonality of the rotation matrix \(\textbf{R}\), we can write

\[ \mathbf{r_3} = \mathbf{r_1} \times \mathbf{r_2} \tag{19} \]

Lastly, we find

\[ \mathbf{t} = \frac{1}{\lambda } \textbf{K}^{-1} \mathbf{h_3} \tag{20} \]

Up until this point, we have assumed an ideal pinhole camera model. MATLAB and OpenCV use this as a first step in an iterative process in which they introduce extra intrinsic camera parameters to account for image distortion. After convergence, a compromise is found for all camera parameters.
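The closed-form extrinsic recovery described above, before any iterative refinement, can be sketched as follows. Several choices here are assumptions rather than part of the text: the function name, averaging the two column norms to estimate \(\lambda\), choosing the sign of \(\lambda\) so that the board lies in front of the camera, and the final projection onto the nearest rotation matrix.

```python
import numpy as np

def extrinsics_from_homography(H, K):
    """Recover (R, t) for one board pose from its homography H and intrinsics K."""
    # Columns of K^{-1} H equal lambda * [r1, r2, t].
    m1, m2, m3 = (np.linalg.inv(K) @ H).T
    # |r1| = |r2| = 1, so the column norms give |lambda|; average them for noisy data.
    lam = 0.5 * (np.linalg.norm(m1) + np.linalg.norm(m2))
    # Assumption: the board is in front of the camera, i.e. t_z > 0.
    if m3[2] < 0:
        lam = -lam
    r1, r2, t = m1 / lam, m2 / lam, m3 / lam
    r3 = np.cross(r1, r2)
    R = np.column_stack([r1, r2, r3])
    # With noise R is only approximately orthonormal; project onto the nearest rotation.
    U, _, Vt = np.linalg.svd(R)
    return U @ Vt, t
```

For noise-free input the projection step leaves `R` unchanged; with real data it removes the small departure from orthonormality that the column-wise estimate introduces.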

Simplified Zhang’s Method

In this work, we construct an ideal virtual GP-camera. The Gaussian processes capture all distortions and other imperfections in a pre-processing step. This means that the images of the checkerboards on the virtual image plane are projections of a perfect checkerboard, up to noise. This allows us to simplify Zhang’s method as follows.

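First, since there is no skewness in the virtual image plane and all virtual pixels are squares, we can rewrite the intrinsic camera matrix \(\textbf{K}\) as

\[ \textbf{K} = \begin{pmatrix} f & 0 & u_c \\ 0 & f & v_c \\ 0 & 0 & 1 \end{pmatrix} \tag{21} \]

This results in

\[ \textbf{B} = \textbf{K}^{-T} \textbf{K}^{-1} = \frac{1}{f^2} \begin{pmatrix} 1 & 0 & -u_c \\ 0 & 1 & -v_c \\ -u_c & -v_c & u_c^2 + v_c^2 + f^2 \end{pmatrix} \tag{22} \]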

The rest of the procedure is similar to Zhang’s method. We combine Eqs. 17, 18 and 22 into the system \(\textbf{A} \textbf{b} = 0\). The vector \(\textbf{b}\) now holds four unknowns instead of six. For n checkerboards, and thus n homographies, we still have 2n equations. Because \(\textbf{b}\) is only determined up to scale, it has three degrees of freedom, so two checkerboard positions suffice instead of three. As before, more positions provide more equations, and the resulting overdetermined system is solved via Singular Value Decomposition (SVD). Notice that the form of Eq. 22 is such that the intrinsics can be read off directly from the entries of \(\textbf{B}\), so we do not have to perform the Cholesky decomposition anymore.
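The four-unknown system can be sketched as follows, assuming a zero-skew, square-pixel matrix \(\textbf{K}\) with entries \(f\), \(u_c\) and \(v_c\), so that \(\textbf{B}\) has the distinct entries \(B_{11} = B_{22}\), \(B_{13}\), \(B_{23}\), \(B_{33}\) and \(B_{12} = 0\). The function names and the ordering of `b` are hypothetical; this is a sketch of the idea, not the authors' implementation.

```python
import numpy as np

def v4(H, i, j):
    """Row v such that h_i^T B h_j = v . b, with b = (B11, B13, B23, B33)."""
    hi, hj = H[:, i], H[:, j]
    return np.array([
        hi[0] * hj[0] + hi[1] * hj[1],   # B11 = B22, B12 = 0
        hi[0] * hj[2] + hi[2] * hj[0],   # B13
        hi[1] * hj[2] + hi[2] * hj[1],   # B23
        hi[2] * hj[2],                   # B33
    ])

def simplified_intrinsics(homographies):
    """Recover (f, u_c, v_c) from >= 2 homographies of an ideal pinhole camera."""
    rows = []
    for H in homographies:
        rows.append(v4(H, 0, 1))               # h1^T B h2 = 0
        rows.append(v4(H, 0, 0) - v4(H, 1, 1))  # h1^T B h1 = h2^T B h2
    _, _, Vt = np.linalg.svd(np.array(rows))
    B11, B13, B23, B33 = Vt[-1]
    # Ratios of entries are independent of the unknown scale and sign of b,
    # so no Cholesky factorisation is needed.
    u_c = -B13 / B11
    v_c = -B23 / B11
    f = np.sqrt(B33 / B11 - u_c**2 - v_c**2)
    return f, u_c, v_c
```

Two board positions already give four equations for the three degrees of freedom, provided the two poses are not degenerate (for example, two parallel board planes).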

Second, there is no need for a distortion model, nor for a converging iterative process. The camera calibration is reduced to a one-step analytical calculation.

Copyright information

© 2024 Springer Nature Switzerland AG

About this paper

Cite this paper

De Boi, I., Pathak, S., Oliveira, M., Penne, R. (2024). How to Turn Your Camera into a Perfect Pinhole Model. In: Vasconcelos, V., Domingues, I., Paredes, S. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2023. Lecture Notes in Computer Science, vol 14469. Springer, Cham. https://doi.org/10.1007/978-3-031-49018-7_7

DOI: https://doi.org/10.1007/978-3-031-49018-7_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-49017-0

Online ISBN: 978-3-031-49018-7

eBook Packages: Computer Science, Computer Science (R0)