Abstract

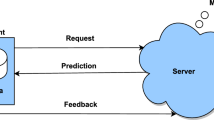

At present, many distributed and decentralized frameworks for federated learning algorithms are already available. However, developing such a framework for the smart Internet of Things (IoT) in edge systems is still an open challenge. A solution to that challenge, named the Python Testbed for Federated Learning Algorithms (PTB-FLA), appeared recently. PTB-FLA is written in pure Python and supports both centralized and decentralized algorithms, and its usage was validated and illustrated by three simple algorithm examples. In this paper, we present a federated learning algorithms development paradigm based on PTB-FLA. The paradigm comprises four phases, each named after the code it produces: (1) the sequential code, (2) the federated sequential code, (3) the federated sequential code with callbacks, and (4) the PTB-FLA code. The development paradigm is validated and illustrated in a case study on logistic regression, in which both centralized and decentralized algorithms are developed.
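To make the first three phases more concrete, here is a minimal, self-contained sketch of how logistic regression might evolve from sequential code into federated sequential code with callbacks. It is an illustration under stated assumptions, not the authors' code and not the PTB-FLA API: the callback names `client_update` and `server_aggregate`, the unweighted model averaging, the 4-node ring topology, and the synthetic data are all hypothetical choices made here. Phase 4 (the PTB-FLA code) is not reproduced, because it depends on PTB-FLA's own primitives.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, x):
    # w[0] is the bias term; w[1:] are the feature weights.
    return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))

def local_train(w, data, lr=0.1, epochs=50):
    """Batch gradient descent on the log-loss; returns new weights."""
    w = list(w)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y          # d(log-loss)/dz
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def accuracy(w, data):
    return sum((predict(w, x) >= 0.5) == (y == 1) for x, y in data) / len(data)

# Phase 1 (sequential code): one model trained on all the data.
def sequential(data, dim):
    return local_train([0.0] * (dim + 1), data, epochs=500)

# Phase 3 style callbacks (hypothetical names, not the PTB-FLA API).
def client_update(global_w, local_data):
    return local_train(global_w, local_data)

def server_aggregate(local_ws):
    # Simple unweighted average of the local models.
    n = len(local_ws)
    return [sum(ws) / n for ws in zip(*local_ws)]

# Phase 2 (federated sequential code), centralized variant:
# all nodes are simulated one after another in a single process.
def federated_sequential(partitions, dim, rounds=10):
    w = [0.0] * (dim + 1)
    for _ in range(rounds):
        local_ws = [client_update(w, part) for part in partitions]
        w = server_aggregate(local_ws)
    return w

# Decentralized variant: no server; each node averages its own model
# with its neighbours' models (assumed topology supplied by the caller).
def decentralized_sequential(partitions, neighbors, dim, rounds=10):
    ws = [[0.0] * (dim + 1) for _ in partitions]
    for _ in range(rounds):
        local = [client_update(ws[i], p) for i, p in enumerate(partitions)]
        ws = [server_aggregate([local[i]] + [local[j] for j in neighbors[i]])
              for i in range(len(partitions))]
    return ws

# Usage on synthetic, linearly separable 2-D data split across 4 nodes.
random.seed(0)
data = []
for _ in range(200):
    x = (random.uniform(-1, 1), random.uniform(-1, 1))
    data.append((x, 1 if x[0] + x[1] > 0 else 0))
partitions = [data[i::4] for i in range(4)]
ring = [[(i - 1) % 4, (i + 1) % 4] for i in range(4)]

w_seq = sequential(data, dim=2)
w_fed = federated_sequential(partitions, dim=2)
w_dec = decentralized_sequential(partitions, ring, dim=2)
print(accuracy(w_seq, data), accuracy(w_fed, data),
      [round(accuracy(w, data), 2) for w in w_dec])
```

The point of the sketch is the separation of concerns: the training logic stays in `local_train`, while the node-specific steps are isolated in callbacks, so that only the driver loops would need to change when moving to a real distributed runtime.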

Acknowledgements

Funded by the European Union (TaRDIS, 101093006). Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union. Neither the European Union nor the granting authority can be held responsible for them.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Popovic, M., Popovic, M., Kastelan, I., Djukic, M., Basicevic, I. (2024). A Federated Learning Algorithms Development Paradigm. In: Kofroň, J., Margaria, T., Seceleanu, C. (eds) Engineering of Computer-Based Systems. ECBS 2023. Lecture Notes in Computer Science, vol 14390. Springer, Cham. https://doi.org/10.1007/978-3-031-49252-5_4

DOI: https://doi.org/10.1007/978-3-031-49252-5_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-49251-8

Online ISBN: 978-3-031-49252-5

eBook Packages: Computer Science, Computer Science (R0)