Abstract

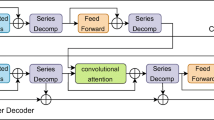

Accurate time series forecasting is a fundamental challenge in data science. Time series are often affected by external covariates, such as weather or human intervention, which in many applications can be predicted with reasonable accuracy; we refer to these as predicted future covariates. However, existing methods that forecast iteratively with auto-regressive models suffer from exponential error accumulation. Other strategies, which handle the past in the encoder and the future in the decoder, are limited because they treat past and future data separately. To address these limitations, we propose a novel feature representation strategy, shifting, which fuses past data and future covariates so that their interactions can be modeled. To extract complex dynamics in time series, we develop a parallel deep learning framework composed of an RNN and a CNN, both used in a hierarchical fashion. We also use skip connections to improve the model's performance. Extensive experiments on three datasets show the effectiveness of our method. Finally, we demonstrate the model's interpretability using the Grad-CAM algorithm.
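The abstract names shifting but does not spell out its mechanics. The sketch below is one plausible reading, stated as an assumption rather than the authors' implementation: each covariate column is shifted back by the forecast horizon `h`, so fused row `t` pairs the observed target `y_t` with the covariates `x_{t+h}` (observed in the past, predicted for the future). A sliding window over the fused rows then holds past observations and the covariates of the steps being forecast in a single input. The helper names `shift_fuse` and `make_windows` are hypothetical.

```python
from typing import List, Sequence, Tuple


def shift_fuse(target: Sequence[float],
               covariates: Sequence[Sequence[float]],
               horizon: int) -> List[List[float]]:
    """Hypothetical 'shifting': row t becomes [y_t, *x_{t+horizon}].

    An illustrative assumption, not the paper's code.
    """
    if horizon < 1 or len(covariates) != len(target):
        raise ValueError("need horizon >= 1 and equal-length series")
    # The last `horizon` target values have no covariate row h steps ahead,
    # so the fused series is `horizon` rows shorter than the input.
    return [[target[t], *covariates[t + horizon]]
            for t in range(len(target) - horizon)]


def make_windows(fused: List[List[float]],
                 target: Sequence[float],
                 window: int,
                 horizon: int) -> Tuple[List[List[List[float]]], List[List[float]]]:
    """Slice fused rows into (input window, next `horizon` targets) pairs."""
    inputs, labels = [], []
    for start in range(len(fused) - window + 1):
        end = start + window
        # Rows start..end-1 hold y_start..y_{end-1} and x_{start+h}..x_{end-1+h};
        # when window >= horizon, the last rows include x_end..x_{end+h-1},
        # i.e. the covariates for exactly the steps being predicted.
        inputs.append(fused[start:end])
        labels.append(list(target[end:end + horizon]))
    return inputs, labels


# Toy example: 10 steps, one covariate, horizon 2, window 3.
y = [float(i) for i in range(10)]
x = [[100.0 + i] for i in range(10)]
fused = shift_fuse(y, x, horizon=2)
inputs, labels = make_windows(fused, y, window=3, horizon=2)
print(inputs[0], labels[0])  # [[0.0, 102.0], [1.0, 103.0], [2.0, 104.0]] [3.0, 4.0]
```

In this toy run, the last two rows of the first window carry the covariates 103.0 and 104.0, which belong to the two target steps being predicted (3.0 and 4.0). Whether the paper aligns covariates exactly this way is not stated in this section.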

Acknowledgements

This work is part of the I-GUIDE project, which is funded by the National Science Foundation under award number 2118329.

7 Appendix

7.1 Model Explainability

We demonstrate the explainability of the trained model on the Water Stage dataset. The following figure shows how important each feature and each time step is for the final predictions.
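This section does not say which layer the heat map is taken from or how the map is laid out. As a hedged illustration, the sketch below applies the standard Grad-CAM recipe to a time step × feature grid: each channel weight `alpha_k` is the average gradient of the prediction `y` with respect to that channel's activation map `A^k`, and the heat map is `ReLU(sum_k alpha_k * A^k)`. It assumes the activations and gradients have already been extracted from a trained convolutional layer (for example, through framework hooks); the function name `grad_cam` and the nested-list layout are hypothetical.

```python
from typing import List

Grid = List[List[float]]  # one map indexed as [time_step][feature]


def grad_cam(activations: List[Grid], gradients: List[Grid],
             normalize: bool = False) -> Grid:
    """Grad-CAM heat map over a (time step x feature) grid.

    activations[k]: feature map A^k of channel k from the chosen conv layer.
    gradients[k]:   dy/dA^k, gradient of the prediction y w.r.t. A^k.
    """
    n_channels = len(activations)
    n_steps, n_feats = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradient over all grid positions.
    alphas = [sum(sum(row) for row in g) / (n_steps * n_feats) for g in gradients]
    # Weighted combination of channel maps, then ReLU to keep positive evidence.
    cam = [[max(0.0, sum(alphas[k] * activations[k][t][f]
                         for k in range(n_channels)))
            for f in range(n_feats)]
           for t in range(n_steps)]
    if normalize:
        # Scale to [0, 1] for plotting; skip if the map is all zeros.
        peak = max(max(row) for row in cam)
        if peak > 0:
            cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy check with two channels on a 2-step x 2-feature grid.
A = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 1.0], [1.0, 0.0]]]
G = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(A, G))                  # [[1.0, 0.0], [0.0, 2.0]]
print(grad_cam(A, G, normalize=True))  # [[0.5, 0.0], [0.0, 1.0]]
```

Rows of the result index time steps and columns index features, matching the per-feature, per-time-step importance described for the Water Stage model; real use would read `activations` and `gradients` from the trained network rather than from hand-written lists.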

7.2 Visualization of Time Series

We visualize the time series in Fig. 11 below, omitting the unit of each feature. We refer readers to Fig. 2 for further context.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Shi, J., Myana, R., Stebliankin, V., Shirali, A., Narasimhan, G. (2023). Explainable Parallel RCNN with Novel Feature Representation for Time Series Forecasting. In: Ifrim, G., et al. Advanced Analytics and Learning on Temporal Data. AALTD 2023. Lecture Notes in Computer Science, vol 14343. Springer, Cham. https://doi.org/10.1007/978-3-031-49896-1_5

DOI: https://doi.org/10.1007/978-3-031-49896-1_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-49895-4

Online ISBN: 978-3-031-49896-1

eBook Packages: Computer Science, Computer Science (R0)