Abstract

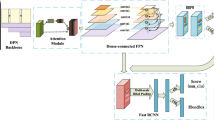

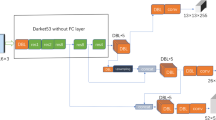

Existing remote sensing image recognition suffers from poor imaging quality, small sample sizes, and the difficulty of fully extracting hidden discriminative features with a single attention mechanism. To address these problems, this paper proposes a method for detecting regional destruction in remote sensing images based on MA-CapsNet (multi-attention capsule encoder-decoder network). The method first applies the BSRGAN model for super-resolution of the original destruction data and expands the processed images with several data augmentation operations. The MA-CapsNet network then extracts lower-level features using a cascaded attention mechanism consisting of Swin-transformer and the Convolutional Block Attention Module (CBAM). Finally, after the CapsNet module captures precise target features, the feature map is fed into the classifier to complete the detection of the destroyed area. In destruction area detection experiments on remote sensing images taken after the 2010 Haiti earthquake, the MA-CapsNet model reaches an accuracy of 99.64%, which is better than that of current state-of-the-art models such as ResNet and Vision Transformer (ViT), and of the ablation network variants. The method improves the model's representation ability and addresses the low accuracy of destruction area detection in remote sensing images with complex backgrounds, which offers theoretical guidance for rapid assessment of destruction and damage from remote sensing imagery.
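
The abstract does not say how the CapsNet module turns capsule outputs into a class decision. As a rough sketch only, assuming the module follows the standard dynamic-routing capsule formulation (Sabour et al., "Dynamic routing between capsules"), each class capsule is passed through the squash nonlinearity and its vector length is read as a class score. The function names, the toy vectors, and the labels `intact` and `destroyed` are illustrative assumptions, not taken from the paper.

```python
import math


def squash(s, eps=1e-9):
    """Squash nonlinearity from the dynamic-routing capsule formulation.

    Keeps the direction of s and maps its length into [0, 1):
        v = (|s|^2 / (1 + |s|^2)) * (s / |s|)
    """
    sq_norm = sum(x * x for x in s)
    norm = math.sqrt(sq_norm)
    # eps avoids division by zero for an all-zero capsule.
    scale = sq_norm / (1.0 + sq_norm) / (norm + eps)
    return [scale * x for x in s]


def capsule_length(v):
    """Euclidean length of a capsule vector."""
    return math.sqrt(sum(x * x for x in v))


def predict(capsules, labels):
    """Return the label whose squashed capsule is longest, plus all lengths.

    `capsules` holds one raw (pre-squash) vector per class; both the
    vectors and the labels are hypothetical inputs for illustration.
    """
    lengths = [capsule_length(squash(c)) for c in capsules]
    best = max(range(len(lengths)), key=lengths.__getitem__)
    return labels[best], lengths


if __name__ == "__main__":
    # Two toy class capsules: a weak one and a strong one.
    label, lengths = predict([[0.1, 0.0], [3.0, 4.0]], ["intact", "destroyed"])
    print(label, [round(x, 4) for x in lengths])
```

Because squashing bounds every length below 1, the lengths can be compared across classes or thresholded directly. In a full model the raw capsule vectors would come from the attention-refined feature map rather than from hand-written lists.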

Acknowledgement

This work is sponsored by the National Natural Science Foundation of P. R. China (No. 62102194 and No. 62102196), the Six Talent Peaks Project of Jiangsu Province (No. RJFW-111), and the Postgraduate Research and Practice Innovation Program of Jiangsu Province (No. KYCX20_0759, No. KYCX21_0787, No. KYCX21_0788, No. KYCX21_0799, and No. KYCX22_1019).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Sun, H., Sun, Y., Li, P., Xu, H. (2024). Multi-attention Integration Mechanism for Region Destruction Detection of Remote Sensing Images. In: Sheng, B., Bi, L., Kim, J., Magnenat-Thalmann, N., Thalmann, D. (eds) Advances in Computer Graphics. CGI 2023. Lecture Notes in Computer Science, vol 14497. Springer, Cham. https://doi.org/10.1007/978-3-031-50075-6_16

DOI: https://doi.org/10.1007/978-3-031-50075-6_16

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-50074-9

Online ISBN: 978-3-031-50075-6

eBook Packages: Computer Science, Computer Science (R0)