Abstract

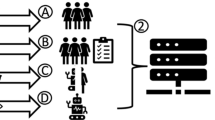

Privacy-preserving AI algorithms are widely adopted in various domains, but their lack of transparency can raise accountability issues. Auditing algorithms can address this problem, yet machine-based audit approaches are often costly and time-consuming. Herd audit offers an alternative by harnessing collective intelligence. However, epistemic disparity among auditors, who differ in expertise and access to knowledge, may affect audit performance. An effective herd audit establishes a credible accountability threat for algorithm developers, incentivizing them to uphold their claims. In this study, we develop a systematic framework that examines the impact of herd audit on algorithm developers using a Stackelberg game approach. The optimal strategy for auditors emphasizes the importance of easy access to relevant information, which increases the auditors’ confidence in the audit process. Similarly, the optimal choice for developers suggests that herd audit is viable when auditors face lower costs in acquiring knowledge. By enhancing transparency and accountability, herd audit contributes to the responsible development of privacy-preserving algorithms.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yang, YT., Zhang, T., Zhu, Q. (2023). A Game-Theoretic Analysis of Auditing Differentially Private Algorithms with Epistemically Disparate Herd. In: Fu, J., Kroupa, T., Hayel, Y. (eds) Decision and Game Theory for Security. GameSec 2023. Lecture Notes in Computer Science, vol 14167. Springer, Cham. https://doi.org/10.1007/978-3-031-50670-3_18

DOI: https://doi.org/10.1007/978-3-031-50670-3_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-50669-7

Online ISBN: 978-3-031-50670-3

eBook Packages: Computer Science (R0)