Abstract

Due to the proliferation of malware, defenders are increasingly turning to automation and machine learning as part of the malware detection toolchain. However, machine learning models are susceptible to adversarial attacks, so the robustness of models and products must be tested. Meanwhile, attackers also seek to automate malware generation and the evasion of antivirus systems, and defenders try to gain insight into their methods. This work proposes a new algorithm that combines Malware Evasion and Model Extraction (MEME) attacks. MEME uses model-based reinforcement learning to adversarially modify Windows executable binary samples while simultaneously training a surrogate model that has high agreement with the target model being evaded. To evaluate this method, we compare it with two state-of-the-art attacks in adversarial malware creation, using three well-known published models and one antivirus product as targets. Results show that MEME outperforms the state-of-the-art methods in terms of evasion capabilities in almost all cases, producing evasive malware with an evasion rate in the range of 32–73%. It also produces surrogate models with a prediction label agreement of 97–99% with the respective target models. The surrogate could be used to fine-tune and improve the evasion rate in the future.
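The two headline metrics can be made concrete with a short sketch. The definitions used here are assumptions rather than the paper's own code: the evasion rate is taken as the fraction of modified malware samples that the target labels benign, and the label agreement as the fraction of samples on which the surrogate and the target predict the same label. The function names and the 0/1 label encoding are hypothetical.

```python
from typing import Sequence

# Assumed label encoding (not taken from the paper).
BENIGN, MALICIOUS = 0, 1


def evasion_rate(target_labels_after_attack: Sequence[int]) -> float:
    """Fraction of modified malware samples that the target labels benign."""
    if not target_labels_after_attack:
        raise ValueError("need at least one sample")
    evaded = sum(1 for label in target_labels_after_attack if label == BENIGN)
    return evaded / len(target_labels_after_attack)


def label_agreement(surrogate_labels: Sequence[int],
                    target_labels: Sequence[int]) -> float:
    """Fraction of samples where surrogate and target predict the same label."""
    if len(surrogate_labels) != len(target_labels):
        raise ValueError("label sequences must have the same length")
    if not target_labels:
        raise ValueError("need at least one sample")
    matches = sum(1 for s, t in zip(surrogate_labels, target_labels) if s == t)
    return matches / len(target_labels)


if __name__ == "__main__":
    # Toy labels for illustration only; these are not results from the paper.
    target_after_attack = [0, 1, 0, 0, 1, 1, 0, 1, 1, 1]
    print(evasion_rate(target_after_attack))          # 4 of 10 labelled benign

    surrogate = [1, 0, 1, 1, 0, 0, 1, 0]
    target = [1, 0, 1, 1, 0, 1, 1, 0]
    print(label_agreement(surrogate, target))         # 7 of 8 labels match
```

Under these definitions, an evasion rate of 32–73% means that roughly one third to three quarters of the modified samples are labelled benign by the target.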

References

Akiba, T., Sano, S., Yanase, T., Ohta, T., Koyama, M.: Optuna: a next-generation hyperparameter optimization framework. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. pp. 2623–2631. KDD 2019, Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3292500.3330701

Anderson, H.S., Kharkar, A., Filar, B., Evans, D., Roth, P.: Learning to evade static PE machine learning malware models via reinforcement learning (2018). https://doi.org/10.48550/arXiv.1801.08917, arXiv:1801.08917

Anderson, H.S., Roth, P.: EMBER: an open dataset for training static PE malware machine learning models (2018). https://doi.org/10.48550/arXiv.1804.04637, arXiv:1804.04637

Bergstra, J., Bardenet, R., Bengio, Y., Kégl, B.: Algorithms for hyper-parameter optimization. In: Advances in Neural Information Processing Systems, vol. 24. Curran Associates, Inc. (2011)

Brockman, G., et al.: OpenAI gym (2016). https://doi.org/10.48550/arXiv.1606.01540, arXiv:1606.01540

Ceschin, F., Botacin, M., Gomes, H.M., Oliveira, L.S., Grégio, A.: Shallow security: on the creation of adversarial variants to evade machine learning-based malware detectors. In: Proceedings of the 3rd Reversing and Offensive-oriented Trends Symposium, pp. 1–9. ROOTS 2019, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3375894.3375898

Chandrasekaran, V., Chaudhuri, K., Giacomelli, I., Jha, S., Yan, S.: Exploring connections between active learning and model extraction. In: Proceedings of the 29th USENIX Conference on Security Symposium, pp. 1309–1326. SEC 2020, USENIX Association, USA (2020)

Correia-Silva, J.R., Berriel, R.F., Badue, C., de Souza, A.F., Oliveira-Santos, T.: Copycat CNN: stealing knowledge by persuading confession with random non-labeled data. In: 2018 International Joint Conference on Neural Networks (IJCNN), pp. 1–8 (2018). iSSN: 2161–4407

Demetrio, L., Biggio, B., Lagorio, G., Roli, F., Armando, A.: Functionality-preserving black-box optimization of adversarial windows malware. IEEE Trans. Inf. Forensics Secur. 16, 3469–3478 (2021). https://doi.org/10.1109/TIFS.2021.3082330

Demetrio, L., Coull, S.E., Biggio, B., Lagorio, G., Armando, A., Roli, F.: Adversarial exemples: a survey and experimental evaluation of practical attacks on machine learning for windows malware detection. ACM Trans. Priv. Secur. 24(4), 1–31 (2021)

Dowling, S., Schukat, M., Barrett, E.: Using reinforcement learning to conceal honeypot functionality. In: Brefeld, U., Curry, E., Daly, E., MacNamee, B., Marascu, A., Pinelli, F., Berlingerio, M., Hurley, N. (eds.) Machine Learning and Knowledge Discovery in Databases, pp. 341–355. Lecture Notes in Computer Science, Springer International Publishing, Cham (2019). https://doi.org/10.1007/978-3-030-10997-4_21

Fang, Y., Zeng, Y., Li, B., Liu, L., Zhang, L.: DeepDetectNet vs RLAttackNet: an adversarial method to improve deep learning-based static malware detection model. PLoS ONE 15(4), e0231626 (2020). https://doi.org/10.1371/journal.pone.0231626

Fang, Z., Wang, J., Geng, J., Kan, X.: Feature selection for malware detection based on reinforcement learning. IEEE Access 7, 176177–176187 (2019). https://doi.org/10.1109/ACCESS.2019.2957429

Fang, Z., Wang, J., Li, B., Wu, S., Zhou, Y., Huang, H.: Evading anti-malware engines with deep reinforcement learning. IEEE Access 7, 48867–48879 (2019). https://doi.org/10.1109/ACCESS.2019.2908033

Harang, R., Rudd, E.M.: SOREL-20M: a large scale benchmark dataset for malicious PE detection. arXiv:2012.07634 (2020)

Hu, W., Tan, Y.: Generating adversarial malware examples for black-box attacks based on GAN. In: Tan, Y., Shi, Y. (eds.) Data Mining and Big Data, pp. 409–423. Communications in Computer and Information Science, Springer Nature, Singapore (2022). https://doi.org/10.1007/978-981-19-8991-9_29

Huang, L., Zhu, Q.: Adaptive honeypot engagement through reinforcement learning of semi-markov decision processes. In: Decision and Game Theory for Security, pp. 196–216. Lecture Notes in Computer Science, Springer International Publishing, Cham (2019). https://doi.org/10.1007/978-3-030-32430-8_13

Institute, A.T.: AV-ATLAS - Malware & PUA (2023). https://portal.av-atlas.org/malware

Jagielski, M., Carlini, N., Berthelot, D., Kurakin, A., Papernot, N.: High Accuracy and High Fidelity Extraction of Neural Networks. In: SEC 2020: Proceedings of the 29th USENIX Conference on Security Symposium, pp. 1345–1362 (2020)

Ke, G., et al.: LightGBM: a highly efficient gradient boosting decision tree. In: Advances in Neural Information Processing Systems, vol. 30. Curran Associates, Inc. (2017)

Kurutach, T., Clavera, I., Duan, Y., Tamar, A., Abbeel, P.: Model-ensemble trust-region policy optimization. In: International Conference on Learning Representations (2018)

Labaca-Castro, R., Franz, S., Rodosek, G.D.: AIMED-RL: exploring adversarial malware examples with reinforcement learning. In: Dong, Y., Kourtellis, N., Hammer, B., Lozano, J.A. (eds.) Machine Learning and Knowledge Discovery in Databases. Applied Data Science Track, pp. 37–52. Lecture Notes in Computer Science, Springer International Publishing, Cham (2021). https://doi.org/10.1007/978-3-030-86514-6_3

Li, D., Li, Q., Ye, Y.F., Xu, S.: Arms race in adversarial malware detection: a survey. ACM Comput. Surv. 55(1), 15:1-15:35 (2021)

Li, X., Li, Q.: An IRL-based malware adversarial generation method to evade anti-malware engines. Comput. Secur. 104, 102118 (2021). https://doi.org/10.1016/j.cose.2020.102118

Ling, X., et al.: Adversarial attacks against Windows PE malware detection: a survey of the state-of-the-art. Comput. Secur. 128, 103134 (2023). https://doi.org/10.1016/j.cose.2023.103134

Lundberg, S.M., Lee, S.I.: A unified approach to interpreting model predictions. In: Advances in Neural Information Processing Systems, vol. 30. Curran Associates, Inc. (2017)

Nguyen, T.T., Reddi, V.J.: Deep reinforcement learning for cyber security. IEEE Trans. Neural Networks Learn. Syst. 34, 3779–3795 (2021). https://doi.org/10.1109/TNNLS.2021.3121870

Orekondy, T., Schiele, B., Fritz, M.: Knockoff Nets: stealing functionality of black-box models. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4954–4963 (2019)

Pal, S., Gupta, Y., Shukla, A., Kanade, A., Shevade, S., Ganapathy, V.: ActiveThief: model extraction using active learning and unannotated public data. Proc. AAAI Conf. Artif. Intell. 34(01), 865–872 (2020). https://doi.org/10.1609/aaai.v34i01.5432

Phan, T.D., Duc Luong, T., Hoang Quoc An, N., Nguyen Huu, Q., Nghi, H.K., Pham, V.H.: Leveraging reinforcement learning and generative adversarial networks to craft mutants of windows malware against black-box malware detectors. In: Proceedings of the 11th International Symposium on Information and Communication Technology. pp. 31–38. SoICT 2022, Association for Computing Machinery, New York, NY, USA (2022)

Quertier, T., Marais, B., Morucci, S., Fournel, B.: MERLIN - malware evasion with reinforcement LearnINg. arXiv:2203.12980 (2022)

Raffin, A., Hill, A., Gleave, A., Kanervisto, A., Ernestus, M., Dormann, N.: Stable-Baselines3: reliable reinforcement learning implementations. J. Mach. Learn. Res. 22(268), 1–8 (2021)

Rigaki, M., Garcia, S.: Stealing and evading malware classifiers and antivirus at low false positive conditions. Comput. Secur. 129, 103192 (2023). https://doi.org/10.1016/j.cose.2023.103192

Rosenberg, I., Meir, S., Berrebi, J., Gordon, I., Sicard, G., Omid David, E.: Generating end-to-end adversarial examples for malware classifiers using explainability. In: 2020 International Joint Conference on Neural Networks (IJCNN), pp. 1–10 (2020). https://doi.org/10.1109/IJCNN48605.2020.9207168, iSSN: 2161-4407

Sanyal, S., Addepalli, S., Babu, R.V.: Towards data-free model stealing in a hard label setting. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 15284–15293 (2022)

Schulman, J., Wolski, F., Dhariwal, P., Radford, A., Klimov, O.: Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347 (2017)

Security.org, T.: 2023 Antivirus market annual report (2023). https://www.security.org/antivirus/antivirus-consumer-report-annual/

Severi, G., Meyer, J., Coull, S., Oprea, A.: Explanation-guided backdoor poisoning attacks against malware classifiers. In: 30th USENIX Security Symposium (USENIX Security 21), pp. 1487–1504. USENIX Association (2021)

Song, W., Li, X., Afroz, S., Garg, D., Kuznetsov, D., Yin, H.: MAB-malware: a reinforcement learning framework for blackbox generation of adversarial malware. In: Proceedings of the 2022 ACM on Asia Conference on Computer and Communications Security, pp. 990–1003. ASIA CCS 2022, Association for Computing Machinery, New York, NY, USA (2022). https://doi.org/10.1145/3488932.3497768

Sussman, B.: New malware is born every minute (2023). https://blogs.blackberry.com/en/2023/05/new-malware-born-every-minute

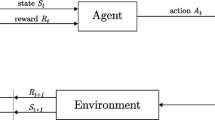

Sutton, R.S., Barto, A.G.: Reinforcement Learning, second edition: An Introduction. MIT Press (2018)

Total, V.: VirusTotal - Stats. https://www.virustotal.com/gui/stats

Uprety, A., Rawat, D.B.: Reinforcement learning for IoT security: a comprehensive survey. IEEE Internet Things J. 8(11), 8693–8706 (2021). https://doi.org/10.1109/JIOT.2020.3040957

Wu, C., Shi, J., Yang, Y., Li, W.: Enhancing machine learning based malware detection model by reinforcement learning. In: Proceedings of the 8th International Conference on Communication and Network Security, pp. 74–78. ICCNS 2018, Association for Computing Machinery, New York, NY, USA (Nov 2018). https://doi.org/10.1145/3290480.3290494

Yu, H., Yang, K., Zhang, T., Tsai, Y.Y., Ho, T.Y., Jin, Y.: CloudLeak: large-scale deep learning models stealing through adversarial examples. In: Proceedings 2020 Network and Distributed System Security Symposium. Internet Society, San Diego, CA (2020)

Zolotukhin, M., Kumar, S., Hämäläinen, T.: Reinforcement learning for attack mitigation in SDN-enabled networks. In: 2020 6th IEEE Conference on Network Softwarization (NetSoft), pp. 282–286 (2020). https://doi.org/10.1109/NetSoft48620.2020.9165383

Šembera, V., Paquet-Clouston, M., Garcia, S., Erquiaga, M.J.: Cybercrime specialization: an exposé of a malicious android obfuscation-as-a-service. In: 2021 IEEE European Symposium on Security and Privacy Workshops (EuroS &PW, pp. 213–236 (2021). https://doi.org/10.1109/EuroSPW54576.2021.00029

Acknowledgments

The authors acknowledge support from the Strategic Support for the Development of Security Research in the Czech Republic 2019–2025 (IMPAKT 1) program of the Ministry of the Interior of the Czech Republic, under No. VJ02010020 – AI-Dojo: Multi-agent testbed for the research and testing of AI-driven cyber security technologies. The authors also thank NVIDIA Corporation for donating the Titan V GPU used for this research.

Appendix

A. Hyper-parameter Tuning

The search space for the PPO hyper-parameters:

- gamma: 0.01 - 0.75
- max_grad_norm: 0.3 - 5.0
- learning_rate: 0.001 - 0.1
- activation function: ReLU or Tanh
- neural network size: small or medium

Selected parameters: gamma=0.854, learning_rate=0.00138, max_grad_norm=0.4284, activation function=Tanh, small network size (2 layers with 64 units each).
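As an illustration of how such a search space might be explored, here is a minimal random-search sampler that uses only the Python standard library. It is a sketch under stated assumptions. The text above does not say how candidates were drawn, so the uniform sampling of gamma and max_grad_norm and the log-uniform sampling of learning_rate are assumptions. The dictionary keys `activation_fn` and `net_arch` and the function name `sample_ppo_config` are hypothetical names chosen for this example.

```python
import math
import random

# Ranges copied from the PPO search space listed above.
# The choice of sampling distributions below is an assumption.
PPO_SEARCH_SPACE = {
    "gamma": (0.01, 0.75),
    "max_grad_norm": (0.3, 5.0),
    "learning_rate": (0.001, 0.1),
    "activation_fn": ("ReLU", "Tanh"),   # hypothetical key name
    "net_arch": ("small", "medium"),     # hypothetical key name
}


def sample_ppo_config(rng: random.Random) -> dict:
    """Draw one candidate configuration from the search space."""
    lr_low, lr_high = PPO_SEARCH_SPACE["learning_rate"]
    # Log-uniform draw so that small learning rates are not under-sampled.
    learning_rate = math.exp(rng.uniform(math.log(lr_low), math.log(lr_high)))
    # Clamp to guard against floating-point overshoot at the interval ends.
    learning_rate = min(max(learning_rate, lr_low), lr_high)
    return {
        "gamma": rng.uniform(*PPO_SEARCH_SPACE["gamma"]),
        "max_grad_norm": rng.uniform(*PPO_SEARCH_SPACE["max_grad_norm"]),
        "learning_rate": learning_rate,
        "activation_fn": rng.choice(PPO_SEARCH_SPACE["activation_fn"]),
        "net_arch": rng.choice(PPO_SEARCH_SPACE["net_arch"]),
    }


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the draws are reproducible
    for _ in range(3):
        print(sample_ppo_config(rng))
```

Each sampled dictionary would then be passed to whatever routine trains and scores a PPO agent. That routine is outside the scope of this sketch.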

The search space for the LGB surrogate training hyper-parameters:

- alpha: 1 - 1,000
- num_boosting_rounds: 100 - 2,000
- learning_rate: 0.001 - 0.1
- num_leaves: 128 - 2,048
- max_depth: 5 - 16
- min_child_samples: 5 - 100
- feature_fraction: 0.4 - 1.0

Selected parameters for the LGB surrogate can be found in Table 4.
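A small range check can help when transcribing or reusing this search space. The sketch below keeps the parameter names exactly as they are written in the list above. How they map onto LightGBM's own parameter names is not stated here, so no mapping is attempted. The function name `out_of_range` and the example configuration are hypothetical, and the example values are made up for illustration. They are not the values from Table 4.

```python
from typing import Dict, List, Tuple, Union

Number = Union[int, float]

# Ranges copied from the LGB surrogate search space listed above
# (inclusive bounds are an assumption).
LGB_SEARCH_SPACE: Dict[str, Tuple[Number, Number]] = {
    "alpha": (1, 1000),
    "num_boosting_rounds": (100, 2000),
    "learning_rate": (0.001, 0.1),
    "num_leaves": (128, 2048),
    "max_depth": (5, 16),
    "min_child_samples": (5, 100),
    "feature_fraction": (0.4, 1.0),
}


def out_of_range(config: Dict[str, Number]) -> List[str]:
    """Return the names of parameters that are missing or outside the space."""
    problems = []
    for name, (low, high) in LGB_SEARCH_SPACE.items():
        value = config.get(name)
        if value is None or not (low <= value <= high):
            problems.append(name)
    return problems


if __name__ == "__main__":
    # Made-up candidate for illustration; not the configuration in Table 4.
    candidate = {
        "alpha": 10,
        "num_boosting_rounds": 500,
        "learning_rate": 0.05,
        "num_leaves": 256,
        "max_depth": 8,
        "min_child_samples": 20,
        "feature_fraction": 0.8,
    }
    print(out_of_range(candidate))                        # every value is in range
    print(out_of_range({**candidate, "num_leaves": 64}))  # num_leaves is too small
```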

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Rigaki, M., Garcia, S. (2024). The Power of MEME: Adversarial Malware Creation with Model-Based Reinforcement Learning. In: Tsudik, G., Conti, M., Liang, K., Smaragdakis, G. (eds) Computer Security – ESORICS 2023. ESORICS 2023. Lecture Notes in Computer Science, vol 14347. Springer, Cham. https://doi.org/10.1007/978-3-031-51482-1_3

DOI: https://doi.org/10.1007/978-3-031-51482-1_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-51481-4

Online ISBN: 978-3-031-51482-1

eBook Packages: Computer Science, Computer Science (R0)