Abstract

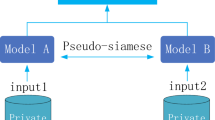

With the rapid development of deep learning, clients increasingly perform split learning with an untrusted cloud server. The model is split into a client-side part and a server-side part, and intermediate features are transmitted between them. However, these features are typically vulnerable to attribute inference attacks that reveal sensitive attributes of the input data. Most existing schemes protect data privacy at the inference stage but not at the training stage. Removing private information from the features while still accomplishing the learning task with high utility remains a significant challenge.

We find that the fundamental issue is that utility and privacy are largely conflicting objectives, which the linear scalarization commonly used in previous works handles poorly. We therefore resort to the multi-objective optimization (MOO) paradigm and seek a Pareto optimal solution with respect to the utility and privacy objectives. The privacy objective is formulated as the mutual information between the features and the sensitive attributes, and is approximated with Gaussian models. In each training iteration, we select a direction that balances two goals, moving toward the Pareto front and moving toward the users' preference, while keeping the privacy loss below a preset threshold. With a theoretical guarantee, the privacy of sensitive attributes is preserved throughout training and at convergence. Experimental results on image and tabular datasets show that our method outperforms the state of the art in terms of both utility and privacy.
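The limitation of linear scalarization mentioned above can be illustrated with a toy example that is not taken from the paper: three hypothetical (utility loss, privacy loss) pairs, all Pareto optimal, where the balanced trade-off point lies above the line joining the two extreme points. Minimizing a weighted sum w·f1 + (1 − w)·f2 never selects that point for any weight w, which is one motivation for searching the Pareto front directly. The candidate values and function names below are illustrative assumptions.

```python
# Toy illustration (not from the paper): hypothetical (utility_loss, privacy_loss) pairs.
CANDIDATES = {
    "A": (0.0, 1.0),         # best utility, worst privacy
    "B": (1.0, 0.0),         # worst utility, best privacy
    "balanced": (0.6, 0.6),  # trade-off point in a non-convex part of the front
}


def dominates(p, q):
    """True if loss vector p is no worse than q everywhere and strictly better somewhere."""
    return all(x <= y for x, y in zip(p, q)) and any(x < y for x, y in zip(p, q))


def pareto_set(points):
    """Names of candidates that no other candidate dominates."""
    return {
        name for name, p in points.items()
        if not any(dominates(q, p) for q in points.values())
    }


def scalarization_winners(points, steps=100):
    """Candidates minimizing w*f1 + (1-w)*f2 for some weight w on a grid over [0, 1]."""
    winners = set()
    for i in range(steps + 1):
        w = i / steps
        winners.add(min(points, key=lambda k: w * points[k][0] + (1 - w) * points[k][1]))
    return winners


print(sorted(pareto_set(CANDIDATES)))             # ['A', 'B', 'balanced']
print(sorted(scalarization_winners(CANDIDATES)))  # ['A', 'B']
```

Since min(w, 1 − w) ≤ 0.5 < 0.6 for every w, the balanced point can never win the weighted sum, even though no other candidate dominates it.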


References

[1] Doersch, C.: Tutorial on variational autoencoders. arXiv preprint arXiv:1606.05908 (2016)

[2] Duong, T., Hazelton, M.L.: Convergence rates for unconstrained bandwidth matrix selectors in multivariate kernel density estimation. J. Multivar. Anal. 93(2), 417–433 (2005)

[3] Gupta, O., Raskar, R.: Distributed learning of deep neural network over multiple agents. J. Netw. Comput. Appl. 116, 1–8 (2018)

[4] Hershey, J.R., Olsen, P.A.: Approximating the Kullback Leibler divergence between Gaussian mixture models. In: 2007 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2007), vol. 4, pp. IV–317. IEEE (2007)

[5] Hillermeier, C.: Nonlinear Multiobjective Optimization: A Generalized Homotopy Approach, vol. 135. Springer Science & Business Media, Cham (2001)

[6] Jia, J., Gong, N.Z.: AttriGuard: a practical defense against attribute inference attacks via adversarial machine learning. In: 27th USENIX Security Symposium (USENIX Security 18), pp. 513–529 (2018)

[7] Kosinski, M., Stillwell, D., Graepel, T.: Private traits and attributes are predictable from digital records of human behavior. Proc. Natl. Acad. Sci. 110(15), 5802–5805 (2013)

[8] Li, A., Duan, Y., Yang, H., Chen, Y., Yang, J.: TIPRDC: task-independent privacy-respecting data crowdsourcing framework for deep learning with anonymized intermediate representations. In: Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 824–832 (2020)

[9] Liu, S., Du, J., Shrivastava, A., Zhong, L.: Privacy adversarial network: representation learning for mobile data privacy. Proc. ACM Interact. Mob. Wear. Ubiquit. Technol. 3(4), 1–18 (2019)

[10] Mahapatra, D., Rajan, V.: Multi-task learning with user preferences: gradient descent with controlled ascent in Pareto optimization. In: International Conference on Machine Learning, pp. 6597–6607. PMLR (2020)

[11] Mahapatra, D., Rajan, V.: Exact Pareto optimal search for multi-task learning: touring the Pareto front. arXiv preprint arXiv:2108.00597 (2021)

[12] Moyer, D., Gao, S., Brekelmans, R., Galstyan, A., Ver Steeg, G.: Invariant representations without adversarial training. In: Advances in Neural Information Processing Systems, vol. 31 (2018)

[13] Osia, S.A., Taheri, A., Shamsabadi, A.S., Katevas, K., Haddadi, H., Rabiee, H.R.: Deep private-feature extraction. IEEE Trans. Knowl. Data Eng. 32(1), 54–66 (2018)

[14] Pasquini, D., Ateniese, G., Bernaschi, M.: Unleashing the tiger: inference attacks on split learning. In: Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, pp. 2113–2129 (2021)

[15] Pittaluga, F., Koppal, S., Chakrabarti, A.: Learning privacy preserving encodings through adversarial training. In: 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 791–799. IEEE (2019)

[16] Rivest, R.L., Shamir, A., Adleman, L.: A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 21(2), 120–126 (1978)

[17] Salamatian, S., et al.: Managing your private and public data: bringing down inference attacks against your privacy. IEEE J. Select. Top. Signal Process. 9(7), 1240–1255 (2015)

[18] Sener, O., Koltun, V.: Multi-task learning as multi-objective optimization. In: Advances in Neural Information Processing Systems, vol. 31 (2018)

[19] Song, C., Shmatikov, V.: Overlearning reveals sensitive attributes. In: 8th International Conference on Learning Representations, ICLR 2020 (2020)

[20] Weinsberg, U., Bhagat, S., Ioannidis, S., Taft, N.: BlurMe: inferring and obfuscating user gender based on ratings. In: Proceedings of the Sixth ACM Conference on Recommender Systems, pp. 195–202 (2012)

[21] D Xiaochen, Z.: Feature inference attacks on split learning with an honest-but-curious server, Ph.D. thesis, National University of Singapore (2022)

[22] Xie, Q., Dai, Z., Du, Y., Hovy, E., Neubig, G.: Controllable invariance through adversarial feature learning. In: Advances in Neural Information Processing Systems, vol. 30 (2017)

[23] Yang, T.Y., Brinton, C., Mittal, P., Chiang, M., Lan, A.: Learning informative and private representations via generative adversarial networks. In: 2018 IEEE International Conference on Big Data (Big Data), pp. 1534–1543. IEEE (2018)

[24] Yao, A.C.: Protocols for secure computations. In: 23rd Annual Symposium on Foundations of Computer Science (SFCS 1982), pp. 160–164. IEEE (1982)

[25] Zheng, T., Li, B.: InfoCensor: an information-theoretic framework against sensitive attribute inference and demographic disparity. In: Proceedings of the 2022 ACM on Asia Conference on Computer and Communications Security, pp. 437–451 (2022)

Acknowledgements

This work was supported in part by NSF China (62272306, 62136006, 62032020), and a specialized technology project for the pre-research of generic information system equipment (31511130302).


Appendices

A Notations

Table 6 lists all notation used in this paper.

B Proof of Loss Formulation

Proof

Lower bound of I(z; y). According to [13], for any conditional distribution q(y|z), it holds that

\[ I(z;y) = H(y) - H(y|z) \ge H(y) + \mathbb{E}_{p(z,y)}\left[\log q(y|z)\right]. \]

Hence the lower bound of I(z; y) can be defined as

\[ \mathcal{L} = H(y) + \mathbb{E}_{p(z,y)}\left[\log q_{\phi}(y|z)\right]. \]

The model with parameter \(\phi \) is exactly the classifier that performs the original task on the uploaded feature z. For a fixed z, the better the classifier is trained, the tighter this bound becomes. Since H(y) is a constant, we only need to optimize the second term of \(\mathcal {L}\), which amounts to minimizing the cross-entropy loss. Denoting the cross-entropy loss by \(CE(\cdot )\), the utility loss can be defined as:

\[ \mathbb{E}_{p(z,y)}\left[CE\left(q_{\phi}(\cdot|z),\, y\right)\right]. \]
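The variational lower bound on I(z; y) described above can be checked on a small discrete example. The sketch below uses a made-up joint distribution p(z, y) over three feature values and two labels, computes the exact I(z; y), and evaluates H(y) + E[log q(y|z)], which equals H(y) minus the expected cross-entropy of the classifier q. Any classifier gives a value no larger than I(z; y), and the exact posterior p(y|z) makes the bound tight; this is why training the classifier with cross-entropy tightens the utility term. The distribution and all names are illustrative assumptions.

```python
import math

# Hypothetical joint distribution p(z, y): 3 feature values (rows) x 2 labels (columns).
P_ZY = [
    [0.30, 0.05],
    [0.10, 0.20],
    [0.05, 0.30],
]


def marginals(p_zy):
    p_z = [sum(row) for row in p_zy]
    p_y = [sum(col) for col in zip(*p_zy)]
    return p_z, p_y


def entropy(p):
    return -sum(v * math.log(v) for v in p if v > 0)


def mutual_information(p_zy):
    """Exact I(z; y) in nats."""
    p_z, p_y = marginals(p_zy)
    return sum(
        v * math.log(v / (p_z[i] * p_y[j]))
        for i, row in enumerate(p_zy)
        for j, v in enumerate(row)
        if v > 0
    )


def variational_lower_bound(p_zy, q_y_given_z):
    """H(y) + E_{p(z,y)}[log q(y|z)], i.e. H(y) minus the expected cross-entropy of q."""
    _, p_y = marginals(p_zy)
    expected_ce = -sum(
        v * math.log(q_y_given_z[i][j])
        for i, row in enumerate(p_zy)
        for j, v in enumerate(row)
        if v > 0
    )
    return entropy(p_y) - expected_ce


# A deliberately imperfect classifier q(y|z); each row sums to 1.
Q_ROUGH = [[0.6, 0.4], [0.5, 0.5], [0.4, 0.6]]
# The exact posterior p(y|z) = p(z, y) / p(z) makes the bound tight.
P_Z, _ = marginals(P_ZY)
Q_EXACT = [[v / P_Z[i] for v in row] for i, row in enumerate(P_ZY)]

print(mutual_information(P_ZY),
      variational_lower_bound(P_ZY, Q_ROUGH),
      variational_lower_bound(P_ZY, Q_EXACT))
```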

Upper bound of I(z; s). Assuming s is a discrete variable, the mutual information can be written as

By Jensen's inequality, I(z; s) is upper bounded by:
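One standard Jensen-type bound of this kind, assuming the class-conditional feature distributions p(z|s) are modeled as Gaussians, is the pairwise-KL bound \(I(z;s) \le \sum_{s}\sum_{s'} p(s)\,p(s')\,\mathrm{KL}(p(z|s)\,\|\,p(z|s'))\). It follows from writing \(p(z) = \sum_{s'} p(s')\,p(z|s')\) and applying Jensen's inequality to the logarithm of that mixture, and each KL term then has a closed form. The sketch below evaluates this bound for diagonal Gaussians and compares it with a numerically integrated I(z; s) for a 1-D example. It illustrates the general technique and is not necessarily the exact estimator used in this paper; the diagonal-covariance assumption, the example parameters, and all function names are hypothetical.

```python
import math


def kl_diag_gaussian(mean_p, var_p, mean_q, var_q):
    """Closed-form KL(N(mean_p, diag(var_p)) || N(mean_q, diag(var_q))) in nats."""
    return 0.5 * sum(
        math.log(vq / vp) + (vp + (mp - mq) ** 2) / vq - 1.0
        for mp, vp, mq, vq in zip(mean_p, var_p, mean_q, var_q)
    )


def pairwise_kl_upper_bound(priors, means, variances):
    """sum_s sum_s' p(s) p(s') KL(p(z|s) || p(z|s')) for Gaussian p(z|s)."""
    return sum(
        ps * pt * kl_diag_gaussian(means[s], variances[s], means[t], variances[t])
        for s, ps in enumerate(priors)
        for t, pt in enumerate(priors)
    )


def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def mutual_information_1d(priors, means, variances, lo=-20.0, hi=20.0, n=40000):
    """Reference value of I(z; s) for 1-D features by midpoint-rule integration."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * h
        # joint[s] = p(s) p(x|s); their sum is the mixture density p(x)
        joint = [p * gaussian_pdf(x, m[0], v[0]) for p, m, v in zip(priors, means, variances)]
        p_x = sum(joint)
        total += h * sum(j * math.log(j / (p * p_x)) for p, j in zip(priors, joint) if j > 0)
    return total


PRIORS = [0.5, 0.5]          # hypothetical binary sensitive attribute s
MEANS = [[-1.0], [1.0]]      # hypothetical class-conditional feature means
VARIANCES = [[1.0], [1.0]]

bound = pairwise_kl_upper_bound(PRIORS, MEANS, VARIANCES)  # 1.0 for these values
mi = mutual_information_1d(PRIORS, MEANS, VARIANCES)
print(mi, bound)  # the numerical I(z; s) stays below the bound
```

Because every term is a closed-form KL divergence, the bound is differentiable in the Gaussian parameters, which makes it convenient as a training-time privacy loss.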

C Proof of Lemma 2

Proof

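We prove by contradiction. Since \(C\boldsymbol{\beta } = G^T \boldsymbol{d}\), the formula in (10) can be written as

\[ \|C\boldsymbol{\beta } - \boldsymbol{a}\|_2^2 = \|G^T \boldsymbol{d}\|_2^2 - 2\,\boldsymbol{a}^T G^T \boldsymbol{d} + \|\boldsymbol{a}\|_2^2. \]

If \(\boldsymbol{a}^T G^T \boldsymbol{d} < 0\) at convergence, then \(||C\boldsymbol{\beta } - \boldsymbol{a}||_2^2 > ||\boldsymbol{a}||_2^2\), which exceeds the value \(||\boldsymbol{a}||_2^2\) attained at \(\boldsymbol{d} = \boldsymbol{0}\). This contradicts the optimality of the current solution. Thus the solution of (10) satisfies \(\boldsymbol{a}^T G^T \boldsymbol{d} \ge 0\).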
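Lemma 2 can also be checked numerically. The sketch below solves the box-constrained least-squares problem \(\min_{\boldsymbol{\beta} \in [-1,1]^m} \|C\boldsymbol{\beta} - \boldsymbol{a}\|_2^2\) with \(C = G^T G\) by projected gradient descent started at \(\boldsymbol{\beta} = \boldsymbol{0}\), forms \(\boldsymbol{d} = G\boldsymbol{\beta}\), and verifies \(\boldsymbol{a}^T G^T \boldsymbol{d} \ge 0\). It assumes, as in these proofs, that the columns of G are the loss gradients and that \(\boldsymbol{d} = G\boldsymbol{\beta}\), so \(G^T\boldsymbol{d} = C\boldsymbol{\beta}\); the random G and a are hypothetical, and projected gradient descent is our choice of solver, not necessarily the one used in the paper. Because a step of \(1/(2\|C\|_F^2)\) never increases the objective, every iterate keeps \(\|C\boldsymbol{\beta} - \boldsymbol{a}\|_2^2 \le \|\boldsymbol{a}\|_2^2\), and since \(\|C\boldsymbol{\beta} - \boldsymbol{a}\|_2^2 = \|G^T\boldsymbol{d}\|_2^2 - 2\boldsymbol{a}^T G^T\boldsymbol{d} + \|\boldsymbol{a}\|_2^2\), this already forces \(\boldsymbol{a}^T G^T \boldsymbol{d} \ge 0\).

```python
import random


def matvec(M, v):
    """Matrix (list of rows) times vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def solve_box_lsq(C, a, iters=5000):
    """Projected gradient descent for min ||C beta - a||^2 subject to beta in [-1, 1]^m.

    Starting from beta = 0 with step 1 / (2 ||C||_F^2) <= 1 / L, the objective is
    non-increasing, so it never exceeds its starting value ||a||^2.
    """
    step = 1.0 / (2.0 * sum(x * x for row in C for x in row))
    Ct = transpose(C)
    beta = [0.0] * len(a)
    for _ in range(iters):
        residual = [r - ai for r, ai in zip(matvec(C, beta), a)]
        grad = [2.0 * g for g in matvec(Ct, residual)]
        beta = [min(1.0, max(-1.0, b - step * g)) for b, g in zip(beta, grad)]
    return beta


random.seed(0)
n, m = 5, 3                                                         # parameter dim, number of losses
G = [[random.gauss(0.0, 1.0) for _ in range(m)] for _ in range(n)]  # column j = gradient of loss j
Gt = transpose(G)
C = [[dot(gi, gj) for gj in Gt] for gi in Gt]                       # C = G^T G
a = [random.gauss(0.0, 1.0) for _ in range(m)]                      # hypothetical target vector a

beta = solve_box_lsq(C, a)
d = matvec(G, beta)                                                 # d = G beta
GTd = matvec(Gt, d)                                                 # equals C beta
objective = sum((x - ai) ** 2 for x, ai in zip(GTd, a))
print(objective <= dot(a, a), dot(a, GTd) >= 0.0)
```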

D Proof of Lemma 3

Proof

When rank(C) = rank(G) = m, the matrix C is invertible, so we can always find \(\boldsymbol{\beta }^{*}\) such that \(C\boldsymbol{\beta }^{*} = \boldsymbol{l}\). Because of the range constraint on \(\boldsymbol{\beta }\), we scale \(\boldsymbol{\beta }^{*}\) into \([-1,1]^m\), which does not alter the direction of \(C\boldsymbol{\beta }^{*}\). Hence \(G^T \boldsymbol{d} = C \boldsymbol{\beta } = k \boldsymbol{l} \succ \boldsymbol{0}\) for some \(k>0\), and \(\boldsymbol{a}^T G^T \boldsymbol{d} = k \boldsymbol{a}^T \boldsymbol{l} = 0\), where the last equality follows from our claim that \(\boldsymbol{a}^T \boldsymbol{l} = 0\).
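The construction in this proof can be traced on a small hypothetical example with m = 2 losses and a full-rank \(C = G^T G\): solve \(C\boldsymbol{\beta}^{*} = \boldsymbol{l}\), scale \(\boldsymbol{\beta}^{*}\) into \([-1,1]^m\), and confirm that \(G^T\boldsymbol{d} = C\boldsymbol{\beta} = k\boldsymbol{l}\) with \(k > 0\) and \(\boldsymbol{a}^T G^T \boldsymbol{d} = 0\) when \(\boldsymbol{a} \perp \boldsymbol{l}\). The matrices and vectors are made up for illustration, \(\boldsymbol{d} = G\boldsymbol{\beta}\) is assumed as in the other proofs, and the scaling rule (divide by \(\max_i |\beta^{*}_i|\) when it exceeds 1) is one valid choice among many.

```python
def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small, nonsingular system."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def matvec(M, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]


G = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]                        # hypothetical: columns are two loss gradients in R^3
Gt = [list(col) for col in zip(*G)]
C = [[sum(x * y for x, y in zip(gi, gj)) for gj in Gt] for gi in Gt]  # C = G^T G = [[2, 1], [1, 2]]
l = [3.0, 6.0]                          # hypothetical l, componentwise positive
a = [2.0, -1.0]                         # hypothetical a with a^T l = 0

beta_star = solve_linear(C, l)          # C beta* = l, solvable because rank(C) = m
scale = max(1.0, max(abs(b) for b in beta_star))
beta = [b / scale for b in beta_star]   # beta now lies in [-1, 1]^m
k = 1.0 / scale                         # G^T d = C beta = k l with k > 0
d = matvec(G, beta)                     # d = G beta
GTd = matvec(Gt, d)
print(beta, GTd, sum(ai * gi for ai, gi in zip(a, GTd)))  # [0.0, 1.0] [1.0, 2.0] 0.0
```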

E Proof of Theorem 1

Proof

If \(\theta \) is a Pareto optimal solution when solving (12), then by Pareto criticality ([5], Ch. 4), G has rank \(m-1\). Hence rank(C) = rank(G) = \(m-1\). Note that \(\boldsymbol{a} \ne \boldsymbol{0}\) at this point; otherwise the optimization would already have terminated with \(\boldsymbol{d} = \boldsymbol{0}\).

Let \( \mathcal {S} = \{ C \boldsymbol{\beta } \mid \boldsymbol{\beta } \in [-1,1]^m\}\), let Col(C) be the column space of C, and let Null(C) be the null space of C. Clearly \(\mathcal {S} \subseteq Col(C)\), and since \(C = G^T G\) is symmetric, Col(C) and Null(C) are orthogonal complements. Minimizing the objective of (12) makes \(C \boldsymbol{\beta }\) the best approximation of \(\boldsymbol{a}\) on \(\mathcal {S}\). We now argue by contradiction. Assume \(\boldsymbol{d} = \boldsymbol{0}\); then \(C \boldsymbol{\beta } = G^{T} \boldsymbol{d} = \boldsymbol{0}\), so the best approximation of \(\boldsymbol{a}\) on \(\mathcal {S}\) is \(\boldsymbol{0}\). Because \(\mathcal {S}\) contains a neighborhood of \(\boldsymbol{0}\) within Col(C), the orthogonal projection of \(\boldsymbol{a}\) on Col(C) must also be \(\boldsymbol{0}\); otherwise a small multiple of that projection would lie in \(\mathcal {S}\) and be strictly closer to \(\boldsymbol{a}\). Hence \(\boldsymbol{a} \in Null(C)\). Since rank(C) = \(m-1\), Null(C) is one-dimensional and spanned by \(\boldsymbol{a} \ne \boldsymbol{0}\); because \(\boldsymbol{a}\) and \(\boldsymbol{l}\) are orthogonal, \(\boldsymbol{l} \in Null(C)^{\perp} = Col(C)\). Therefore \(\exists \boldsymbol{\alpha } \ \mathrm {s.t.} \ C \boldsymbol{\alpha } = G^T G \boldsymbol{\alpha } = \boldsymbol{l} \succeq \boldsymbol{0}\), so the direction \(\boldsymbol{d} = G \boldsymbol{\alpha }\) decreases all losses, which contradicts Pareto optimality. Therefore, \(\boldsymbol{d} \ne \boldsymbol{0}\).

By Lemmas 1, 2 and 3, the direction \(\boldsymbol{d}\) found by (12) always satisfies \(\boldsymbol{a}^T G^T \boldsymbol{d} \ge 0\); hence, with a proper step size, \(\mu \) keeps decreasing.


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yu, X., Xiang, L., Wang, S., Long, C. (2024). Privacy-Preserving Split Learning via Pareto Optimal Search. In: Tsudik, G., Conti, M., Liang, K., Smaragdakis, G. (eds) Computer Security – ESORICS 2023. ESORICS 2023. Lecture Notes in Computer Science, vol 14347. Springer, Cham. https://doi.org/10.1007/978-3-031-51482-1_7


DOI: https://doi.org/10.1007/978-3-031-51482-1_7


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-51481-4

Online ISBN: 978-3-031-51482-1

eBook Packages: Computer Science, Computer Science (R0)