Abstract

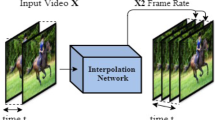

Prevalent deep-learning-based video frame interpolation (VFI) methods are mostly pre-trained and require an optical-flow model to obtain prior knowledge. However, pre-training is often time-consuming and may introduce unexpected artifacts when the model is applied to a test domain that differs significantly from the training domain. Alternatively, implicit neural representations have shown the ability to synthesize novel views from sparse images without pre-training. In this paper, we treat VFI as a special case of novel view synthesis and leverage implicit neural representations to perform VFI without pre-training or an optical-flow model. We propose the Bidirectional Regularization Framework (BiRF), a novel VFI method that is trained per scene and requires only two input frames; this is fundamentally different from existing methods, which rely on pre-trained weights containing extensive prior knowledge. We demonstrate that BiRF, even without prior knowledge, can generate interpolated frames comparable or even superior to those of prevalent pre-trained models.
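To make the idea of treating VFI as view synthesis more concrete, the following is a minimal sketch of how a coordinate-based implicit representation could be queried at an intermediate timestamp. It is not the BiRF architecture, which the abstract does not specify. The function names (`init_mlp`, `forward`, `render_frame`), the layer sizes, and the sine and sigmoid activations are all illustrative assumptions, and the weights are random rather than trained. In a per-scene setting one would first fit such a network to the two input frames (for example at t = 0 and t = 1) before querying it; that fitting step and any regularization are omitted here.

```python
import math
import random


def init_mlp(layer_sizes, seed=0):
    """Create random weights for a small fully connected network.

    The layer sizes are hypothetical and not taken from the paper.
    """
    rng = random.Random(seed)
    params = []
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        bound = 1.0 / math.sqrt(fan_in)
        weights = [[rng.uniform(-bound, bound) for _ in range(fan_in)]
                   for _ in range(fan_out)]
        biases = [0.0] * fan_out
        params.append((weights, biases))
    return params


def forward(params, coord):
    """Map one (x, y, t) coordinate to an RGB triple in (0, 1)."""
    h = list(coord)
    last = len(params) - 1
    for i, (weights, biases) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, h)) + b
             for row, b in zip(weights, biases)]
        if i < last:
            # Sine activation on hidden layers (an assumed, common choice
            # for implicit neural representations).
            h = [math.sin(v) for v in z]
        else:
            # Sigmoid keeps the predicted colour channels inside (0, 1).
            h = [1.0 / (1.0 + math.exp(-v)) for v in z]
    return h


def render_frame(params, height, width, t):
    """Query the network on a pixel grid at time t (0 = first frame, 1 = second)."""
    frame = []
    for row in range(height):
        y = 2.0 * row / max(height - 1, 1) - 1.0  # normalise to [-1, 1]
        line = []
        for col in range(width):
            x = 2.0 * col / max(width - 1, 1) - 1.0
            line.append(forward(params, (x, y, t)))
        frame.append(line)
    return frame


# Untrained network: the output is meaningless as an image, but it shows the
# query pattern for an in-between frame at t = 0.5.
params = init_mlp([3, 16, 16, 3])
frame_mid = render_frame(params, height=4, width=6, t=0.5)
```

Because time is just another input coordinate, the same network can be evaluated at any t between the two observed frames, which is what makes the view-synthesis framing applicable to interpolation.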

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

He, Y., Zhang, W., Deng, J., Cong, Y. (2024). Prior-Knowledge-Free Video Frame Interpolation with Bidirectional Regularized Implicit Neural Representations. In: Rudinac, S., et al. MultiMedia Modeling. MMM 2024. Lecture Notes in Computer Science, vol 14556. Springer, Cham. https://doi.org/10.1007/978-3-031-53311-2_9

DOI: https://doi.org/10.1007/978-3-031-53311-2_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-53310-5

Online ISBN: 978-3-031-53311-2

eBook Packages: Computer Science, Computer Science (R0)